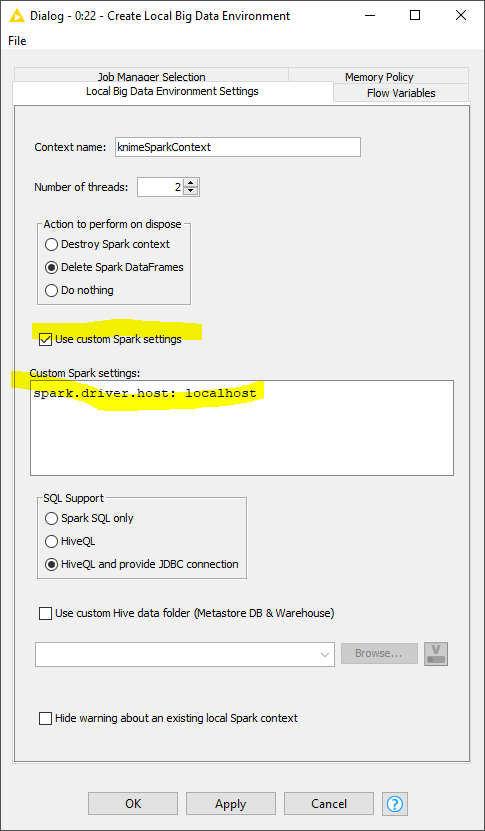

I didn’t see anyone else report this issue, but in case it happens to others: I ran into a snag when using the “Create Local Big Data Environment” node. It set up my local environment at 10.0.0.10, but that IP address did not point to my localhost, so every activity after the environment was created timed out. Nodes that ran on that environment failed with very general error messages that just read “Job Aborted” or possibly “due to Connection Timeout”. Even the Spark Web UI timed out when I tried to open it.

Once it was clear that this address was not pointing to my localhost, I ran the following command to create an IP alias, and everything immediately started working. I am on macOS (High Sierra), so the command is specific to that environment. I thought I would post it in case it helps anyone else. I think the key to identifying this problem is that the Web UI just shows a blank page, because it cannot connect to the provided IP address.

sudo ifconfig en0 alias 10.0.0.10 127.0.0.1
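If you want to confirm the symptom before or after adding the alias, a quick TCP reachability check can help. This is a minimal sketch, not part of the original fix. The helper name `is_reachable` is hypothetical, and the port 4040 is an assumption: it is Spark's default Web UI port, but the port your local big data environment actually uses may differ.

```python
import socket


def is_reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the address and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, and timeouts
        # (socket.timeout is a subclass of OSError).
        return False


if __name__ == "__main__":
    # 10.0.0.10 is the address from this post; 4040 is an assumed port
    # (Spark's default Web UI port), so adjust it for your setup.
    host, port = "10.0.0.10", 4040
    if is_reachable(host, port):
        print(f"{host}:{port} is reachable")
    else:
        print(f"{host}:{port} is not reachable (timed out or refused)")
```

If the check times out before the alias is added and succeeds afterwards, that matches the behaviour described above.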

Cheers,

Chance