Hi,

I made a data model “DWH-like” with 3 fact tables that would Joined between themselves and with a series of dimension tables and datamart with the results waited. Now I have to use this data model for creating a KNIME dashboard (recreating here a dashboard created before in Qlikview, and after in PowerBI): but in BI tools as PowerBI (model view), QlikSense (Page Tables Associations), SAP Busines Objects BI (universe) we can use a graphic tools /semantic layer (between the parentheses of the product list we have the name of them) that allows to create quickly the relationship on the data model, without necessity of other DB manipulations. Does it exist in KNIME a sort of semantic layer/graphic tool o similar stuff or other possibility, for manifesting these joins / making this joins active and usable for KNIME dashboard, without create and adding a unique table in the DB with all the data of this data-model that would inflate uselessly with data yet present in the Oracle DB schema where we made the manipulation and we had the final data model made via KNIME ETL yet with all the data and all the necessary tables?

I assume you use KNIME Server because of this question.

As far as I know no. KNIME is not meant for creating star/snowflake datamodels with relationships. You can create fact and dimension tables but at the end those are then send to a database or Power BI/Tableau directly.

br

But I have to rebuild the dashboards PowerBI in KNIME, because we had constrained to abandon PowerBI because it cannot satisfy the needs of access in and out of the organization for which I am working at the moment. NB: The Fact Tables, the Dimensions Tables, The DataMarts are yet built via the ETL KNIME and have the same data yet verified in PowerBI which we have to abandon. Yes we have available a KNIME server. Now how can we use the datamodel created with all the necessary data and tables, used for the other BI Application in KNIME, without create another great table with all the data, inflating uselessly the schema ORACLE?

As I said I do not know another way as you already pointed out. Create one giant table with all the data and use that.

br

@DanGenEire typically for this kind of task you would have a database like oracle or Postgres or MariaDB.

How large are the datasets and are they currently stored in knime tables on the KNIME server? (you will have to grant special permissions for that).

The other thing I can think about is using a standalone SQL database like H2 or SQLite. That would depend on how large and complex the data is and if such a construct would be able to handle the load (how many people would access the BI?).

For the dashboard you are aware of Data Apps I assume?

Hi @mlauber71 and thank you for your answers and questions. For the project we use data from a Drupal application (that use a DB not E-R) stored in a MYSQL source and yet preprocessed in part for creating the first bases of data model: these data, for the policies of the organizations for which I am working, are transferred from mySQL to an ORACLE schema via a workflow KNIME: we do not use absolutely excel files or similar, we made direct transfer by the KNIME DB nodes. After with these data imported in ORACLE, we create the data model for the BI Tools, and for doing that, we use another KNIME workflow: when the workflow finishes its job, we have all the tables and data needed and generally potable for the BI Tools. At the moment the Oracle schema (dataset) has a size of 1 Gb, but I think, it will increase soon. When it will be fully operational , I think that 1500 users or more, in and out of EU, should have access to this KNIME Dashboard/Apps. Yes I am aware of the KNIME APPS and we would allow that the users would use them. Sorry, one think is not clear to me: when you tell about “the datasets and are they currently stored in KNIME tables on the KNIME server?”; What do you mean with that?

Kind Regards.

@DanGenEire OK I understand. We are talking about a serious task. One question would be to scale up the KNIME server/business hub and handle the logins.

The Data Apps would function as dashboards probably. I think they will require some planning and configuration. This might depend on what you are planning to do and how often the data will be updated. Would you take the data directly from the Oracle or would you store intermediate data within KNIME.

Maybe you can try to state again what it is the community might help you with.

Hi, yes is a serious task. For the moment we think to take the data directly from ORACLE for a data refresh that will be daily. But, if we could have some technical advantage to use the KNIME Dataset we could analyze this possibility and it depends also from the costs

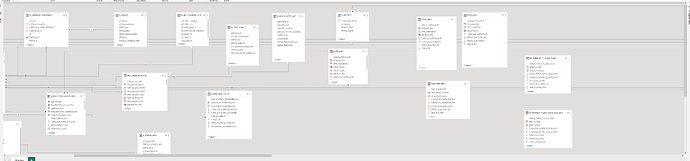

I attach the data model used in PowerBI and

On he data-model reproduced in KNIME I have all the necessary data and tables (a part some formula to add or some useful flag-field). Now I would try to know, from the community, what is the more efficient and “quick” method for using the joins of data-model for the dashboards results? What could replace a semantic layer as the BO Universe or the Association window of QlikSense or the relantioship window of PowerBI in KNIME dashboard? What is the better mode to avoid to create on this schema ORACLE a great table with all the data, that would occupy a lot of space on the schema, with the data that would be the same contained on the datamodel yet created in the ORACLE DB via KNIME ETL, renouncing in this mode too at the modularity and easy maintenance of data-model? Kind Regards.

Hi, You can close this discussion. I found as I can do something equivalent to Semantic Layer of other products, using the da manipulation node as DBjoin, Row Filters and column aggregation. Thank you to @mlauber71

What was the reason to cut PBI? Just curious

br

EMNIEs is a EC’s system for managing the return of illegal immigrant, with some help for favorizing theirs reintegration in the country of origin. This dashboard system should be available (always via an authentication and RLS) also for several ONG’s and similar entities in all the world, then also if out of EC and also if the e-mail addresses is not in Microsoft365. The policies of EC DIGIT restreints the access out of EC network with all the Microsoft products, PowerBI included. With KNIME these restraints policies are not present and they could grant the necessary accessibility to Member State and ONG, out or in the EC. Then for that I have to reproduce all the dashboards of PowerBI in KNIME and we would distribute them dashboard via Knime APP.