Hi there

Maybe it is just me, but I am puzzled about the memory management by hand and there is a very good reason, I cannot see yet. However, I would appreciate if, memory management were automatic. I am not sure, if all nodes are equally affected by memory shortage. I presume, it is mainly the node operating on the entire dataset, like “GROUP BY” and “SORTER”. An outline.

- KNIME estimates the memory requirements of each node before the start of said node. For this, it can take into account statistics of previous nodes leading to the node in question.

- It decides whether to run in memory or not. The decision can be garnered with the knowledge of already running nodes and their memory expectations. And if there is a setting where one can make KNIME wait to run a node until there are no parallel processes running, endangering the memory availability.

Maybe it can even be boiled down to starting everything fully in memory and when a node is running out of memory, either take disc in addition or move the intermediate results to disc and continue there or pause the node if parallel running nodes that use much memory are present.

I very much feel, my outline is a very rough one one can expand on.

Hi!

Not sure what information you are after but maybe I can share some facts and examples.

The memory management in KNIME is “automatic”. Most nodes are very “linear” and require only little main memory since they only perform a single table scan. Data is handled by some “backend”, that is columnar table backend or row-based backend. Both are topics by itself (a good intro the columnar table backend is in this blog).

More interesting nodes (because algorithmically more challenging) are the ones that require sorting such as the Sorter and – under some configuration – GroupBy and Pivot, or Similarity Search. These nodes are still somewhat simple since their memory consumption can be tracked while they run. A simple sort runs in batches: From the input it iteratively reads a large chunk into main memory (until memory consumption hits a limit), sorts that chunk in memory (memory is constant), writes it out (memory released), continues with the next chunk until the end; afterwards it merges the presorted chunks in a hierarchical fashion. If you then had two, three, many of these nodes in parallel, they would compete for the memory. Since the memory is observed while a chunk is built up, there is no memory shortage - it will only use more (but smaller) chunks, which may increase the number of table scans (increase I/O) and hence increase execution time. (In case you find this pattern often in your workflows and assume that it causes problems you can force a sequential execution by connecting these nodes via flow variable port - in most cases this will not be needed, though.)

And then there is a third category of nodes that operate all in memory. There aren’t many of those but nodes like a Random Forest Learner or k-nearest neighbor cache data in memory and they will do that until memory exhaustion (which is when that node will fail).

Again, not sure if that helps but hopefully it clarifies a bit how memory intensive nodes work internally.

3 Likes

@wiswedel Thanks for getting at this issue.

I have experienced memory related problems with my solution to the end of year challenge of KNIME It!

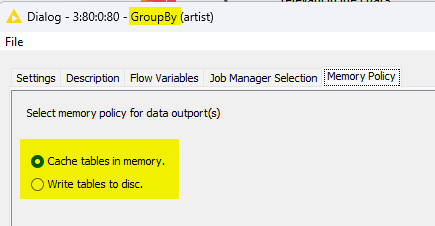

For the development, I used a 24 GB RAM desktop running Windows. I could run the entire dataset in memory there. For a presentation, I used an 8 GB RAM Ubuntu laptop. Marked Group By nodes failed.

After switching to disc memory policy, the simpler of the two ran through.

However, for the more “complex” (one more group column), I had to also reduce the amount of data.

I was just saying that I do not see any reason one has to switch manually. To me, a memory policy radio element would make more sense if one could select if one wants to automatically fall back to disc if memory fails or whether to fail immediately.

And I was expanding on ideas of how to prevent a fallback to the entire node process if one dynamically could switch to disc based caching while still running in memory when memory is getting scarce.

And thanks for the link to your blog post. Are there plans to not only be able to choose the general backend orientation but also the orientation for single nodes. While I immediately see the benefits for aggregation processes in using columnar data storage, I feel, in my small world, that joins or filters might be affected detrimental to columnar storage. Having said that, I guess one would appreciate tool support with testing the workflow on the combinations of row-columnar-storage because one would need to convert the data from one storage type to the other when the node requires so. Possibly, there are already test data showing that those conversions usually result in a time loss in the end.

Kind regards

Thiemo

1 Like

Hi Thiemo,

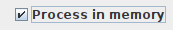

thanks for providing the workflow. I tested your workflow and then noticed that you (?) have set the memory option in the problematic nodes to “Process in memory”. This is not the default.

If I uncheck the box both nodes run through in almost no time (both in ~2 seconds).

Can you confirm?

– Bernd

PS: This option hardly ever makes sense to set unless you expect very few outputs/groups - in this case the data has 524k input rows and the aggregated data has 172k groups (=output rows).

2 Likes

Hi Bernd

Thanks for checking on the workflow. I did not compare the runtime specifics, as I was under the impression to do stuff in memory would always be faster than to cache on disc, especially if the disc is non-ssd.

I am not sure whether I understood your term “outputs/groups” correctly. My group by do indeed only group over one and two columns respectively. Maybe, you speak of the number of output columns? To me, coming from data warehousing, half a million rows is not that much. Then again, I presume, my hardware at home is not quite so powerful as at work where my feeling of data size was built.

I wonder why this option exists in the first place, then? If the in memory run only works for little data where the execution time is in the (fraction of?) seconds anyway, there is no real benefit, it seems to me. But in my case, reason for confusion.

Be it as it may, I still think it would be great if KNIME started all its processes in memory and if, shortage of memory is detected, nodes would automatically shift into disc caching, and if that does not work, after the node fail for lack of memory, KNIME would start from the failed node running only the failed node in disc mode.

In my case, either:

- GroupBy (artist) detects that RAM will not be available sufficiently

- Said node shifts its content in RAM to disc and continues and completes there.

or:

- GroupBy (artist) fails running out of RAM

- KNIME restarts the process at GroupBy (artist) node with that node in disc caching mode, and, if originally scheduled that way, continues with Column Renamer (column name gets …) in memory mode (if there was such a mode distinction anyway).

or a combination of the two ways above:

- as in the first way

- as in the first way, but if that fails for what ever reason too

- as in 2. of the second way

Kind regards

Thiemo

Dear Thiemo,

Most certainly we should hide this option better when the UI of the dialog is updated to the modernized frontend. And users familiar with database terminology would probably find it more useful to call the option “hash group by” (vs. “sort group by”), which is what this option really controls - but only a small subset of KNIME users will be able to make sense out of that.

In any case, it’s true that the node does not determine the best optimal plan on how to run the grouping. By default it applies a sorting to the input table based on the group definition and then determines the output. This is guaranteed to scale for large data and large expected result data (both in terms of numbers of rows). In case the data fits into main memory, it’s one scan over the input data [that’s a change made for 5.2, see changelog - AP-20550].

The “process in memory” option applies a “hash group by”, that is all groups and their aggregation is kept in main memory. It’s potentially more memory expensive if you can expect many groups (= output rows). (It’s important to note that the memory consumption of this grouping algorithm depends purely on the number of computed output rows/groups - so it scales well if you have really large input data but few output groups only, say a couple of thousand).

– Bernd

4 Likes