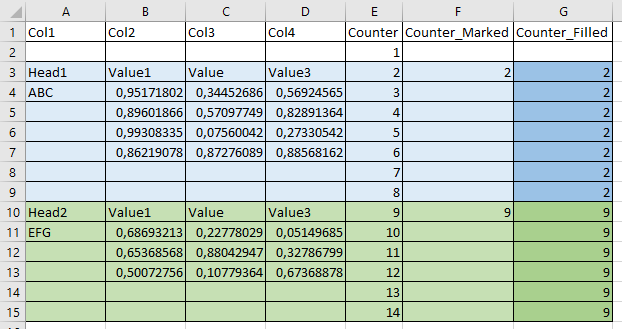

@willy_oracle typically you would number all lines with a Counter. Then identify the ‘headers’ with a Rule Engine rule (`$Col1$ LIKE "Head*" => $Counter$`) and keep that (starting) row number in a separate column. Then fill the missing values with the previous value, so that a block emerges marking each data table.
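
To make the counter / rule / fill-down idea concrete outside of KNIME, here is a minimal Python sketch. It assumes the first column arrives as a list of strings, that headers are recognised by the example pattern "Head*" (matched with `fnmatch.fnmatchcase`, whose `*` wildcard plays the role of the one in the `LIKE` rule here), and that rows before the first header belong to no block. The function name `mark_blocks` is hypothetical.

```python
from fnmatch import fnmatchcase
from typing import Optional


def mark_blocks(col1: list[Optional[str]], pattern: str = "Head*") -> list[Optional[int]]:
    """Return, for each row, the row number of the most recent header row."""
    block_ids: list[Optional[int]] = []
    current: Optional[int] = None  # no header seen yet, so rows stay unassigned
    for counter, value in enumerate(col1, start=1):  # the Counter column (1-based)
        # Rule: Col1 LIKE "Head*" => Counter (otherwise missing)
        if value is not None and fnmatchcase(value, pattern):
            current = counter
        # Fill missing values with the previous value (fill down)
        block_ids.append(current)
    return block_ids
```

For example, `mark_blocks(["Report title", "Head A", "1", "2", "", "Head B", "3"])` returns `[None, 2, 2, 2, 2, 6, 6]`: each row carries the row number of the header that opened its block, which is the grouping key the loop over blocks needs.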

So now you have the blocks marked and can use a Table Row to Variable Loop Start or a Group Loop Start node to do something with each block (export it, delete lines with missing values, …).
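
The loop over blocks can be sketched in Python as well. This is not how KNIME runs a Group Loop Start internally; it only mimics the idea: take rows that already carry a block id from the fill-down step, handle one block per iteration, and in the loop body drop rows with missing cells. The `split_blocks` name and the `(block id, cells)` row layout are assumptions for illustration.

```python
from itertools import groupby
from typing import Optional

# Hypothetical row layout: (block id from the fill-down step, cell values)
Row = tuple[Optional[int], list[Optional[str]]]


def split_blocks(rows: list[Row]) -> dict[int, list[list[Optional[str]]]]:
    """Group consecutive rows by block id and drop rows with missing cells."""
    blocks: dict[int, list[list[Optional[str]]]] = {}
    # groupby only merges consecutive rows; fill-down makes every block contiguous
    for block_id, group in groupby(rows, key=lambda r: r[0]):
        if block_id is None:
            continue  # rows before the first header belong to no block
        # Loop body: keep only rows where every cell has a value
        kept = [cells for _, cells in group if all(c not in (None, "") for c in cells)]
        blocks[block_id] = kept  # an export step could go here instead
    return blocks
```

With rows `[(None, ["Report title", ""]), (2, ["Head A", "Value"]), (2, ["x", "1"]), (2, ["", ""]), (6, ["Head B", "Value"]), (6, ["y", None])]`, the result is `{2: [["Head A", "Value"], ["x", "1"]], 6: [["Head B", "Value"]]}`.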

@mvaldes I tried a few things and came up with this. But I fear that if your Excel files do not all have this exact structure, it can get more complicated. You might need a more robust way to identify the column headers if their position can shift. Also, the two screenshots you provided do not seem to be from the same file.

So I think you will have to invest some more work.

The key to the approach might be to mark your blocks with a rule: what does the start of a table block look like? In this …

@Dalmatino16 with the help of the Counter Generation node you could number the lines.

Then you could use the Rule Engine to store the line number in a separate column (var_start, var_end) whenever a certain condition is met. Then keep only those columns and use a math node to calculate the start and the length. Finally, convert these into flow variables that you can use to steer your file import.
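
The start/length calculation can be sketched the same way. This Python version assumes the file is already available as a list of lines and that the start and end conditions are passed in as predicates; the `block_range` function, the `length` key, and the example "Head"/"Total" markers are hypothetical. It returns a dictionary standing in for the flow variables that would steer the import.

```python
from typing import Callable, Optional


def block_range(
    lines: list[str],
    is_start: Callable[[str], bool],
    is_end: Callable[[str], bool],
) -> Optional[dict[str, int]]:
    """Find the first block's start/end line numbers and its length."""
    var_start: Optional[int] = None
    var_end: Optional[int] = None
    for line_no, text in enumerate(lines, start=1):  # Counter: 1-based line numbers
        if var_start is None and is_start(text):
            var_start = line_no  # rule: remember the line where the block starts
        elif var_start is not None and is_end(text):
            var_end = line_no  # rule: remember the line where it ends (inclusive)
            break
    if var_start is None or var_end is None:
        return None  # no complete block found
    # Math step: the length counts both boundary lines
    return {"var_start": var_start, "var_end": var_end, "length": var_end - var_start + 1}
```

For instance, with lines `["Title", "", "Head", "a", "b", "Total", "notes"]`, a start predicate `lambda s: s.startswith("Head")` and an end predicate `lambda s: s.startswith("Total")`, the result is `{"var_start": 3, "var_end": 6, "length": 4}`. If your end marker sits one line below the data, subtract one from the length.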

A somewhat similar example:

Maybe you could upload 3 or 4 examples with varyi…

KNIME and Excel — An Overview of What You Can Do | by Markus Lauber | Low Code for Data Science | Medium