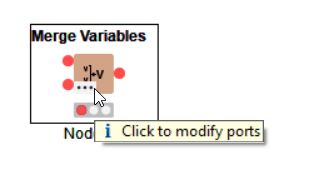

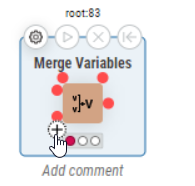

Hi @kevinnay, as you said, you can use flow variable connections to control the flow. The answer to the problem of a large number of flows is the Merge Variables node, which lets you add ports dynamically.

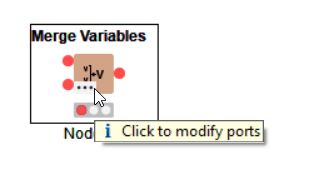

Classic UI

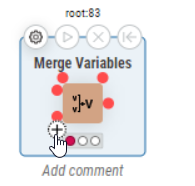

Modern UI

This can then be linked to your remaining node to ensure that the final GRANT only occurs after everything is completed.

You might even end up with some “modern KNIME art” like below!

Some thoughts though… Do you have multiple database connections? If so, great. If not, read on…

Parallel or “appearance of parallel” execution?

In the above mock-up I doubt that my DB SQL Executors would actually run in parallel, since they are all on the same JDBC connection, and JDBC drivers to my knowledge are normally “single threaded”. Therefore, whilst they may all appear to be processing in parallel (and KNIME helps with that illusion by showing an animated status bar on each of the nodes), each would likely be blocking the thread, so they would actually run serially, with each DB SQL Executor waiting its turn.

I haven’t looked at all databases and drivers, so it is possible there are exceptions, but I always assume that a single db connection can process only a single statement at a time.

Multiple DB Connections

This may be the kind of thing you are doing already. The following would probably come closer to parallel execution. As I have 5 connections used by 10 DB SQL Executors, I’d expect there to be 5 statements now able to process concurrently:
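To make that expectation a bit more concrete, here is a small Python simulation of the idea. It is only a sketch and does not touch KNIME or any real database: each “connection” is modelled as a lock that allows one statement at a time (my assumption from above), and the names `simulate`, `run_statement` and `work_seconds` are made up for the illustration.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def simulate(num_connections=5, num_statements=10, work_seconds=0.05):
    """Run pretend statements and report (completed, peak concurrency)."""
    # Assumption: one lock per connection = one statement at a time per connection.
    connection_locks = [threading.Lock() for _ in range(num_connections)]
    counter_lock = threading.Lock()
    stats = {"running": 0, "peak": 0, "completed": 0}

    def run_statement(statement_id):
        # Spread the executors evenly over the available connections.
        conn = statement_id % num_connections
        with connection_locks[conn]:      # wait for our turn on this connection
            with counter_lock:
                stats["running"] += 1
                stats["peak"] = max(stats["peak"], stats["running"])
            time.sleep(work_seconds)      # stand-in for the SQL doing its work
            with counter_lock:
                stats["running"] -= 1
                stats["completed"] += 1

    # Every "node" gets its own thread, so all of them look busy at once.
    with ThreadPoolExecutor(max_workers=num_statements) as pool:
        list(pool.map(run_statement, range(num_statements)))
    return stats["completed"], stats["peak"]


if __name__ == "__main__":
    done, peak = simulate()
    print(f"{done} statements completed, at most {peak} running at once")
```

Calling `simulate(num_connections=1)` models the single-connection mock-up: all the threads start together, but the peak never goes above 1. With 5 connections the peak can never exceed 5, however many executors are waiting.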

So, the part where I attempt to answer the question you actually asked… the Merge Variables node receives the flow from each of the “parallel” DB SQL Executors. Only once they have all completed does it pass flow to the final DB SQL Executor, and once that has completed, flow is passed to the DB Connection Closer, which closes all of the database connections and keeps the DBA happy.
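If it helps to see that ordering outside of KNIME, the same “wait for everything, then run the final step, then close” pattern can be sketched in plain Python. This is an analogy only, not how KNIME implements it: `run_workflow` and `execute` are hypothetical names, the SQL strings are placeholders, and a list stands in for the database so the order of events can be inspected.

```python
from concurrent.futures import ThreadPoolExecutor, wait


def run_workflow(statements, final_statement, max_workers=5):
    """Run statements concurrently, then the final one, then 'close'."""
    log = []  # records the order in which things happened

    def execute(sql):
        # Stand-in for a DB SQL Executor; a real one would send sql to the DB.
        log.append(sql)
        return sql

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(execute, sql) for sql in statements]
        wait(futures)            # plays the role of Merge Variables: block until all are done
        for future in futures:
            future.result()      # re-raise any failure so the final step is skipped

    execute(final_statement)          # the final GRANT runs only after everything else
    log.append("CLOSE CONNECTIONS")   # plays the role of DB Connection Closer
    return log


if __name__ == "__main__":
    parallel = [f"-- placeholder statement {i}" for i in range(10)]
    for entry in run_workflow(parallel, "-- placeholder final GRANT"):
        print(entry)
```

The first ten log entries can appear in any order, but the final statement is always second to last and the close is always last, which is the guarantee the Merge Variables connection gives you in the workflow.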

I’ve uploaded the above (runnable) demo workflows to the hub. They’re actually quite therapeutic to watch in the Modern UI.