Using KNIME 3.7.1 on Windows, the Parquet Reader node is intermittently dropping column values. I first noticed this when using the node together with a Parallel Chunk loop, where several of the chunks would error out because column values were missing. When I reread the Parquet file, it loads fine with all values. I just encountered the same issue when not running multiple parallel chunks, although my Parquet Reader node is still inside a Parallel Chunk loop (just running with 1 chunk). It seems like a bug caused either by the Parallel Chunk loop or just by the amount of load on the CPU (my workflow is fairly beefy, running Spark Collaborative Filter Learning on a multi-threaded local big data environment).

I had a similar issue with the Parquet Reader on Windows. It seemed as if it had stored values from a previous load and was still working with them. On one occasion several reloads helped, followed by deleting the reader and adding a new one.

Something could be wrong with the reader, which is bad because Parquet is supposed to be a standard format.

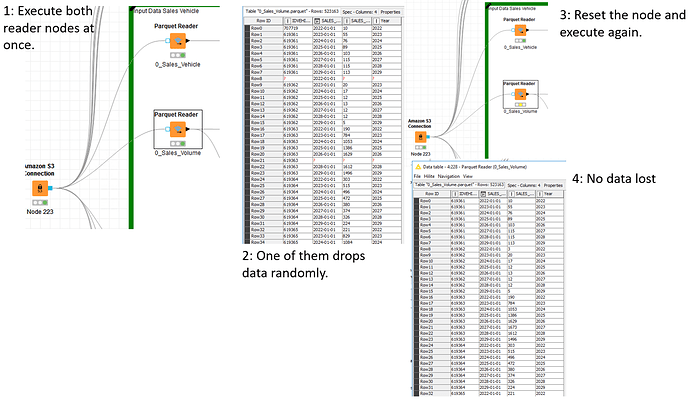

I encountered the same behavior as well. This is how it looks for me. I assume there is also some hierarchy to it: the node dropping data always seems to be the one that started execution last.

Hi @JulianSiebold,

welcome to the community! And thanks for your example.

There is currently a bug regarding parallel execution of the Parquet Reader. We are aware of the issue and will try to fix this as soon as possible.

Until then, you would need to execute the nodes one after the other. You can use flow variable connections to force the execution order.

Sorry about the inconvenience.

Best, Mareike

Checking back in: is there an estimate on when the Parquet Reader parallel execution bug will be fixed? I am running into this issue quite frequently (in v3.7.2), even when not using a parallel chunk executor. Just having two (or more) branches inadvertently read Parquet files at the same time is something I keep needing to be cognizant of, and we are using Parquet for everything we do!

I just ran into another issue where I was using a Table Writer/Reader with AWS S3 because there is no way to map a PortObject with the Parquet Writer/Reader nodes. The Table Reader is timing out frequently when reading from an S3 Remote Connection, and I have to download the file first and run the Table Reader on the local copy to avoid the timeout. The frustrating part is that the Try-Catch I had the Table Reader in isn’t picking up the timeout error, so my workflow just stops executing with a warning on the Table Reader node. The workaround is easy (downloading the file), but it would be nice if (A) Parquet IO supported PortObject serialization/deserialization and (B) Table Reader was more stable with remote connections.

I just had two different workflows running on the same machine, forgetting that each had a Parquet Reader. In this instance, the Readers did not seem to cause each other to drop data. Seems the contention is only for Readers in the same workflow.

Never mind - it turns out the data was corrupted (cells dropped) in one of the workflows (I didn’t inspect the other closely enough to determine whether there was any corruption). Sorry.
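For anyone who wants to check a load for dropped cells instead of eyeballing it, here is a minimal Python sketch. It assumes both a suspect load and a fresh reread of the same file have already been converted to lists of row dictionaries, with missing cells represented as `None`. The function names `missing_per_column` and `dropped_cells` are hypothetical helpers made up for illustration and are not part of any KNIME API.

```python
from collections import Counter


def missing_per_column(rows, columns):
    """Count missing (None or absent) cells per column in a list of row dicts."""
    counts = Counter()
    for row in rows:
        for col in columns:
            if row.get(col) is None:
                counts[col] += 1
    return {col: counts[col] for col in columns}


def dropped_cells(suspect_rows, reference_rows, columns):
    """Return columns where the suspect load has more missing cells than the reference."""
    suspect = missing_per_column(suspect_rows, columns)
    reference = missing_per_column(reference_rows, columns)
    return {
        col: suspect[col] - reference[col]
        for col in columns
        if suspect[col] > reference[col]
    }


# Made-up example data: the reread is complete, the suspect load lost one cell.
reference = [{"user": 1, "rating": 4.0}, {"user": 2, "rating": 3.5}]
suspect = [{"user": 1, "rating": None}, {"user": 2, "rating": 3.5}]

result = dropped_cells(suspect, reference, ["user", "rating"])
print(result)
```

An empty result means the suspect load has no more missing cells than the reread. It does not prove the two loads are identical, since it only compares missing-value counts per column.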

Hi @bfrutchey

happy to hear that this resolved itself. In KNIME AP 3.7 there was an issue with parallel execution of the Parquet Reader node, but this was fixed in 4.0. See issue BD-912 in our changelog: https://www.knime.com/changelog-v40

If this reappears (in KNIME AP >= 4.0), please drop me a message.

Thanks,

Björn
