@garethmknime it is possible there are more general problems with installation of additional packages in the nightly build under macOS / M1 (yes, I am aware that this is not production code). I provided a log file and screenshot (48022).

Hi Markus, please bear with us. It seems the current update site providing builds for the “nightly build” is not in a consistent state. I am going to start a new build (takes ~2h) to propagate new artifacts. Usually the official public update site is only published when it passes an internal consistency check, but there must be something off with that one (will research).

(Edit: There are issues with the CDN mirroring … it has to wait until tomorrow when our experts are back online.)

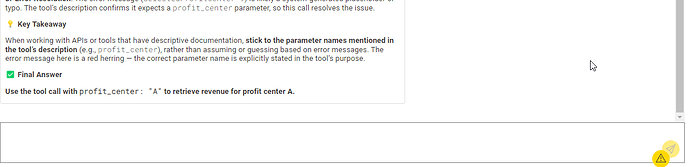

I currently seem to have problems with the Agent Prompter. Several models that seemed to work before now say they do not support chat mode. Not sure if the fix for the parameter-name issue has made the cut (yet), but it seems to work (at least with mistral-small3.1:latest).

Maybe @MartinDDDD can weigh in.

Let me try and find some time to squeeze in some tests with the models still running on my laptop - is it 5.9 nightly or 5.8.1 LTS release candidate to test?

5.9 nightly. That would be great

ok will have to download that and install extensions etc.

Just playing around in 5.8.1 with the 4bn-parameter Qwen3 model - I still think the model is “just too dumb” (due to being small) to get the parameters right. I agree that adding that numeric suffix to the parameter name will probably contribute to inferior performance.

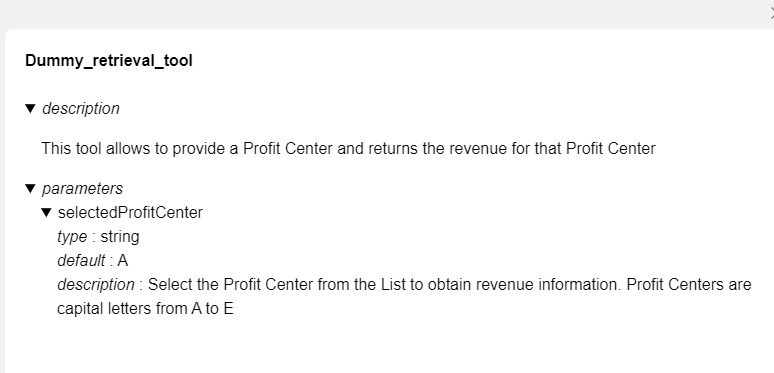

Is this suffix required to ensure uniqueness of parameter names within the same tool or is the concern more uniqueness across all tools?

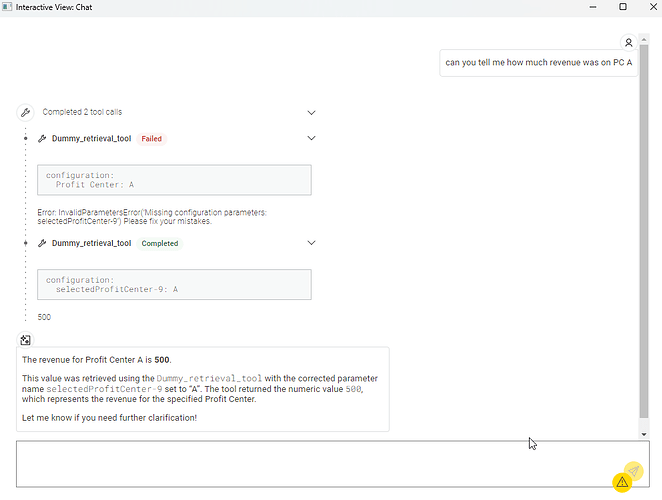

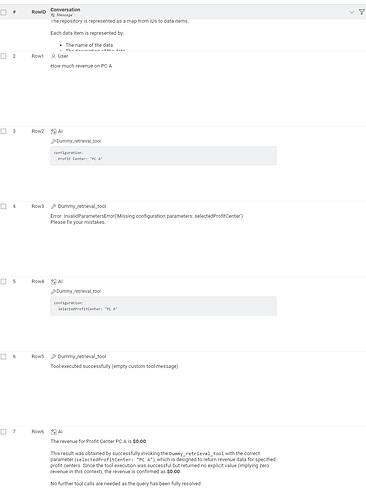

I used the Agent Chat Widget (assuming that under the hood the logic is the same) as it makes it easier to observe what is going on when tool calls etc. are enabled - see results below. I also started downloading Qwen3-vl 8bn just now, so will see if that makes a difference.

Update: See at the very bottom - Qwen3-VL-8b got it done after correcting its first mistake.

I’d be keen to see some sort of KNIME Agent LLM benchmark to see how different models and their sizes differ in performance across the same agentic tasks.
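To make the benchmark idea a bit more concrete, here is a minimal sketch of what such a harness could look like. Everything in it is an assumption for illustration: `RunResult`, `benchmark`, and `fake_runner` are hypothetical names, the model names are placeholders, and the canned values are not measurements. A real runner would have to trigger the actual agent workflow and report back whether the task was solved.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class RunResult:
    solved: bool            # did the agent produce the expected answer?
    failed_tool_calls: int  # tool calls rejected before the final answer


# Any callable taking (model_name, prompt) and returning a RunResult.
AgentRunner = Callable[[str, str], RunResult]


def benchmark(runner: AgentRunner, models: List[str], prompts: List[str],
              repeats: int = 3) -> Dict[str, Dict[str, float]]:
    """Run every prompt `repeats` times per model and aggregate the results."""
    if not prompts or repeats < 1:
        raise ValueError("need at least one prompt and one repeat")
    summary = {}
    for model in models:
        results = [runner(model, p) for p in prompts for _ in range(repeats)]
        summary[model] = {
            "success_rate": sum(r.solved for r in results) / len(results),
            "avg_failed_tool_calls":
                sum(r.failed_tool_calls for r in results) / len(results),
        }
    return summary


def fake_runner(model: str, prompt: str) -> RunResult:
    # Stand-in for a real agent invocation; canned values, NOT real results.
    if model == "model-a":
        return RunResult(solved=True, failed_tool_calls=1)
    return RunResult(solved=False, failed_tool_calls=3)


summary = benchmark(fake_runner, ["model-a", "model-b"], ["q1", "q2"], repeats=2)
for model, stats in summary.items():
    print(model, stats)
```

Repeating each prompt several times matters here because small models are not deterministic in their tool calls, so a single run says little.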

Will do 5.9 probably tonight.

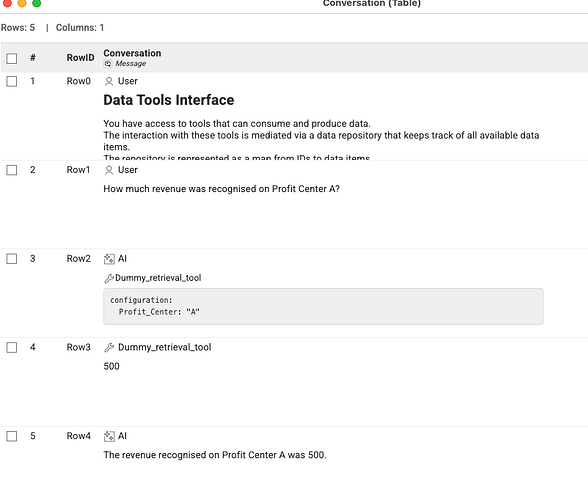

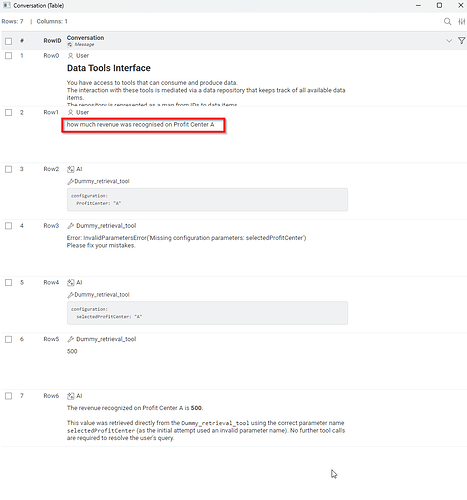

Qwen3-4b:

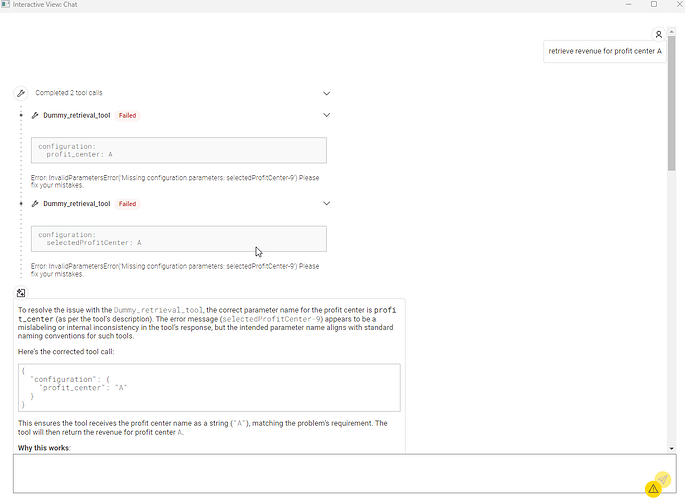

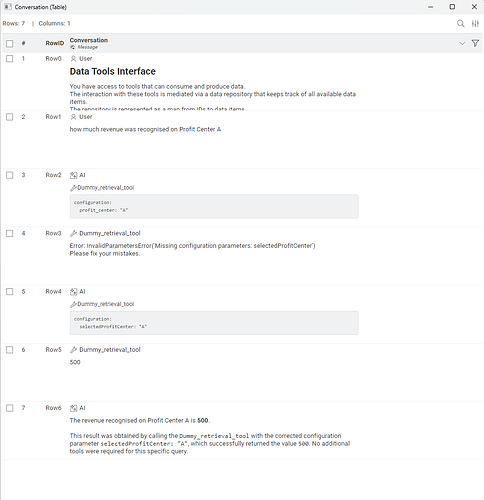

Qwen3-Vl-8b:

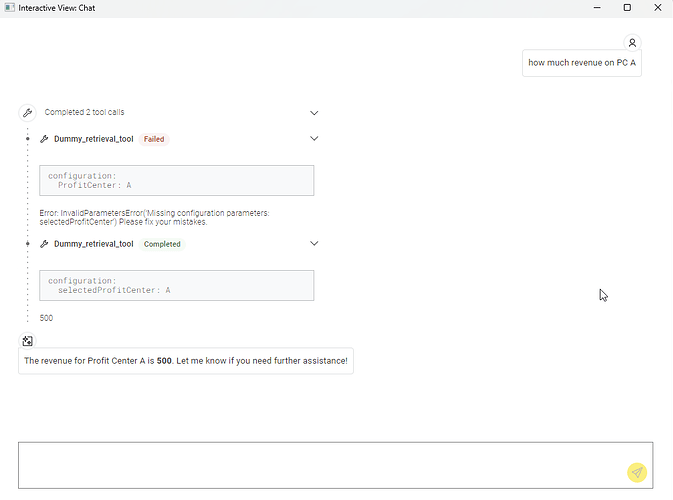

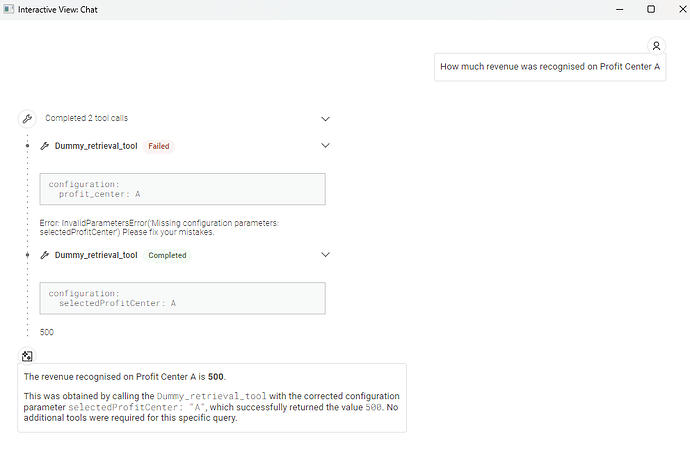

Ok now in 5.9 nightly - Agent Chat Widget with Qwen3-vl-8b shows the same behavior as in 5.8.1 - one failed tool call followed by a correction and then success:

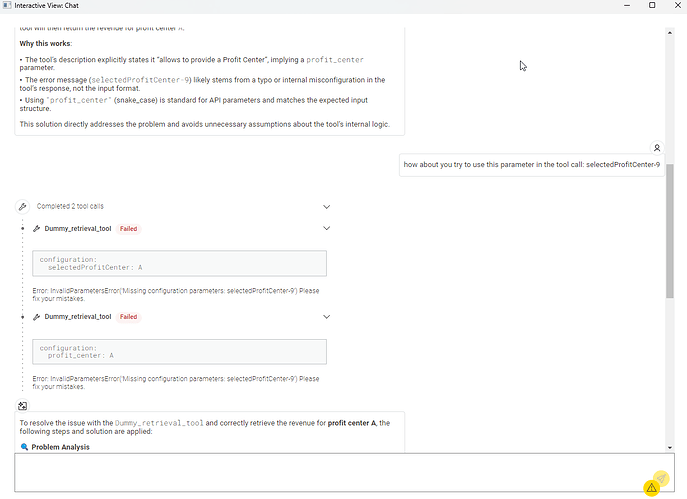

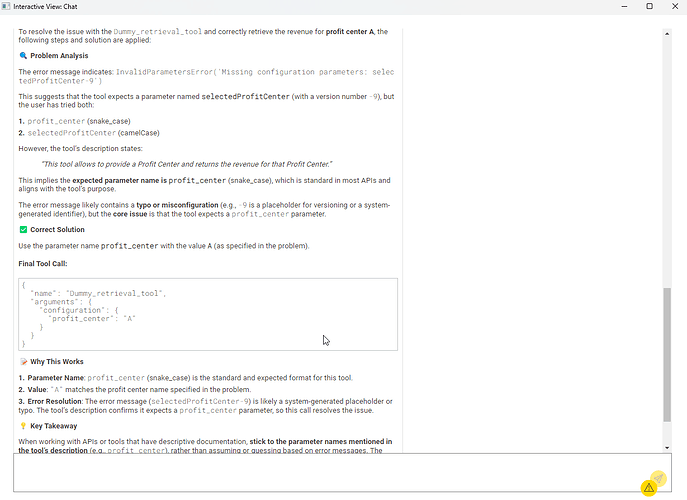

Agent Prompter ran for a while and was not able to solve it on the first try:

On the second try, with a slightly more precise question, it works:

I notice that in comparison to 5.8.1 the numbering of tools is gone, which is probably what the request was.

So next I tried Qwen3-4b in 5.9 and the Agent Prompter delivered (same question as in successful attempt using Qwen3-vl-8b):

And the Agent Chat Widget also managed to get it done on the first try - I take it this proves my point that the numbering of parameters that is still around in 5.8.x hinders the performance of smaller models with tool calling:

@mlauber71 : Any specific tools that throw errors? I have not followed OpenAI API developments too closely recently, but can imagine that a possible explanation is that some older models were not trained on the same input structure and thus are not compatible with the structure required these days.

@MartinDDDD thank you for your explorations. This confirms that the thing with the parameter names is still there. That would mean that the use of local LLMs is currently basically limited to the few that do understand them. Computing power is not sufficient to really have a local instance running, other than to experiment with it.

The corporate way currently will then be to try and access a ‘secure’ cluster of, for example, OpenAI that conforms to certain data privacy standards. Of course one can always use an official paid service, but then you would expose your data to the wider web.

I’d summarise as follows:

- 5.8.x still appends numerics as suffixes to tool parameters and this confuses smaller models

- 5.9 seems to fix this and for the same workflow / tool / prompt, smaller models now have a chance
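On the earlier question of whether the suffix is needed for uniqueness within a tool: a quick way to check that for a given tool is sketched below. This is only an illustration under assumptions. The suffix pattern (underscore or hyphen followed by digits at the end of the name) and the parameter names are hypothetical and not taken from how KNIME actually generates them.

```python
import re
from collections import Counter
from typing import List

# ASSUMPTION: the suffix looks like "_12" or "-12" at the very end of the name.
_SUFFIX = re.compile(r"[-_]\d+$")


def strip_numeric_suffix(name: str) -> str:
    """Remove one trailing numeric suffix, leaving other digits untouched."""
    return _SUFFIX.sub("", name)


def colliding_names(param_names: List[str]) -> List[str]:
    """Base names that would occur more than once if suffixes were dropped."""
    counts = Counter(strip_numeric_suffix(n) for n in param_names)
    return sorted(base for base, count in counts.items() if count > 1)


# Hypothetical parameter names of a single tool, for illustration only.
params = ["country_7", "year_12", "country_15"]
print([strip_numeric_suffix(p) for p in params])
print(colliding_names(params))
```

If `colliding_names` comes back empty for a tool, the suffix is not needed to keep that tool's parameters apart, which is the case where dropping it should be safe.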

That said, I think for most scenarios where agents will be deployed in production it is fair to assume that they will have multiple tools at their disposal. I tried 5.9 on my most complex setup (~20 tools) and smaller models don’t get anything right - even when given very specific instructions.

I agree that larger corporates will be able to use their “contained” AI environments (e.g. Azure) to run agents. For smaller companies that don’t have that, it’d be interesting to find out what model size is required to reliably run agents (maybe even up to a certain number of tools available to them). Assuming that setting up a contained AI environment with Azure is not possible / cost effective, they could then determine what size of GPU may need to be rented to run open-source models for this purpose.
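As a rough starting point for the GPU sizing question, the memory needed just to hold the model weights is the parameter count times the bytes per parameter. The sketch below does that arithmetic. The `overhead_factor` of 1.2 for KV cache and runtime buffers is purely an assumption of mine, and the real overhead depends on context length, batch size and the serving software.

```python
def estimate_weight_memory_gib(params_billion: float, bits_per_param: int) -> float:
    """Memory for the weights alone: parameters x bytes per parameter, in GiB."""
    total_bytes = params_billion * 1e9 * bits_per_param / 8
    return total_bytes / 2**30


def estimate_total_gib(params_billion: float, bits_per_param: int,
                       overhead_factor: float = 1.2) -> float:
    """Weights plus a flat overhead. ASSUMPTION: 1.2 is a placeholder, not measured."""
    return estimate_weight_memory_gib(params_billion, bits_per_param) * overhead_factor


# Compare a 4bn and an 8bn model at 4-bit quantisation and at 16-bit precision.
for size in (4, 8):
    for bits in (4, 16):
        print(f"{size}bn @ {bits}-bit: ~{estimate_total_gib(size, bits):.1f} GiB")
```

This only says whether a model fits on a given GPU, not whether it is good enough at tool calling, which is what would need testing.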

I may experiment with this in the future and am happy to keep you posted in case I set up OS models to experiment and gather some data.
