Hi all,

We’re migrating to KNIME Business Hub and are having problems reading/writing big files.

We have large CSV files (> 2 GB) that we need to read and write.

On KNIME Server, the File Reader / CSV Writer nodes worked well, but on the Hub we got a timeout error.

I tried a custom path so that I could increase the timeout in the node configuration, but then I got an HTTP 500 error instead.

Any idea how to make this work on the Hub? Do we need to change some configuration?

Thanks in advance,

Thanh Thanh

@tttpham have you considered doing it in chunks? KNIME should be able to handle CSV (or, better, Parquet) files in chunks.

Also: what is the error message? Can you check if the file system on the new machine does support large files?
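A minimal sketch of the chunking idea in plain Python (standard library only), for example outside KNIME or in a scripting node: rows are streamed into numbered part files of at most `rows_per_part` rows, each repeating the header, so no single write or upload has to carry the whole > 2 GB file. The function name, the `part-00000.csv` naming scheme and the chunk size are hypothetical choices for illustration, not KNIME settings.

```python
import csv
import os
from typing import Iterable, List, Sequence


def write_csv_in_parts(
    header: Sequence[str],
    rows: Iterable[Sequence[object]],
    out_dir: str,
    rows_per_part: int,
) -> List[str]:
    """Write rows to out_dir/part-00000.csv, part-00001.csv, ... (hypothetical naming).

    Each part holds at most rows_per_part data rows and repeats the header,
    so every part is a valid CSV file on its own. Returns the written paths.
    """
    if rows_per_part < 1:
        raise ValueError("rows_per_part must be >= 1")
    os.makedirs(out_dir, exist_ok=True)
    paths: List[str] = []
    handle = None
    writer = None
    written_in_part = 0
    try:
        for row in rows:
            # Open a new part file when none is open yet or the current one is full.
            if writer is None or written_in_part == rows_per_part:
                if handle is not None:
                    handle.close()
                path = os.path.join(out_dir, f"part-{len(paths):05d}.csv")
                handle = open(path, "w", newline="", encoding="utf-8")
                writer = csv.writer(handle)
                writer.writerow(header)
                paths.append(path)
                written_in_part = 0
            writer.writerow(row)
            written_in_part += 1
    finally:
        if handle is not None:
            handle.close()
    return paths
```

Because `rows` can be any iterable (e.g. a generator reading the source), memory use stays bounded by one row at a time; five rows with `rows_per_part=2` end up in three part files.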

Hi @mlauber71,

Thanks for the reply.

- In fact, we can read/write the big files in chunks in some cases, but in some particular cases we need to provide these big files to our users. We cannot tell them to get the small files and do the concatenation themselves…

- For the CSV Writer with a relative path, we got the following error:

2024-04-02 07:55:53,660 : ERROR : KNIME-Worker-174-CSV Writer 5:714 : [e897591a-701c-4714-9738-5f8073c4b4a0:01|f5f77d2b-42ed-4179-9b83-de5f296bdc07] : Node : 5:714 : Execute failed: Read timed out

java.net.SocketTimeoutException: Read timed out

at java.base/sun.nio.ch.NioSocketImpl.timedRead(Unknown Source)

at java.base/sun.nio.ch.NioSocketImpl.implRead(Unknown Source)

at java.base/sun.nio.ch.NioSocketImpl.read(Unknown Source)

at java.base/sun.nio.ch.NioSocketImpl$1.read(Unknown Source)

at java.base/java.net.Socket$SocketInputStream.read(Unknown Source)

at java.base/java.io.BufferedInputStream.fill(Unknown Source)

at java.base/java.io.BufferedInputStream.read1(Unknown Source)

at java.base/java.io.BufferedInputStream.read(Unknown Source)

at java.base/sun.net.www.http.HttpClient.parseHTTPHeader(Unknown Source)

at java.base/sun.net.www.http.HttpClient.parseHTTP(Unknown Source)

at java.base/sun.net.www.protocol.http.HttpURLConnection.getInputStream0(Unknown Source)

at java.base/sun.net.www.protocol.http.HttpURLConnection.getInputStream(Unknown Source)

at java.base/java.net.HttpURLConnection.getResponseCode(Unknown Source)

at com.knime.enterprise.client.rest.RestUploadStream.close(RestUploadStream.java:263)

at org.knime.filehandling.core.connections.FSOutputStream.close(FSOutputStream.java:104)

at org.knime.filehandling.core.connections.base.BaseFileSystemProvider$2.close(BaseFileSystemProvider.java:504)

at java.base/java.io.FilterOutputStream.close(Unknown Source)

at java.base/sun.nio.cs.StreamEncoder.implClose(Unknown Source)

at java.base/sun.nio.cs.StreamEncoder.close(Unknown Source)

at java.base/java.io.OutputStreamWriter.close(Unknown Source)

at org.knime.base.node.io.filehandling.csv.writer.CSVWriter2.close(CSVWriter2.java:329)

at org.knime.base.node.io.filehandling.csv.writer.CSVWriter2NodeModel.writeToFile(CSVWriter2NodeModel.java:189)

at org.knime.base.node.io.filehandling.csv.writer.CSVWriter2NodeModel.execute(CSVWriter2NodeModel.java:147)

at org.knime.base.node.io.filehandling.csv.writer.CSVWriter2NodeModel.execute(CSVWriter2NodeModel.java:1)

at org.knime.core.node.NodeModel.executeModel(NodeModel.java:588)

at org.knime.core.node.Node.invokeFullyNodeModelExecute(Node.java:1297)

at org.knime.core.node.Node.execute(Node.java:1059)

at org.knime.core.node.workflow.NativeNodeContainer.performExecuteNode(NativeNodeContainer.java:595)

at org.knime.core.node.exec.LocalNodeExecutionJob.mainExecute(LocalNodeExecutionJob.java:98)

at org.knime.core.node.workflow.NodeExecutionJob.internalRun(NodeExecutionJob.java:201)

at org.knime.core.node.workflow.NodeExecutionJob.run(NodeExecutionJob.java:117)

at org.knime.core.util.ThreadUtils$RunnableWithContextImpl.runWithContext(ThreadUtils.java:367)

at org.knime.core.util.ThreadUtils$RunnableWithContext.run(ThreadUtils.java:221)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Unknown Source)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at org.knime.core.util.ThreadPool$MyFuture.run(ThreadPool.java:123)

at org.knime.core.util.ThreadPool$Worker.run(ThreadPool.java:246)

For the CSV Writer with a custom path and the timeout set to 10 000, we got:

2024-04-02 07:46:25,703 : ERROR : KNIME-Worker-163-CSV Writer 5:33 : [a884ddaf-8075-43b3-af20-b8d4e123d37e:01|f5f77d2b-42ed-4179-9b83-de5f296bdc07] : Node : 5:33 : Execute failed: Server returned error 500: Internal Server Error

java.io.IOException: Server returned error 500: Internal Server Error

at org.knime.core.util.HttpURLConnectionDecorator$OutputStreamDecorator.close(HttpURLConnectionDecorator.java:97)

at org.knime.core.util.WrappedURLOutputStream.close(WrappedURLOutputStream.java:125)

at org.knime.filehandling.core.connections.FSOutputStream.close(FSOutputStream.java:104)

at org.knime.filehandling.core.connections.base.BaseFileSystemProvider$2.close(BaseFileSystemProvider.java:504)

at java.base/java.io.FilterOutputStream.close(Unknown Source)

at java.base/sun.nio.cs.StreamEncoder.implClose(Unknown Source)

at java.base/sun.nio.cs.StreamEncoder.close(Unknown Source)

at java.base/java.io.OutputStreamWriter.close(Unknown Source)

at org.knime.base.node.io.filehandling.csv.writer.CSVWriter2.close(CSVWriter2.java:329)

at org.knime.base.node.io.filehandling.csv.writer.CSVWriter2NodeModel.writeToFile(CSVWriter2NodeModel.java:189)

at org.knime.base.node.io.filehandling.csv.writer.CSVWriter2NodeModel.execute(CSVWriter2NodeModel.java:147)

at org.knime.base.node.io.filehandling.csv.writer.CSVWriter2NodeModel.execute(CSVWriter2NodeModel.java:1)

at org.knime.core.node.NodeModel.executeModel(NodeModel.java:588)

at org.knime.core.node.Node.invokeFullyNodeModelExecute(Node.java:1297)

at org.knime.core.node.Node.execute(Node.java:1059)

at org.knime.core.node.workflow.NativeNodeContainer.performExecuteNode(NativeNodeContainer.java:595)

at org.knime.core.node.exec.LocalNodeExecutionJob.mainExecute(LocalNodeExecutionJob.java:98)

at org.knime.core.node.workflow.NodeExecutionJob.internalRun(NodeExecutionJob.java:201)

at org.knime.core.node.workflow.NodeExecutionJob.run(NodeExecutionJob.java:117)

at org.knime.core.util.ThreadUtils$RunnableWithContextImpl.runWithContext(ThreadUtils.java:367)

at org.knime.core.util.ThreadUtils$RunnableWithContext.run(ThreadUtils.java:221)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Unknown Source)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at org.knime.core.util.ThreadPool$MyFuture.run(ThreadPool.java:123)

at org.knime.core.util.ThreadPool$Worker.run(ThreadPool.java:246)

- And what do you mean by

> Can you check if the file system on the new machine does support large files.

How can I check that, please?

Thanks in advance,

Thanh Thanh

@tttpham maybe you can check out how to handle large CSV or Parquet files in chunks. Later they can become one big file again (or at least work as one).

Check the parts “Collect results step by step” / “Several Parquet files into one”:

Some file systems limit how large a single file can be. The question is what kind of system your data will be stored on.
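One way to check this on a POSIX system such as Ubuntu: the OS can report how many bits a file system uses to represent file sizes via `pathconf(_PC_FILESIZEBITS)`, which Python exposes as `os.pathconf`. The sketch below is hedged: the function names are hypothetical, and it only inspects the file system that contains the given path on the machine where it runs, so it says nothing about how the Hub itself handles uploads.

```python
import os
from typing import Optional


def max_file_size_bits(path: str) -> Optional[int]:
    """Ask the OS how many bits the file system at `path` uses for file sizes.

    Wraps POSIX pathconf(_PC_FILESIZEBITS). Returns None when the platform
    (e.g. Windows) or the file system does not report a value.
    """
    if not hasattr(os, "pathconf") or "PC_FILESIZEBITS" not in os.pathconf_names:
        return None
    try:
        bits = os.pathconf(path, "PC_FILESIZEBITS")
    except OSError:
        return None
    return bits if bits > 0 else None


def max_file_size_bytes(bits: int) -> int:
    """Largest file size representable as a signed integer with `bits` bits."""
    return 2 ** (bits - 1) - 1
```

Pass a directory where the big files will live. A result of 32 would mean a limit of `max_file_size_bytes(32)` bytes, roughly 2 GiB, which matches the size at which the problems start; 64 means files far beyond 2 GB are representable.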

@mlauber71, thanks for your explanation.

As for the system: KNIME Hub is installed on Ubuntu VMs, and there is no restriction on file size on the VM side.

I did not know about the Parquet Reader/Writer. Quite interesting to learn about them! Thanks!

However, when I tried the Parquet Writer, I got the “same” read timeout error:

2024-04-02 10:56:51,396 : DEBUG : KNIME-Worker-677-Parquet Writer 9:714 : [|07908f00-0fcd-4d02-9fb2-0cfaf258d25e] : NodeContainer : 9:714 : Parquet Writer 9:714 has new state: PREEXECUTE

2024-04-02 10:56:51,396 : DEBUG : KNIME-Worker-677-Parquet Writer 9:714 : [|07908f00-0fcd-4d02-9fb2-0cfaf258d25e] : WorkflowDataRepository : 9:714 : Adding handler c8f379a6-071d-443b-97d8-4594814801c9 (Parquet Writer 9:714: <no directory>) - 9 in total

2024-04-02 10:56:51,396 : DEBUG : KNIME-Worker-677-Parquet Writer 9:714 : [|07908f00-0fcd-4d02-9fb2-0cfaf258d25e] : WorkflowManager : 9:714 : Parquet Writer 9:714 doBeforeExecution

2024-04-02 10:56:51,396 : DEBUG : KNIME-Worker-677-Parquet Writer 9:714 : [|07908f00-0fcd-4d02-9fb2-0cfaf258d25e] : NodeContainer : 9:714 : Parquet Writer 9:714 has new state: EXECUTING

2024-04-02 10:56:51,396 : DEBUG : KNIME-Worker-677-Parquet Writer 9:714 : [|07908f00-0fcd-4d02-9fb2-0cfaf258d25e] : LocalNodeExecutionJob : 9:714 : Parquet Writer 9:714 Start execute

2024-04-02 10:58:34,318 : DEBUG : KNIME-Worker-677-Parquet Writer 9:714 : [|07908f00-0fcd-4d02-9fb2-0cfaf258d25e] : Node : 9:714 : reset

2024-04-02 10:58:34,318 : ERROR : KNIME-Worker-677-Parquet Writer 9:714 : [|07908f00-0fcd-4d02-9fb2-0cfaf258d25e] : Node : 9:714 : Execute failed: Read timed out

java.net.SocketTimeoutException: Read timed out

at java.base/sun.nio.ch.NioSocketImpl.timedRead(Unknown Source)

at java.base/sun.nio.ch.NioSocketImpl.implRead(Unknown Source)

at java.base/sun.nio.ch.NioSocketImpl.read(Unknown Source)

at java.base/sun.nio.ch.NioSocketImpl$1.read(Unknown Source)

at java.base/java.net.Socket$SocketInputStream.read(Unknown Source)

at java.base/java.io.BufferedInputStream.fill(Unknown Source)

at java.base/java.io.BufferedInputStream.read1(Unknown Source)

at java.base/java.io.BufferedInputStream.read(Unknown Source)

at java.base/sun.net.www.http.HttpClient.parseHTTPHeader(Unknown Source)

at java.base/sun.net.www.http.HttpClient.parseHTTP(Unknown Source)

at java.base/sun.net.www.protocol.http.HttpURLConnection.getInputStream0(Unknown Source)

at java.base/sun.net.www.protocol.http.HttpURLConnection.getInputStream(Unknown Source)

at java.base/java.net.HttpURLConnection.getResponseCode(Unknown Source)

at com.knime.enterprise.client.rest.RestUploadStream.close(RestUploadStream.java:263)

at org.knime.filehandling.core.connections.FSOutputStream.close(FSOutputStream.java:104)

at org.knime.filehandling.core.connections.base.BaseFileSystemProvider$2.close(BaseFileSystemProvider.java:504)

at java.base/java.io.FilterOutputStream.close(Unknown Source)

at org.apache.hadoop.fs.FSDataOutputStream$PositionCache.close(FSDataOutputStream.java:72)

at org.apache.hadoop.fs.FSDataOutputStream.close(FSDataOutputStream.java:106)

at org.apache.parquet.hadoop.util.HadoopPositionOutputStream.close(HadoopPositionOutputStream.java:64)

at org.apache.parquet.hadoop.ParquetFileWriter.end(ParquetFileWriter.java:1106)

at org.apache.parquet.hadoop.InternalParquetRecordWriter.close(InternalParquetRecordWriter.java:132)

at org.apache.parquet.hadoop.ParquetWriter.close(ParquetWriter.java:319)

at org.knime.bigdata.fileformats.parquet.ParquetFileFormatWriter.close(ParquetFileFormatWriter.java:141)

at org.knime.bigdata.fileformats.node.writer2.FileFormatWriter2NodeModel.writeToFile(FileFormatWriter2NodeModel.java:267)

at org.knime.bigdata.fileformats.node.writer2.FileFormatWriter2NodeModel.write(FileFormatWriter2NodeModel.java:214)

at org.knime.bigdata.fileformats.node.writer2.FileFormatWriter2NodeModel.execute(FileFormatWriter2NodeModel.java:185)

at org.knime.bigdata.fileformats.node.writer2.FileFormatWriter2NodeModel.execute(FileFormatWriter2NodeModel.java:1)

at org.knime.core.node.NodeModel.executeModel(NodeModel.java:588)

at org.knime.core.node.Node.invokeFullyNodeModelExecute(Node.java:1297)

at org.knime.core.node.Node.execute(Node.java:1059)

at org.knime.core.node.workflow.NativeNodeContainer.performExecuteNode(NativeNodeContainer.java:595)

at org.knime.core.node.exec.LocalNodeExecutionJob.mainExecute(LocalNodeExecutionJob.java:98)

at org.knime.core.node.workflow.NodeExecutionJob.internalRun(NodeExecutionJob.java:201)

at org.knime.core.node.workflow.NodeExecutionJob.run(NodeExecutionJob.java:117)

at org.knime.core.util.ThreadUtils$RunnableWithContextImpl.runWithContext(ThreadUtils.java:367)

at org.knime.core.util.ThreadUtils$RunnableWithContext.run(ThreadUtils.java:221)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Unknown Source)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at org.knime.core.util.ThreadPool$MyFuture.run(ThreadPool.java:123)

at org.knime.core.util.ThreadPool$Worker.run(ThreadPool.java:246)
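Both `Read timed out` traces above fail inside `RestUploadStream.close()` → `HttpURLConnection.getResponseCode()`, i.e. after the file content has been sent, while the client is waiting for the server's HTTP status line. The self-contained Python sketch below reproduces that pattern locally with a stub server that deliberately answers late. It is an illustration under assumptions, not a model of KNIME Hub internals; `upload`, the `/upload` path and the delay values are hypothetical.

```python
import http.client
import socket
import threading
import time
from http.server import BaseHTTPRequestHandler, HTTPServer


def make_slow_handler(delay_s: float):
    class SlowUploadHandler(BaseHTTPRequestHandler):
        def do_PUT(self):
            # Consume the uploaded body first, like an upload endpoint would.
            length = int(self.headers.get("Content-Length", 0))
            self.rfile.read(length)
            # Simulate server-side work (e.g. storing a large file) before replying.
            time.sleep(delay_s)
            try:
                self.send_response(200)
                self.send_header("Content-Length", "0")
                self.end_headers()
            except OSError:
                pass  # the client may already have given up and closed the socket

        def log_message(self, format, *args):
            pass  # keep the demo quiet

    return SlowUploadHandler


def upload(payload: bytes, read_timeout_s: float, server_delay_s: float) -> str:
    server = HTTPServer(("127.0.0.1", 0), make_slow_handler(server_delay_s))
    thread = threading.Thread(target=server.handle_request)  # serve one request
    thread.start()
    host, port = server.server_address
    conn = http.client.HTTPConnection(host, port, timeout=read_timeout_s)
    try:
        conn.request("PUT", "/upload", body=payload)
        # Like getResponseCode(): block until the status line arrives or the timeout hits.
        return f"status {conn.getresponse().status}"
    except socket.timeout:
        return "read timed out"
    finally:
        conn.close()
        thread.join()
        server.server_close()
```

With `upload(b"x" * 1024, read_timeout_s=0.2, server_delay_s=1.0)` the client gives up first and reports a read timeout; with a read timeout longer than the server delay it receives the status. A larger client-side timeout only helps if the server eventually answers; the 500 seen with the custom path is an error reported by the server itself.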

Any idea how to overcome this timeout? Does any configuration come to mind, please?

Many thanks!!!

Thanh Thanh

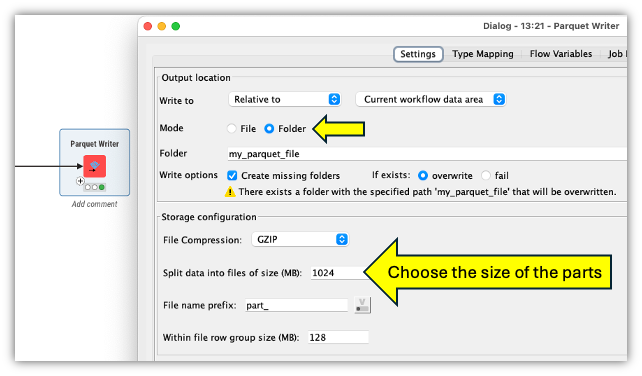

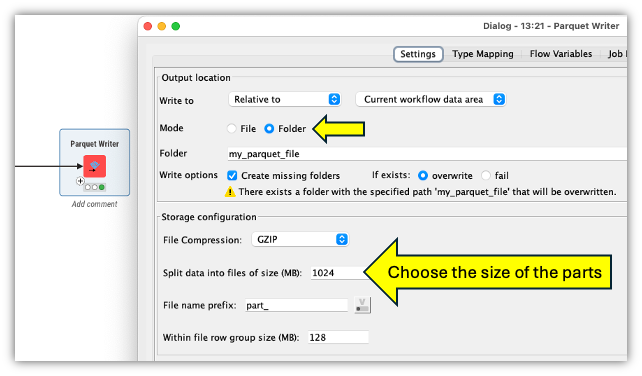

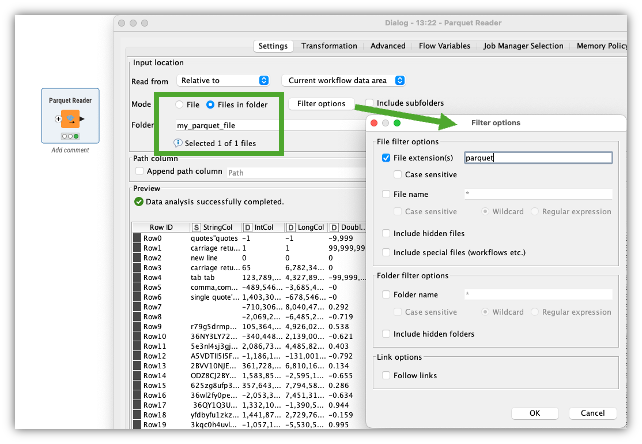

@tttpham the idea is to write the large file out as several Parquet files that you can then upload individually to the new destination.

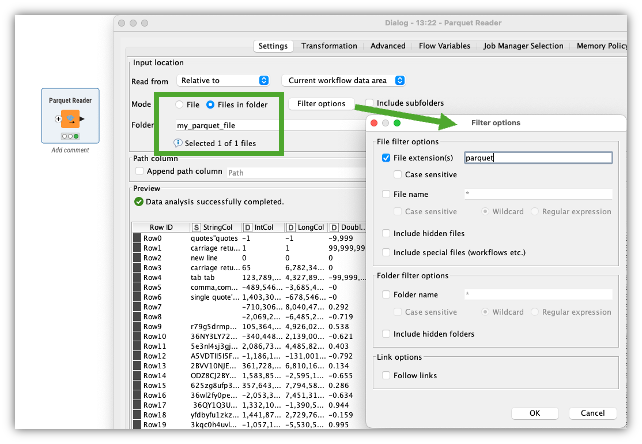

There, a Parquet Reader will bring the data back into KNIME and handle them as a single file:

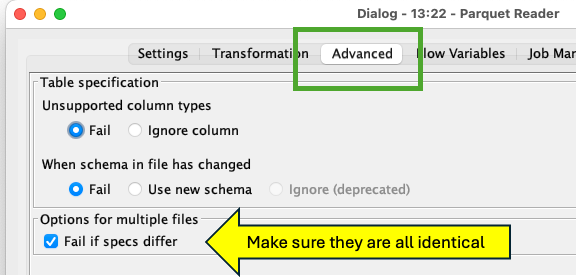

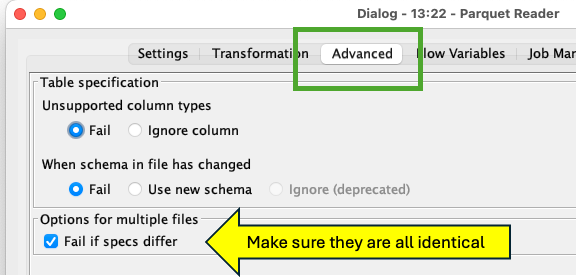
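For plain CSV parts, the same "read many part files as one table" idea can be sketched with the Python standard library. It also covers the case raised earlier in the thread where users need one single big file: the parts are streamed into one output file without loading everything into memory. The `part-*.csv` naming and the function names are hypothetical, and the sketch assumes every part has the same header.

```python
import csv
import glob
import os
from typing import Iterator, List


def iter_parts_as_one(folder: str, pattern: str = "part-*.csv") -> Iterator[List[str]]:
    """Yield the header once, then the data rows of every part file in name order."""
    header = None
    for path in sorted(glob.glob(os.path.join(folder, pattern))):
        with open(path, newline="", encoding="utf-8") as f:
            reader = csv.reader(f)
            part_header = next(reader, None)
            if part_header is None:
                continue  # skip empty part files
            if header is None:
                header = part_header
                yield header
            # Later headers are consumed by next() above and not repeated.
            yield from reader


def merge_parts(folder: str, out_path: str, pattern: str = "part-*.csv") -> int:
    """Stream all parts into one CSV file; return the number of data rows written."""
    count = -1  # the first yielded row is the header
    with open(out_path, "w", newline="", encoding="utf-8") as out:
        writer = csv.writer(out)
        for row in iter_parts_as_one(folder, pattern):
            writer.writerow(row)
            count += 1
    return max(count, 0)
```

The output path should not match the part pattern (e.g. `merged.csv`), otherwise a rerun would pick the merged file up as another part.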

You can also make sure they have the same structure:
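For CSV parts, a quick structural check is to compare each file's header row with the first one. A hedged sketch with a hypothetical function name; it checks column names and order only, whereas a Parquet schema also carries column types.

```python
import csv
from typing import Dict, List, Sequence


def find_header_mismatches(part_paths: Sequence[str]) -> Dict[str, List[str]]:
    """Return {path: header} for every part whose header differs from the first part's.

    An empty result means all parts share the same column names in the same order.
    """
    mismatches: Dict[str, List[str]] = {}
    reference = None
    for path in part_paths:
        with open(path, newline="", encoding="utf-8") as f:
            header = next(csv.reader(f), [])  # empty file -> empty header
        if reference is None:
            reference = header
        elif header != reference:
            mismatches[path] = header
    return mismatches
```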