Hey there,

An error occurs while running the BERT Predictor, every time at about 72 %. Before that, the BERT Classification Learner runs without problems (it takes about 36 hrs), even though the system is macOS on an M2 (normally TensorFlow 2 does not work on macOS, but we only copied an example workflow and it functions). The KNIME version is 4.7.1.

The log file says:

2023-03-29 09:29:31,211 : ERROR : KNIME-Worker-66-BERT Predictor 6:11 : : Node : BERT Predictor : 6:11 : Execute failed: An exception occured while running the Python kernel. See log for details.

org.knime.python2.kernel.PythonIOException: An exception occured while running the Python kernel. See log for details.

at org.knime.python3.scripting.Python3KernelBackend.getDataTable(Python3KernelBackend.java:468)

at org.knime.python2.kernel.PythonKernel.getDataTable(PythonKernel.java:326)

at se.redfield.bert.core.BertCommands.getDataTable(BertCommands.java:121)

at se.redfield.bert.core.BertCommands.getDataTable(BertCommands.java:115)

at se.redfield.bert.nodes.predictor.BertPredictorNodeModel.runPredict(BertPredictorNodeModel.java:94)

at se.redfield.bert.nodes.predictor.BertPredictorNodeModel.execute(BertPredictorNodeModel.java:76)

at org.knime.core.node.NodeModel.executeModel(NodeModel.java:549)

at org.knime.core.node.Node.invokeFullyNodeModelExecute(Node.java:1267)

at org.knime.core.node.Node.execute(Node.java:1041)

at org.knime.core.node.workflow.NativeNodeContainer.performExecuteNode(NativeNodeContainer.java:595)

at org.knime.core.node.exec.LocalNodeExecutionJob.mainExecute(LocalNodeExecutionJob.java:98)

at org.knime.core.node.workflow.NodeExecutionJob.internalRun(NodeExecutionJob.java:201)

at org.knime.core.node.workflow.NodeExecutionJob.run(NodeExecutionJob.java:117)

at org.knime.core.util.ThreadUtils$RunnableWithContextImpl.runWithContext(ThreadUtils.java:367)

at org.knime.core.util.ThreadUtils$RunnableWithContext.run(ThreadUtils.java:221)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Unknown Source)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at org.knime.core.util.ThreadPool$MyFuture.run(ThreadPool.java:123)

at org.knime.core.util.ThreadPool$Worker.run(ThreadPool.java:246)

Caused by: java.io.IOException: Row key checking: Error when processing batch

at org.knime.python3.arrow.SinkManager.checkRowKeys(SinkManager.java:136)

at org.knime.python3.arrow.SinkManager.convertToTable(SinkManager.java:121)

at org.knime.python3.scripting.Python3KernelBackend.lambda$3(Python3KernelBackend.java:465)

at org.knime.core.util.ThreadUtils$CallableWithContextImpl.callWithContext(ThreadUtils.java:383)

at org.knime.core.util.ThreadUtils$CallableWithContext.call(ThreadUtils.java:269)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(Unknown Source)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(Unknown Source)

at java.base/java.lang.Thread.run(Unknown Source)

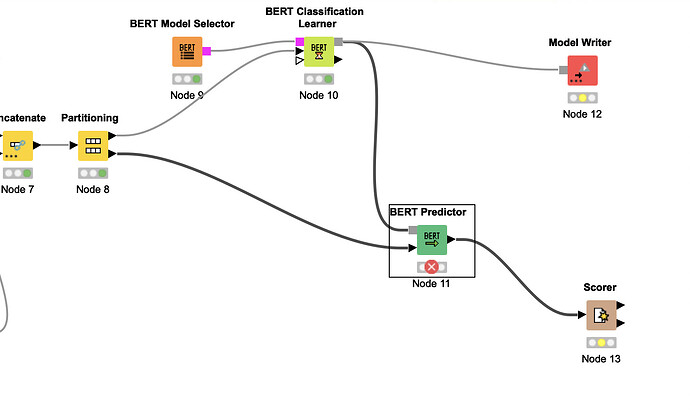

Here is also a screenshot of part of the workflow.

The sample data is from the "Misinformation & Fake News text dataset 79k" on Kaggle; only DataSet_Misinfo_TRUE.csv and EXTRA_RussianPropagandaSubset.csv are used.

Could anybody help us get the BERT Predictor to run, please? We would be very thankful for any help.