OK, sorry about that. Based on the code, I thought it wouldn't be a problem for you to revert the changes I made so that I could execute it…

Here's your original code with the `if __name__ == "__main__":` guard removed, so `main()` is now called directly at the end of the script…

If that doesn't do it, then I'm afraid I'm out of ideas.
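For context on why the guard matters: a call wrapped in `if __name__ == "__main__":` only runs when the module's `__name__` is exactly `"__main__"`. The sketch below simulates a host that runs the script under some other module name, which is an assumption on my part (I haven't checked what name the KNIME node actually uses). It uses `exec` with a made-up name `knime_script`; `run_script`, `GUARDED` and `UNGUARDED` are hypothetical helpers for illustration only. If the guard is skipped, `main()` never runs and nothing ever gets assigned to the output table.

```python
# Two versions of a tiny script: one with the __main__ guard, one calling main() directly
GUARDED = '''
def main():
    ran.append(True)

if __name__ == "__main__":
    main()
'''

UNGUARDED = '''
def main():
    ran.append(True)

main()
'''


def run_script(source, module_name):
    """Execute `source` as if it were a module named `module_name`.

    Returns True if the script's main() was actually called."""
    ran = []
    # An explicit globals dict lets us choose the __name__ the script sees
    exec(source, {"__name__": module_name, "ran": ran})
    return bool(ran)


# "knime_script" is a hypothetical stand-in for however the host names the script
print(run_script(GUARDED, "knime_script"))    # False: the guard skips main()
print(run_script(UNGUARDED, "knime_script"))  # True: main() always runs
print(run_script(GUARDED, "__main__"))        # True: run as a normal script
```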

import knime.scripting.io as knio

import pandas as pd

import requests

# Set your API key here

API_KEY = 'KEY'

VISION_API_URL = f'https://vision.googleapis.com/v1/images:annotate?key={API_KEY}'

# Analyze image with Google Vision API

def analyze_image(image_url):

request_payload = {

"requests": [

{

"image": {

"source": {"imageUri": image_url}

},

"features": [

{"type": "LABEL_DETECTION"},

{"type": "OBJECT_LOCALIZATION"},

{"type": "FACE_DETECTION"},

{"type": "LANDMARK_DETECTION"},

{"type": "SAFE_SEARCH_DETECTION"},

{"type": "IMAGE_PROPERTIES"}

]

}

]

}

response = requests.post(VISION_API_URL, json=request_payload)

if response.status_code == 200:

return response.json()

else:

print(f"Error: {response.status_code}, {response.text}")

return None

def process_images(image_urls):

results = []

for url in image_urls:

print(f"Processing {url}...")

response = analyze_image(url)

if response and 'responses' in response:

analysis = response['responses'][0]

result = {

'ImageURL': url,

'Labels': ", ".join(label['description'] for label in analysis.get('labelAnnotations', [])),

'Objects': ", ".join(obj['name'] for obj in analysis.get('localizedObjectAnnotations', [])),

'Faces': len(analysis.get('faceAnnotations', [])),

'Landmarks': ", ".join(landmark['description'] for landmark in analysis.get('landmarkAnnotations', [])),

'SafeSearch_Adult': analysis.get('safeSearchAnnotation', {}).get('adult', 'UNKNOWN'),

'SafeSearch_Spoof': analysis.get('safeSearchAnnotation', {}).get('spoof', 'UNKNOWN'),

'SafeSearch_Medical': analysis.get('safeSearchAnnotation', {}).get('medical', 'UNKNOWN'),

'SafeSearch_Violence': analysis.get('safeSearchAnnotation', {}).get('violence', 'UNKNOWN'),

'SafeSearch_Racy': analysis.get('safeSearchAnnotation', {}).get('racy', 'UNKNOWN'),

'ImageProperties': ", ".join(

f"RGB({color['color']['red']},{color['color']['green']},{color['color']['blue']})"

for color in analysis.get('imagePropertiesAnnotation', {}).get('dominantColors', {}).get('colors', [])

)

}

results.append(result)

else:

print(f"No response for {url}")

results.append({

'ImageURL': url,

'Labels': '',

'Objects': '',

'Faces': 0,

'Landmarks': '',

'SafeSearch_Adult': 'ERROR',

'SafeSearch_Spoof': 'ERROR',

'SafeSearch_Medical': 'ERROR',

'SafeSearch_Violence': 'ERROR',

'SafeSearch_Racy': 'ERROR',

'ImageProperties': ''

})

return results

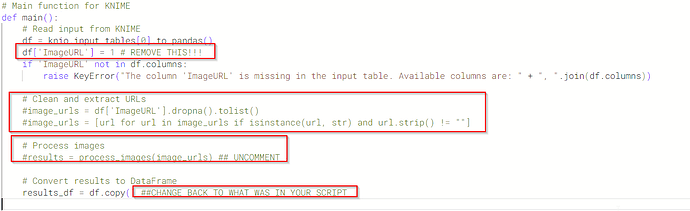

# Main function for KNIME

def main():

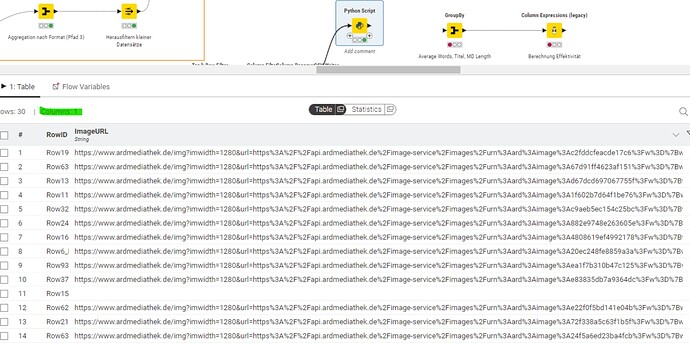

# Read input from KNIME

df = knio.input_tables[0].to_pandas()

if 'ImageURL' not in df.columns:

raise KeyError("The column 'ImageURL' is missing in the input table. Available columns are: " + ", ".join(df.columns))

# Clean and extract URLs

image_urls = df['ImageURL'].dropna().tolist()

image_urls = [url for url in image_urls if isinstance(url, str) and url.strip() != ""]

# Process images

results = process_images(image_urls)

# Convert results to DataFrame

results_df = pd.DataFrame(results)

# Ensure the output table is populated even if no results are available

if results_df.empty:

results_df = pd.DataFrame(columns=[

'ImageURL', 'Labels', 'Objects', 'Faces', 'Landmarks',

'SafeSearch_Adult', 'SafeSearch_Spoof', 'SafeSearch_Medical',

'SafeSearch_Violence', 'SafeSearch_Racy', 'ImageProperties'

])

# Output results to KNIME

knio.output_tables[0] = knio.Table.from_pandas(results_df)

# Execute the main function

main()
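On the `ImageProperties` column: Google's REST APIs serialise responses using the proto3 JSON mapping, which by default leaves out fields that hold their default value, so a dominant colour whose blue channel is 0 may come back without a `blue` key. Indexing `color['color']['blue']` directly can then raise a `KeyError`; reading each channel with `.get(..., 0)` avoids that. Here's a minimal standalone sketch of just that formatting step. `format_dominant_colors` is a hypothetical helper name, and `sample_analysis` is a hand-written payload shaped like one entry of `responses`, not real API output.

```python
def format_dominant_colors(analysis):
    """Turn one response's imagePropertiesAnnotation into 'RGB(r,g,b), ...'.

    Missing colour channels are treated as 0, because default-valued
    fields may be omitted from the JSON."""
    colors = (
        analysis.get('imagePropertiesAnnotation', {})
        .get('dominantColors', {})
        .get('colors', [])
    )
    parts = []
    for entry in colors:
        rgb = entry.get('color', {})
        parts.append(f"RGB({rgb.get('red', 0)},{rgb.get('green', 0)},{rgb.get('blue', 0)})")
    return ", ".join(parts)


# Hand-written sample (not real API output): the second colour has no 'blue' key
sample_analysis = {
    'imagePropertiesAnnotation': {
        'dominantColors': {
            'colors': [
                {'color': {'red': 250, 'green': 240, 'blue': 230}, 'score': 0.5},
                {'color': {'red': 12, 'green': 34}, 'score': 0.2},
            ]
        }
    }
}

print(format_dominant_colors(sample_analysis))  # RGB(250,240,230), RGB(12,34,0)
print(repr(format_dominant_colors({})))          # '' (no image properties at all)
```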

KnimeUserError: Output table ‘0’ must be of type knime.api.Table or knime.api.BatchOutputTable, but got None. knio.output_tables[0] has not been populated.