Hello everyone,

I am using the Azure OpenAI Authenticator and Azure OpenAI Chat Model Connector nodes. While some of my deployed models can be reached successfully, I have problems reaching GPT4-1106-preview, GPT4-0125-preview and sometimes GPT4-32k. I am in contact with Microsoft support. They are asking which SDK version is used and how exactly the REST call is made. Who can help me with this?

Thank you in advance,

Armin

Hey @Armin_Alois,

I will get in touch with one of the SEs and ask them to take a look at the issue you are encountering.

Thanks,

TL

Hello @Armin_Alois,

which version of the AI Extension are you using?

The latest released version is 5.2.1 and it uses the Python libraries langchain 0.0.339 and openai 1.2.4 to communicate with Azure.
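For the Microsoft support question about how the REST call is made, the following Python sketch builds (but does not send) a chat completions request in the shape documented for the Azure OpenAI REST API: the deployment name goes into the URL path, the API version into the `api-version` query parameter, and the key into the `api-key` header. As far as I know, the openai 1.x `AzureOpenAI` client issues requests of this form, but this is an illustration under that assumption, not the code of the AI Extension; the function name and all values in the usage note are placeholders.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request


def build_azure_chat_request(endpoint: str, deployment: str, api_version: str,
                             api_key: str, messages: list[dict]) -> Request:
    """Build (without sending) an Azure OpenAI chat completions request."""
    # Azure addresses a model by its *deployment name* in the URL path,
    # and the API version is passed as a query parameter.
    url = (f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
           f"/chat/completions?{urlencode({'api-version': api_version})}")
    body = json.dumps({"messages": messages}).encode("utf-8")
    # The resource key is sent in the "api-key" header.
    return Request(url, data=body, method="POST",
                   headers={"api-key": api_key,
                            "Content-Type": "application/json"})
```

For example, `build_azure_chat_request("https://my-resource.openai.azure.com", "gpt-4-0125-preview", "2023-05-15", key, [{"role": "user", "content": "Hello"}])` returns a request that `urllib.request.urlopen` could send. A 401 response to such a request usually means the key does not belong to the resource behind that endpoint.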

Best regards,

Adrian

Hey @thor, hello @nemad,

thank you very much! I am using AI Extension 5.2.1 and KNIME 5.2.3. I have forwarded the information to the OpenAI support engineers.

Best, Armin

Hello @thor, hello @nemad,

the issue is solved for Ada and GPT4-1106-preview, which are both located at the same site.

However, it still persists for Embedding3small, Embedding3large and GPT4-0125-preview. FAISS vector store models can be created with the Embedding3 models without any error message, and GPT4-0125-preview can answer questions based on its own knowledge. However, questions relating to tool content (a FAISS vector store model converted by the Vector to Tool node) produce an error message in the Agent Prompter node (401: unauthorized access).

Do you know whether the Agent Prompter node can handle FAISS vector store models generated with Embedding3? In KNIME, do the embedding model used to create the FAISS vector store and the GPT model used for the analysis have to be located at the same OpenAI location (i.e. use the same key)?

Looking forward to your replies.

Armin

I am not sure I fully understand your setup.

Do the embeddings model and the GPT4 model require the same API key or different ones?

If they are different, is it possible that you use the same name for the credential flow variable in both cases?

Best regards

Adrian

Hi Adrian,

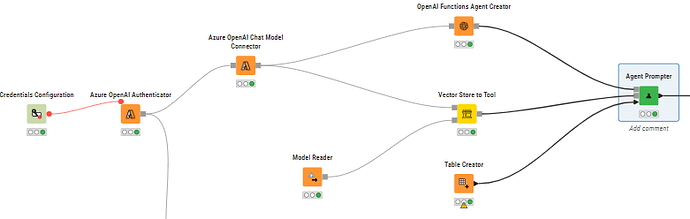

I am using two workflows. At the end of my first workflow, I create vector store models with the FAISS Vector Store Creator node and save them with the Model Writer. In the second workflow (see picture), I import the vector store models with the Model Reader, convert them with the Vector to Tool node and then analyse them with the Agent Prompter.

This setup works for Ada and GPT4-1106-preview, which are located at the same site. It now also works for Embedding-3-small/large and GPT4-0125-preview, which are likewise located at the same site. So the answer to my first question (whether the Agent Prompter can analyse FAISS vector store models generated with Embedding3) is yes.

I am still looking for an answer to my second question: does KNIME require the embedding model (Ada, Embedding3) and the analysing model (GPT4) to be located at the same OpenAI site, i.e. to share one common endpoint and key, even though they are used in separate workflows? I know this is not a requirement outside of KNIME when a Python script is used. However, when I use a FAISS vector store model generated by an embedding model located at a different OpenAI site than the analysing GPT4 model (Agent Prompter), I receive an output that is not based on the vector store model (and no error message).

Best, Armin

No, we do not require the models to be at the same site. However, we do require that the branch containing the Agent Prompter has all credentials needed by the chat model and by the vector store's embeddings model, because for security reasons we do not store any credentials inside the models.

So to make this work, you also have to provide the Agent Prompter with the credential flow variable holding the credentials for the embeddings model used in the vector store. Its name has to differ from the one you use for the chat model; otherwise one credential overwrites the other.
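As a rough analogy only (KNIME flow variables are not Python dictionaries, and every name below is hypothetical), this Python sketch shows why the two credential names must differ: a saved model that holds no key has to look its credential up by name at query time, and a name-keyed lookup keeps only the last value pushed under a given name.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SavedVectorStore:
    """Hypothetical stand-in for a saved FAISS vector store model.

    It remembers which embeddings deployment produced the vectors and the
    name of the credential it expects, but deliberately holds no API key.
    """
    embedding_endpoint: str
    embedding_deployment: str
    credential_name: str


def push_credential(flow_vars: dict[str, str], name: str, key: str) -> dict[str, str]:
    """Return a copy of flow_vars with `name` set to `key`.

    Values are looked up by name, so pushing a second credential under a
    name that already exists replaces the first one.
    """
    updated = dict(flow_vars)
    updated[name] = key
    return updated


def resolve_key(flow_vars: dict[str, str], name: str) -> str:
    """Fetch the key for a credential name, failing loudly if it is absent."""
    if name not in flow_vars:
        raise KeyError(f"credential {name!r} is not available in this branch")
    return flow_vars[name]


# Same name for both credentials: the chat key replaces the embeddings key,
# so a vector store lookup by that name would get the wrong key.
clashing = push_credential({}, "azure_cred", "EMBEDDINGS_KEY")
clashing = push_credential(clashing, "azure_cred", "CHAT_KEY")

# Distinct names: both credentials are available in the Agent Prompter branch.
separate = push_credential({}, "azure_embeddings_cred", "EMBEDDINGS_KEY")
separate = push_credential(separate, "azure_chat_cred", "CHAT_KEY")
```

With `clashing`, only `CHAT_KEY` survives; with `separate`, a store created with `credential_name="azure_embeddings_cred"` resolves `EMBEDDINGS_KEY` while the chat model still finds its own key.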

Best regards,

Adrian