Hi All,

I have 5.2 million rows in a table which i am trying to read and then finally bring it to one CSV.

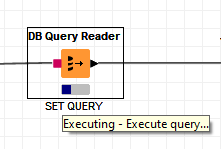

The DB reader has been going on for 20 minutes and it still doesn’t move ahead. What things i can do to fasten up the process?

Hi All,

I have 5.2 million rows in a table which i am trying to read and then finally bring it to one CSV.

The DB reader has been going on for 20 minutes and it still doesn’t move ahead. What things i can do to fasten up the process?

Hi @r_jain

That’s more a db management question than a KNIME question I’m afraid. Questions that come to mind:

From experience with large datasets that also join several tables I noticed that KNIME appears to be not moving, but it’s then “pre-reading” all the data from the different tables/establishing the joiners.

After that’s done, it actually starts to load all rows, which is indicatied by a row count if you hover over the Query Reader.

@r_jain depending on your database you could try and read the data in chunks and append it to a CSV file

Thanks,

I have a impala database.

Impala database

Fetching data from a view. It is 20 odd columns and there are no joins.

I am actually connecting through VPN.

I was thinking to rather host the workflow on the knime server and then try as that might speed up the process.

This usually did the trick when i was working with alteryx.

Thanks!

Running on the KNIME server definitely helps if it has more resources available than your own workstation (and the db itself is not the bottleneck).

I had the same issue with querying view’s in SAP, which where a living nightmare to use. Based on some documentation it appears Impala has a similar functionality. With a subject matter expert I eventually got the SAP source tables that were feeding the view and directly queried those. It worked like a charm afterwards.

If possible, I would try a similar exercise to circumnavigate the view.

What matters a lot is location of your computer making the call and the database. Or said otherwise latency.

In a LAN with latency of 0.01 ms, stuff runs really fast. go to the cloud with 100 ms latency and it will run 1000x times slower.

@r_jain with big data systems much might depend on the structure. You might want to learn about the partitions and maybe iterate over them and also make sure the statistics of the table are up to date.

Also if you can use filters best based on partitions you should use them.

This topic was automatically closed 90 days after the last reply. New replies are no longer allowed.