New Wednesday, new Just KNIME It! challenge!

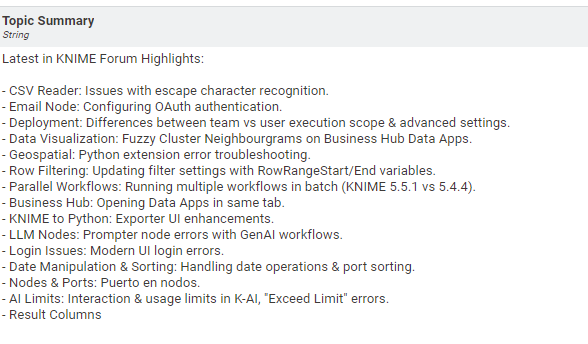

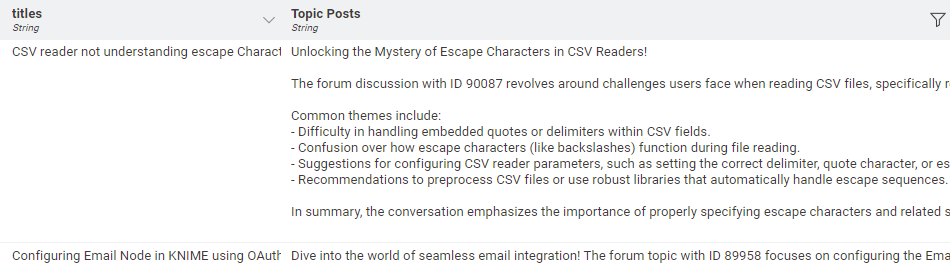

The KNIME Forum contains thousands of topics on a wide range of subjects, with interactions among many users. This makes it a great resource for experimenting with natural language text processing algorithms.

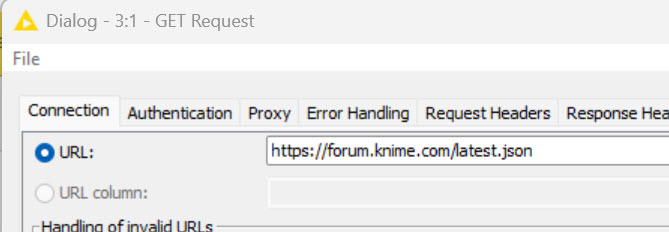

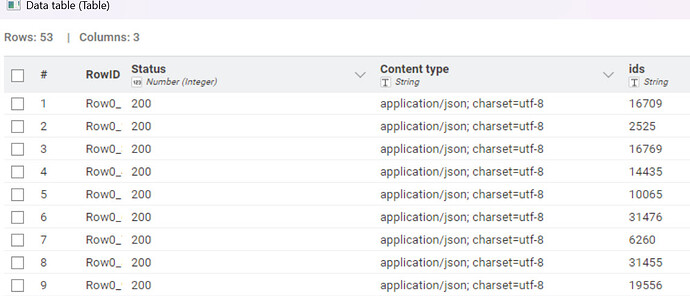

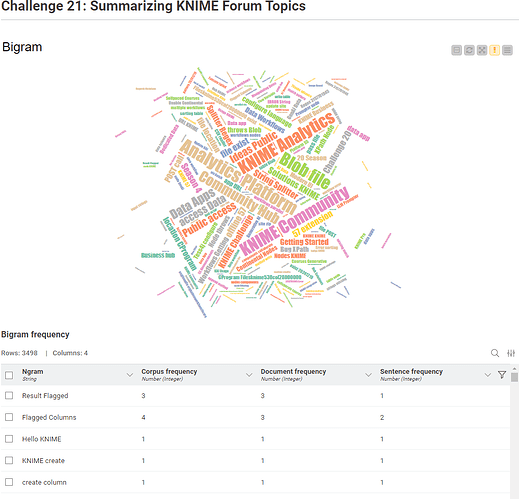

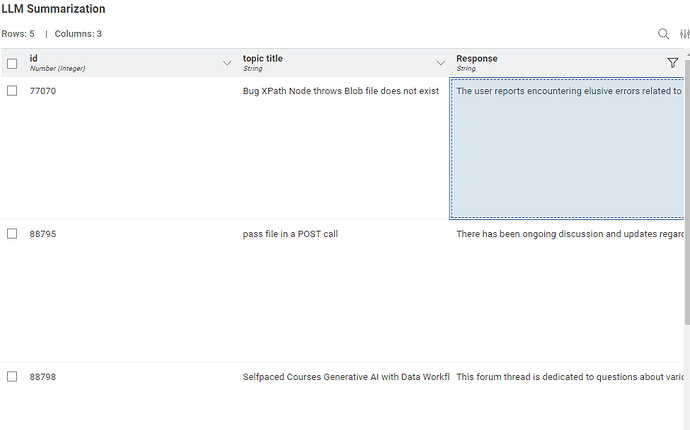

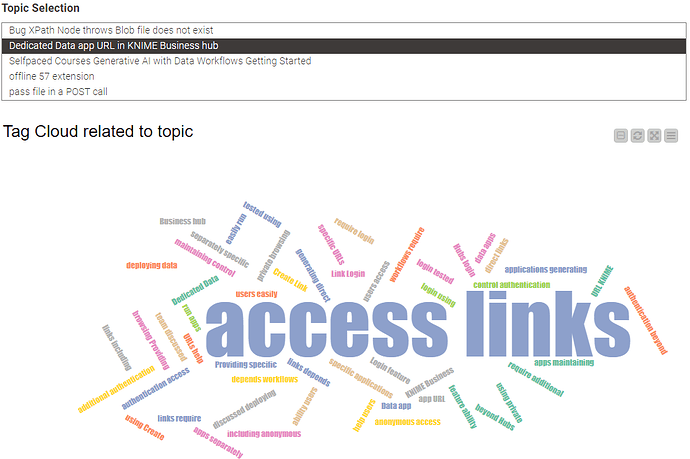

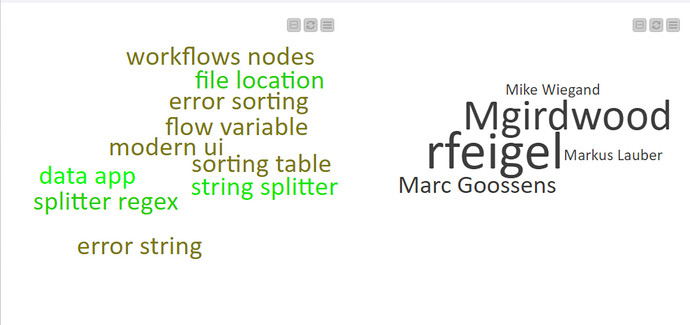

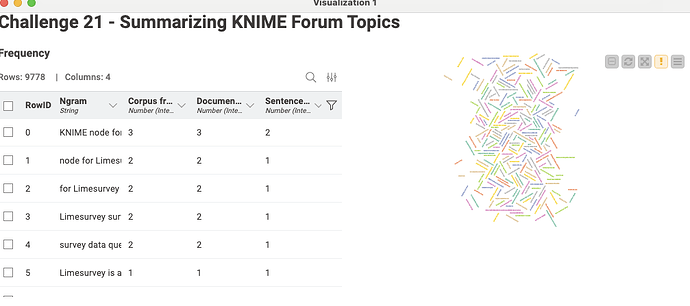

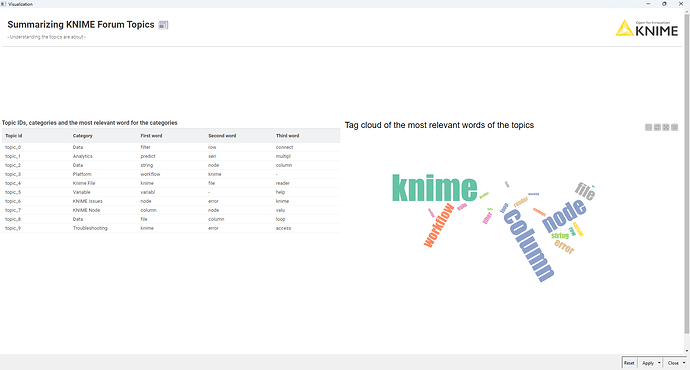

This week, you will use your API interaction skills to fetch the latest forum topics and then experiment with different text summarization techniques to find out which subjects are currently the most discussed in the forum. You can even go one step further and create visualizations of these summaries!
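
As a rough illustration of the fetch-and-summarize idea outside of KNIME, here is a minimal Python sketch. It assumes that the KNIME Forum runs on Discourse and therefore serves its latest topics as JSON at `https://forum.knime.com/latest.json`, with a `topic_list.topics` array whose entries carry a `title`. The helper names `fetch_latest_topics`, `topic_titles`, and `top_terms`, as well as the stop-word list, are hypothetical. Instead of a real summarization technique, it uses plain term frequency over topic titles as a crude baseline summary.

```python
import json
import re
import urllib.request
from collections import Counter

# Assumption: the forum is a Discourse instance exposing /latest.json.
FORUM_URL = "https://forum.knime.com/latest.json"

# Small illustrative stop-word list (hypothetical, not exhaustive).
STOP_WORDS = {
    "a", "an", "and", "are", "as", "at", "be", "by", "can", "do", "does",
    "for", "from", "how", "in", "is", "it", "my", "not", "of", "on", "or",
    "the", "this", "that", "to", "what", "when", "why", "with",
}


def fetch_latest_topics(url: str = FORUM_URL) -> dict:
    """Download the 'latest topics' payload and parse it as JSON."""
    request = urllib.request.Request(url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def topic_titles(payload: dict) -> list[str]:
    """Extract topic titles from a Discourse-style topic_list payload."""
    topics = payload.get("topic_list", {}).get("topics", [])
    return [topic["title"] for topic in topics]


def top_terms(titles: list[str], n: int = 10) -> list[tuple[str, int]]:
    """Crude frequency summary: the n most common non-stop-words in titles."""
    counts = Counter()
    for title in titles:
        words = re.findall(r"[a-z0-9]+", title.lower())
        # Skip stop words and very short tokens.
        counts.update(w for w in words if w not in STOP_WORDS and len(w) > 2)
    return counts.most_common(n)
```

Calling `top_terms(topic_titles(fetch_latest_topics()))` would return the ten most frequent title terms as `(term, count)` pairs, which could then feed a bar chart or word cloud. Term frequency is only a baseline; keyword extraction or abstractive summarization would give richer summaries.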

Here is the challenge. Let’s use this thread to post our solutions to it, which should be uploaded to your public KNIME Hub spaces with the tag JKISeason4-21.

Need help with tags? To add the tag JKISeason4-21 to your workflow, go to the description panel in KNIME Analytics Platform, click the pencil icon to edit it, and you will see the option for adding tags right there.

Let us know if you have any problems!