Hi KNIMErs

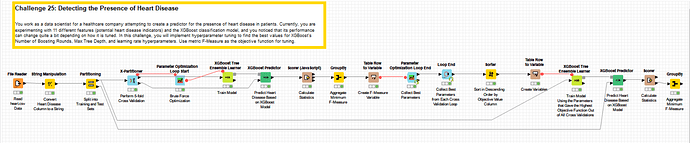

This week I split the data into training (70%) and test (30%) sets and used the training set to run a 5-fold cross-validation with the **X-Partitioner** node. Inside the cross-validation loop, **Parameter Optimization Loop** nodes searched for the optimal parameters of the **XGBoost Tree Ensemble Learner** node (Number of Boosting Rounds, Max Tree Depth and Learning Rate), using the F-measure as the objective function.
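For anyone who wants to see the loop structure outside KNIME, here is a minimal pure-Python sketch of the same nesting: a 70/30 split, five folds over the training rows, and a search over the three parameters inside each fold. It is not the workflow itself. The `evaluate` callable is a hypothetical stand-in for training the XGBoost learner and returning its F-measure. The grid values are made up. The folds are not stratified. The exhaustive (brute-force) search is an assumption, since the Parameter Optimization Loop Start node also offers other search strategies.

```python
import itertools
import random
from typing import Callable, Dict, List, Sequence, Tuple

Params = Dict[str, float]
# Hypothetical callable: trains a model with `params` on the `fit_idx` rows
# and returns its F-measure on the `val_idx` rows.
Evaluator = Callable[[Params, List[int], List[int]], float]


def train_test_split(n_rows: int, test_frac: float = 0.3,
                     seed: int = 42) -> Tuple[List[int], List[int]]:
    """Shuffle row indices and split them into training and test index lists."""
    idx = list(range(n_rows))
    random.Random(seed).shuffle(idx)
    n_test = round(n_rows * test_frac)
    return idx[n_test:], idx[:n_test]


def make_folds(idx: Sequence[int], k: int = 5) -> List[List[int]]:
    """Deal indices into k folds of (almost) equal size, without stratification."""
    return [list(idx[i::k]) for i in range(k)]


def make_grid(rounds, depths, rates) -> List[Params]:
    """Every combination of the three tuned parameters (a brute-force grid)."""
    return [
        {"boosting_rounds": r, "max_depth": d, "learning_rate": lr}
        for r, d, lr in itertools.product(rounds, depths, rates)
    ]


def tune_per_fold(train_idx: Sequence[int], grid: List[Params],
                  evaluate: Evaluator, k: int = 5) -> List[Tuple[float, Params]]:
    """Outer loop over the k folds, inner loop over the parameter grid.

    Returns one (best validation F-measure, best parameters) pair per fold.
    """
    folds = make_folds(train_idx, k)
    results = []
    for i, val_idx in enumerate(folds):
        # Fit on every fold except the i-th one, validate on the i-th one.
        fit_idx = [row for j, fold in enumerate(folds) if j != i for row in fold]
        scored = [(evaluate(p, fit_idx, val_idx), p) for p in grid]
        results.append(max(scored, key=lambda pair: pair[0]))
    return results


def toy_evaluate(params: Params, fit_idx: List[int], val_idx: List[int]) -> float:
    """Hypothetical stand-in score that peaks at 21 rounds, depth 3, rate 0.2."""
    return (1.0
            - abs(params["max_depth"] - 3) * 0.05
            - abs(params["learning_rate"] - 0.2)
            - abs(params["boosting_rounds"] - 21) / 1000)


train_idx, test_idx = train_test_split(1000)
grid = make_grid(rounds=[11, 21, 31], depths=[2, 3, 4], rates=[0.1, 0.2, 0.3])
for score, params in tune_per_fold(train_idx, grid, toy_evaluate):
    print(round(score, 3), params)
```

Swapping `toy_evaluate` for a function that actually fits a model is the only change needed to make the search meaningful; the test rows in `test_idx` are never touched during tuning.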

After obtaining the optimal parameters from each cross-validation fold, I selected the best set and used it to train a final XGBoost model on the full training set, which was then applied to the test set.
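The post does not say exactly how the best set was picked from the per-fold winners. One plausible reading, sketched below under that assumption, is to keep the winner with the highest validation F-measure. The fold results are made-up numbers for illustration, not output from the workflow.

```python
from typing import Dict, List, Tuple

Params = Dict[str, float]


def select_final_params(fold_results: List[Tuple[float, Params]]) -> Tuple[float, Params]:
    """Pick the per-fold winner with the highest validation F-measure.

    Assumption: "best" means highest validation score. On a tie the earlier
    fold's winner is kept, because max() returns the first maximal item.
    """
    if not fold_results:
        raise ValueError("need at least one fold result")
    return max(fold_results, key=lambda pair: pair[0])


# Hypothetical per-fold results: (validation F-measure, winning parameters).
# These are illustrative values, not results from the workflow.
fold_results = [
    (0.85, {"boosting_rounds": 31, "max_depth": 4, "learning_rate": 0.1}),
    (0.89, {"boosting_rounds": 21, "max_depth": 3, "learning_rate": 0.2}),
    (0.86, {"boosting_rounds": 21, "max_depth": 3, "learning_rate": 0.2}),
    (0.84, {"boosting_rounds": 11, "max_depth": 2, "learning_rate": 0.3}),
    (0.87, {"boosting_rounds": 31, "max_depth": 3, "learning_rate": 0.1}),
]
best_score, best_params = select_final_params(fold_results)
print(best_score, best_params)
# The final model would then be trained once on the full 70% training set
# with best_params and applied to the held-out 30% test set.
```

Another common choice is the parameter set that wins in the most folds, which is less sensitive to a single lucky fold.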

The average F-Measure for the prediction on the test set was 0.87, using the following parameters:

- Number of Boosting Rounds = 21
- Max Tree Depth = 3
- Learning Rate = 0.2
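The F-measure is the harmonic mean of precision and recall, F = 2PR / (P + R). The sketch below assumes that the "average" F-measure is the unweighted (macro) mean of the per-class F-measures, which is one common way to summarise a per-class table like the one the Scorer node produces; a class-weighted average would give a different number. The labels are a toy example.

```python
from typing import Dict, Sequence


def f_measure_per_class(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    """F-measure (harmonic mean of precision and recall) for each class label."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    scores = {}
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        scores[c] = 2 * precision * recall / denom if denom else 0.0
    return scores


def macro_f_measure(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Unweighted mean of the per-class F-measures (macro average)."""
    per_class = f_measure_per_class(y_true, y_pred)
    if not per_class:
        raise ValueError("no labels to score")
    return sum(per_class.values()) / len(per_class)


# Toy labels, not data from the challenge.
y_true = ["yes", "yes", "no", "no", "yes"]
y_pred = ["yes", "no", "no", "yes", "yes"]
print(f_measure_per_class(y_true, y_pred))
print(round(macro_f_measure(y_true, y_pred), 4))  # 0.5833
```

Here "yes" scores 2/3 and "no" scores 1/2, so the macro average is 7/12.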

You can find my workflow on the KNIME Hub here:

Thanks to @aworker for your guidance

Best wishes

Heather