The same workflows, with exactly the same data, worked before but now fail with new execution errors in the “Create Local Big Data Environment” node. Each of these new failures contains a similar log line:

: java.lang.IllegalAccessError: class org.apache.spark.sql.catalyst.util.SparkDateTimeUtils (in unnamed module @0x3ce2b664) cannot access class sun.util.calendar.ZoneInfo (in module java.base) because module java.base does not export sun.util.calendar to unnamed module @0x3ce2b664

log.txt (12.3 KB)

version 5.5

4.7.4

I really don’t understand this. It sometimes stops at persist, between, or collect, but I can’t isolate it. It seems that something in a hidden layer is accessing the class sun.util.calendar.ZoneInfo in an inappropriate way.
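The error message itself says that `java.base` does not export `sun.util.calendar` to the unnamed module, which is where classpath jars such as Spark's end up. A small diagnostic sketch, assuming Java 9 or later, can confirm this on a given JVM. It prints the Java version, the runtime class behind `TimeZone.getDefault()`, and whether that package is exported to the unnamed module. The class name `ZoneInfoExportCheck` is hypothetical. On Java 17 or later with default settings, the last value should be `false`. The standard JVM options `--add-exports java.base/sun.util.calendar=ALL-UNNAMED` (or `--add-opens` with the same argument) would flip it to `true`. Whether the JVM behind the local big data environment picks up such an option, and from which configuration file, is an assumption not verified here.

```java
import java.util.TimeZone;

public class ZoneInfoExportCheck {

    // JDK-internal package named in the IllegalAccessError.
    static final String PKG = "sun.util.calendar";

    // When this class is run from the classpath, it lives in an unnamed module,
    // the same situation as Spark's jars. Checks whether java.base exports
    // (or opens) PKG to that module at run time.
    static boolean exportedToUnnamed() {
        Module javaBase = Object.class.getModule();
        Module self = ZoneInfoExportCheck.class.getModule();
        return javaBase.isExported(PKG, self);
    }

    // Runtime class of the default time zone; on OpenJDK builds this is
    // typically sun.util.calendar.ZoneInfo, the class Spark touches.
    static String defaultZoneClass() {
        return TimeZone.getDefault().getClass().getName();
    }

    public static void main(String[] args) {
        System.out.println("Java version:           " + System.getProperty("java.version"));
        System.out.println("Default TimeZone class: " + defaultZoneClass());
        System.out.println(PKG + " exported to unnamed module: " + exportedToUnnamed());
    }
}
```

Running it once with plain `java ZoneInfoExportCheck` and once with the `--add-exports` option added shows whether the flag changes the export status on that particular JVM.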

Later in the evening…

KNIME_project_forum.knwf (356.5 KB)

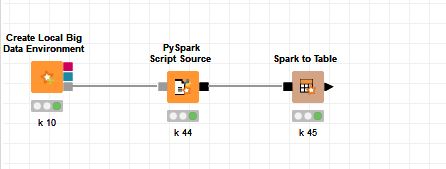

You can reproduce it with different versions using the attached workflow. The first part creates a fake file. The second part reads it as a CSV in a PySpark source, which triggers the error in the newer versions.