Hi,

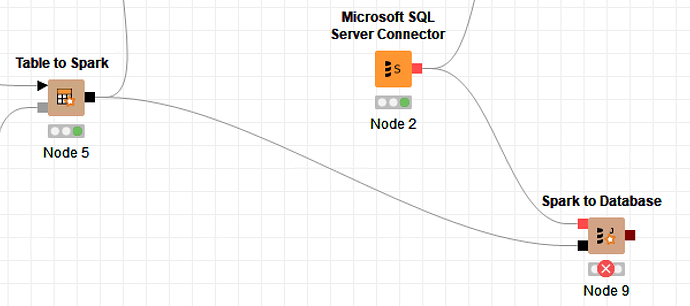

I’m trying to write from Spark to an MS SQL database (everything runs in Azure), as shown in the screenshot above.

I get the following error message from node 9 (Spark to Database):

    ERROR Spark to Database 0:9 Execute failed: Failed to load JDBC data: Job aborted due to stage failure: Task 0 in stage 3.0 failed 4 times, most recent failure: Lost task 0.3 in stage 3.0 (TID 30, wn2-knimes.p2kmzgwdndquxaqnytnjfesp1a.ax.internal.cloudapp.net, executor 1): java.sql.SQLException: Invalid state, the Connection object is closed.
        at net.sourceforge.jtds.jdbc.JtdsConnection.checkOpen(JtdsConnection.java:1744)
        at net.sourceforge.jtds.jdbc.JtdsConnection.rollback(JtdsConnection.java:2164)
        at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$.savePartition(JdbcUtils.scala:695)
        at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$$anonfun$saveTable$1.apply(JdbcUtils.scala:821)
        at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$$anonfun$saveTable$1.apply(JdbcUtils.scala:821)
        at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1$$anonfun$apply$29.apply(RDD.scala:935)
        at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1$$anonfun$apply$29.apply(RDD.scala:935)
        at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2074)
        at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2074)
        at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:87)
        at org.apache.spark.scheduler.Task.run(Task.scala:109)
        at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:345)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
        at java.lang.Thread.run(Thread.java:748)
    Driver stacktrace:

(The stack trace really does end here, right after "Driver stacktrace:".)
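A note on reading the trace: the exception is raised in `JtdsConnection.checkOpen`, called from `JtdsConnection.rollback`, which Spark's `JdbcUtils.savePartition` invokes. That suggests the write had already failed and the connection was closed by the time Spark tried to roll back, so the message shown may be masking the original error. The minimal Java sketch below illustrates that pattern under two assumptions: Spark rolls back in a `finally` block when the partition was not committed, and the driver rejects `rollback()` on a closed connection. `FakeConnection`, `insertRows` and `savePartition` here are hypothetical stand-ins, not Spark or jTDS code.

```java
import java.sql.SQLException;

// Minimal sketch (not Spark's actual code): an exception thrown from a
// finally block replaces the exception that was already propagating.
public class MaskedFailureDemo {

    // Hypothetical stand-in for a JDBC connection; not the jTDS API.
    static final class FakeConnection {
        private boolean open = true;

        void close() {
            open = false;
        }

        void rollback() throws SQLException {
            // Mimics a driver that refuses calls on a closed connection.
            if (!open) {
                throw new SQLException("Invalid state, the Connection object is closed.");
            }
        }
    }

    // Hypothetical insert step: the connection drops, then the real error is thrown.
    static void insertRows(FakeConnection conn) throws SQLException {
        conn.close();
        throw new SQLException("original failure during insert");
    }

    // Hypothetical write loop, assuming a "roll back if not committed" finally block.
    static void savePartition(FakeConnection conn) throws SQLException {
        boolean committed = false;
        try {
            insertRows(conn);
            committed = true; // only reached after a successful write and commit
        } finally {
            if (!committed) {
                // Throws because the connection is already closed; this
                // exception replaces the "original failure" one.
                conn.rollback();
            }
        }
    }

    // Returns the message of whatever exception reaches the caller.
    static String run() {
        try {
            savePartition(new FakeConnection());
            return "no exception";
        } catch (SQLException e) {
            return e.getMessage();
        }
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```

Running `main` prints only the rollback message; the "original failure during insert" exception is lost, and because this is a plain `finally` (not try-with-resources), it is not attached via `getSuppressed()` either. If the same thing is happening in the Spark job, the real cause would be whatever closed the connection before the rollback.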

The option "Upload local JDBC driver" is selected.

Any idea what’s happening here?

br filum