com.knime.server.job.max_execution_time=<duration with unit, e.g. 60m, 36h, or 2d> [RT]

Sets a maximum execution time for jobs. If a job executes longer than this value, it will be canceled and eventually discarded (see the com.knime.server.job.discard_after_timeout option). The default is unlimited job execution time. Note that for this setting to work, com.knime.server.job.swap_check_interval needs to be set to a value lower than com.knime.server.job.max_execution_time.

The above server config option does exist, but OP already mentioned it won’t work for them, as they have other workflows that do need to execute longer than that.

It’s just the one workflow that has time constraints.
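For anyone who does use max_execution_time, the note about swap_check_interval can be sanity-checked before touching the server config. Below is a rough sketch in Python, not anything KNIME ships: the helper names are hypothetical, and it assumes durations only use the m, h, and d suffixes shown in the documentation example.

```python
import re

# Assumption: only the unit suffixes from the documentation example (m, h, d).
_UNIT_MINUTES = {"m": 1, "h": 60, "d": 24 * 60}


def duration_to_minutes(value: str) -> int:
    """Convert a duration string such as '60m', '36h', or '2d' to minutes."""
    match = re.fullmatch(r"(\d+)([mhd])", value.strip())
    if match is None:
        raise ValueError(f"unsupported duration: {value!r}")
    amount, unit = match.groups()
    return int(amount) * _UNIT_MINUTES[unit]


def swap_check_is_valid(swap_check_interval: str, max_execution_time: str) -> bool:
    """Return True if the swap check interval is strictly lower than the
    maximum execution time, as the option description requires."""
    return duration_to_minutes(swap_check_interval) < duration_to_minutes(
        max_execution_time
    )
```

For example, `swap_check_is_valid("1m", "30m")` passes, while `swap_check_is_valid("60m", "30m")` flags a combination where the time limit would not take effect as intended.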

com.knime.server.job.discard_after_timeout=<true|false> [RT]

Specifies whether jobs that exceeded the maximum execution time should be canceled and discarded (true) or only canceled (false). May be used in conjunction with the com.knime.server.job.max_execution_time option. The default (true) is to discard those jobs.

This won’t help here; this only controls whether the execution files get discarded immediately or left in swap for the 7d default duration (com.knime.server.job.max_lifetime).

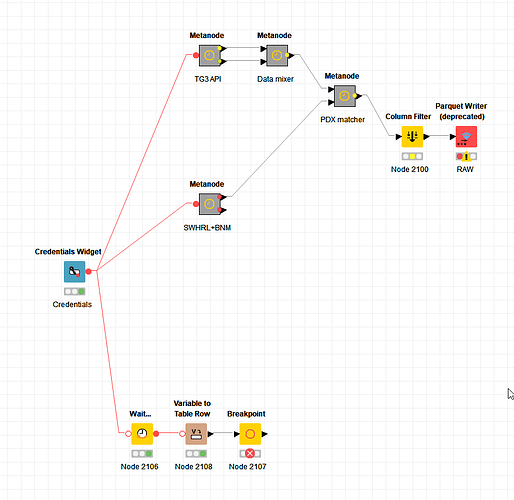

What I would probably do is this:

Get an idea of how long the Special Job workflow actually takes to execute.

You could utilize the Execution_time_metanode for this. If it is under 30 min, great.

This is to keep tabs on how long it’s actually running, in case data makes it increase, so you can take corrective measures before it exceeds the 30m threshold.
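Once you have a few measured run times, the "keep tabs on it" part can be as simple as comparing them against the 30m limit with some headroom. Here is a minimal sketch in Python; the function name, the 80% warning fraction, and the idea of feeding it a plain list of minutes are all my assumptions, not something KNIME provides.

```python
from statistics import mean


def check_runtime_headroom(runtimes_min, limit_min=30.0, warn_fraction=0.8):
    """Summarize measured run times (in minutes) against a time limit.

    Hypothetical helper: 'warning' means the longest run has used at least
    warn_fraction of the limit, 'over' means it reached or exceeded it.
    """
    if not runtimes_min:
        raise ValueError("need at least one measured run time")

    longest = max(runtimes_min)
    average = mean(runtimes_min)

    if longest >= limit_min:
        status = "over"
    elif longest >= warn_fraction * limit_min:
        status = "warning"
    else:
        status = "ok"

    return {"longest": longest, "average": average, "status": status}
```

Feeding it the durations you collect per run gives an early signal: a "warning" result is the cue to look at data growth or executor resources before the job actually crosses the threshold.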

KNIME Server feeds jobs to its executor(s) on a first-come, first-served, up-to-capacity basis. So there’s no individual “kill this job if it runs longer than X min, but only this one job” option.

If you have jobs running for hours, then the first thing I’d probably do is take a hard look at how many resources (core tokens and heap) the executor is getting: heap to ensure it has the memory to handle all the jobs and data it’s being asked to, and cores/tokens to ensure it can crunch things fast enough that they don’t take forever to finish.

But, assuming that just buffing your existing executor won’t quite cut it, another thing you can do is set up Distributed Executors [1] so that you have an executor basically dedicated to time-sensitive jobs like your Special Job.

Then, you use Workflow Pinning [2] to route those special jobs to your super-fast executor so that it’s not sitting in queue with slower traffic.

Then, as long as its data doesn’t grow too much and it stays under the 30m, you’ll be golden.

[1] KNIME Server Installation Guide

[2] KNIME Server Administration Guide