Hi,

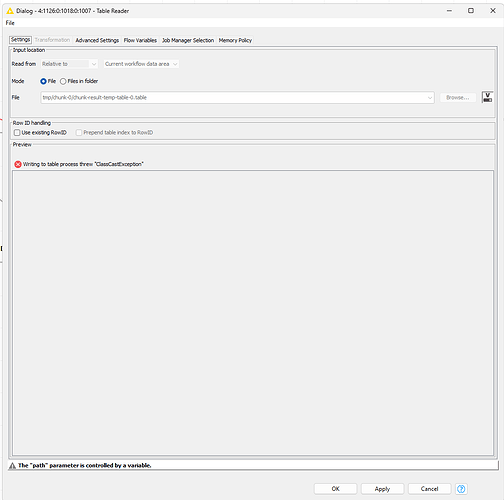

I previously reported this bug, but the thread was closed before I received any feedback on the “ClassCastException” issue. I want to bring it to your attention again because whenever this error occurs (as it just did again, unexpectedly and without any particular change on my side), the whole execution and its results have to be discarded.

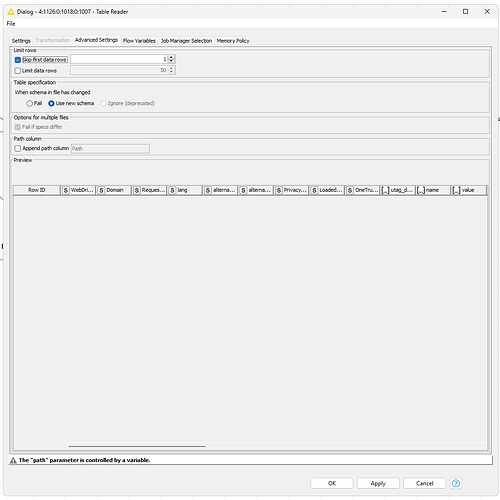

However, limiting the number of rows read lets the table load, which indicates that “garbage” data is being written to the table somewhere near its end, likely during the previous loop iteration.

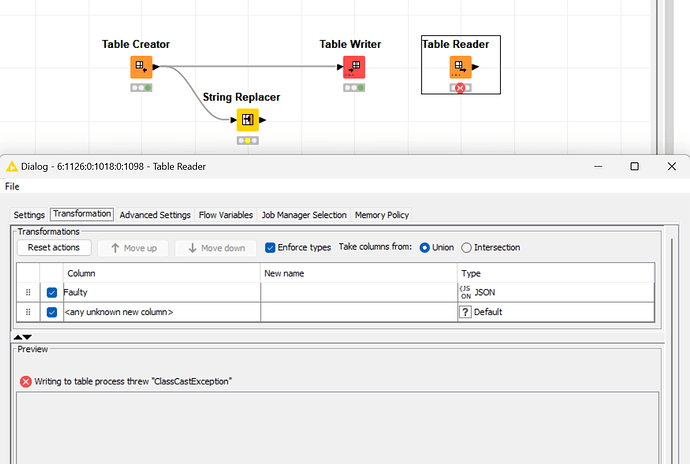

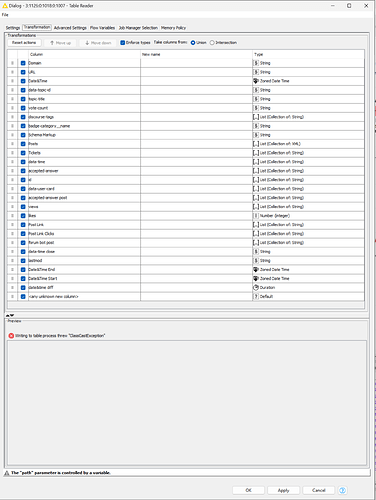

ERROR Table Reader 6:1126:0:1018:0:1007 Execute failed: Writing to table process threw "ClassCastException"

Attached is the thread dump. I have backed up the table file, but I can only share it privately because it contains client data.

231010-knime-thread-dump-ClassCastException.txt (330.3 KB)

As before, I can share the table files via a private download link. Please reach out to me via DM or email as usual.

PS: I have already isolated the data that triggers the issue and can reproduce it. I am currently trying to create a workflow with anonymized data that I can share.

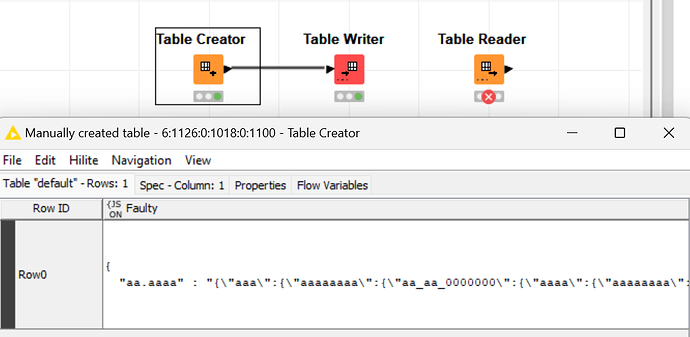

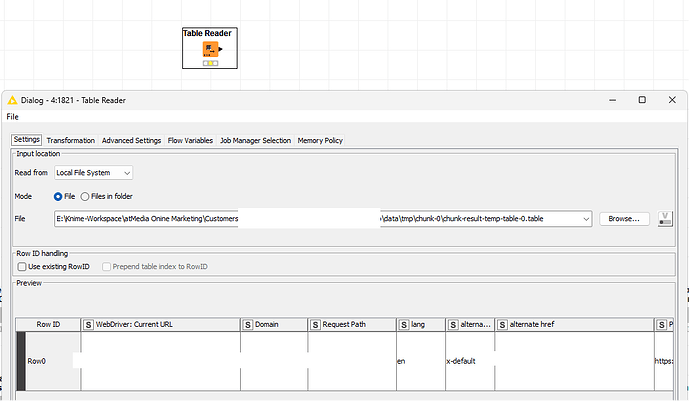

PPS: The issue is caused by the JSON data. Although the data is valid JSON (KNIME converts it to JSON without complaint, and an external JSON validator confirms it), it contains partially escaped JSON stored as a string in a JSON key.
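To illustrate the general shape of such data, here is a minimal Python sketch using a made-up record (not the client data), assuming the escaped JSON sits in a string value. The outer document parses as valid JSON, while the nested part is only a string that becomes JSON when it is parsed a second time.

```python
import json

# Hypothetical example record (NOT the actual client data): the value of
# "payload" is a plain string that happens to contain escaped JSON.
raw = '{"id": 1, "payload": "{\\"name\\": \\"test\\", \\"tags\\": [\\"a\\", \\"b\\"]}"}'

# The outer document is valid JSON, so any validator accepts it.
outer = json.loads(raw)
print(type(outer["payload"]))  # <class 'str'>: the nested part is just text

# Only a second parse turns the embedded string into a JSON structure.
inner = json.loads(outer["payload"])
print(inner["tags"])  # ['a', 'b']
```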

test-json-ClassCastException.txt (27.9 KB)

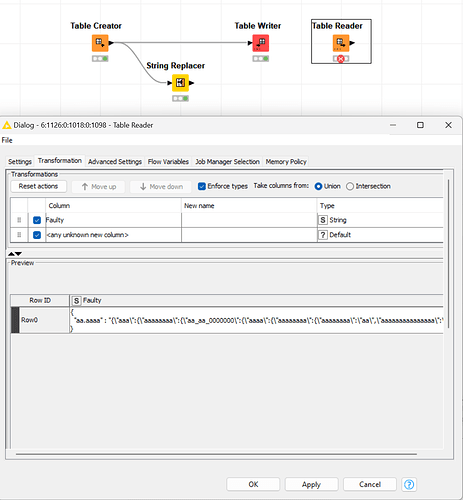

However, with the exact same setup in the test workflow I am sharing here, the issue is NOT reproducible. When I apply the workaround from the other post linked at the top (replacing each character with itself), the Table Reader works, because this converts the JSON column to String. Reading the data also works without any replacement if the column type is set to String.

Best

Mike