Hi @hechifa39 and welcome to the KNIME Forum!

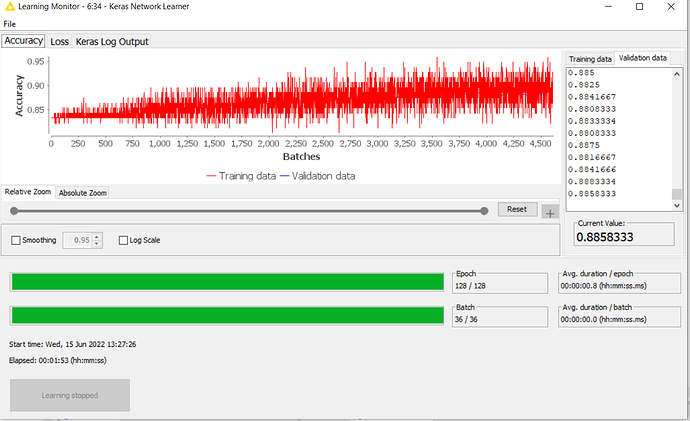

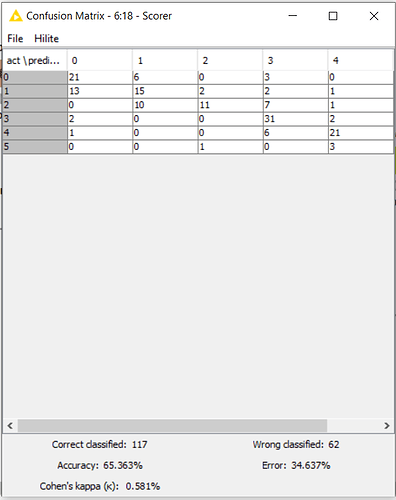

When training ML models, it’s often the case that the overall accuracy achieved on the test set is a bit lower than that achieved on the train set. If that discrepancy is fairly big, there is usually something that needs to be improved or fixed.
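Outside of KNIME, the comparison described above can be sketched in a few lines of plain Python. This is only an illustration: the labels, the predictions, and the `accuracy` helper are made up for the example and are not taken from your workflow.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical labels and predictions, for illustration only.
train_acc = accuracy([1, 0, 1, 1, 0, 1, 0, 0], [1, 0, 1, 1, 0, 1, 0, 0])
test_acc = accuracy([1, 0, 1, 0], [1, 1, 0, 0])

# A large positive gap suggests overfitting or a problem in the data flow.
gap = train_acc - test_acc
print(f"train={train_acc:.2f} test={test_acc:.2f} gap={gap:.2f}")
```

What counts as a "fairly big" gap depends on the problem, so there is no fixed threshold here.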

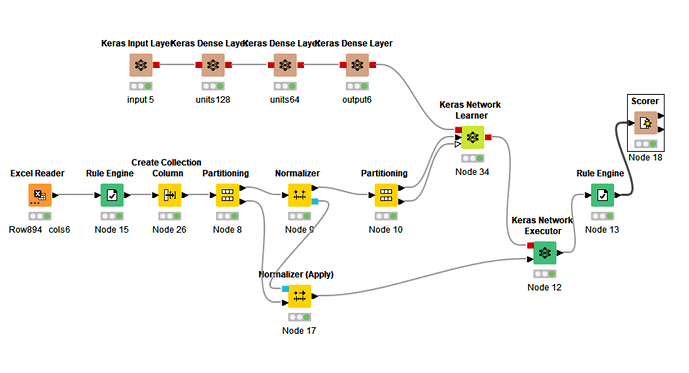

From a quick look at your workflow screenshot, there seems to be a problem in how you pass the data (and what data you pass) to the Keras Network Learner node, which artificially inflates the performance on the validation set. The first input data port (black arrow) of the Keras Network Learner should receive the output data of the Normalizer node; no additional Partitioning node is needed in between.

If you then want to have both a validation set and a test set, you should place the second Partitioning node after the Normalizer (Apply) node and connect the upper output port of that Partitioning node to the second input data port (white arrow) of the Keras Network Learner node. The second output port of the Partitioning node should then be connected to the Keras Network Executor node. See the example workflow here: Simple Feed Forward Neural Network for Binary Classification – KNIME Hub.
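If it helps to see the same data flow as code, here is a rough plain-Python sketch of it. It is not how the KNIME nodes are implemented: the helper names, the min-max scaling, the 70/30 and 50/50 split ratios, and the toy data are all assumptions made for the example.

```python
import random

def partition(rows, fraction, seed):
    """Shuffle a copy of rows and split it; the first part gets `fraction` of them."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * fraction)
    return shuffled[:cut], shuffled[cut:]

def fit_min_max(rows):
    """Learn per-column (min, max) from the training rows only (Normalizer)."""
    return [(min(col), max(col)) for col in zip(*rows)]

def apply_min_max(rows, params):
    """Rescale rows with already learned parameters (Normalizer (Apply))."""
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0 for v, (lo, hi) in zip(row, params)]
        for row in rows
    ]

# Hypothetical toy data: 100 rows with two numeric features.
rng = random.Random(0)
data = [[rng.uniform(0, 10), rng.uniform(-5, 5)] for _ in range(100)]

# First partitioning: training rows vs. everything else.
train_raw, rest_raw = partition(data, 0.7, seed=1)

# Normalization parameters come from the training rows only.
params = fit_min_max(train_raw)
train = apply_min_max(train_raw, params)   # -> learner, training input
rest = apply_min_max(rest_raw, params)

# Second partitioning, after the normalization has been applied.
validation, test = partition(rest, 0.5, seed=2)  # -> learner validation input / executor

print(len(train), len(validation), len(test))
```

The point of the sketch is that the training, validation, and test rows never overlap, and that the scaling parameters are learned from the training rows alone.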

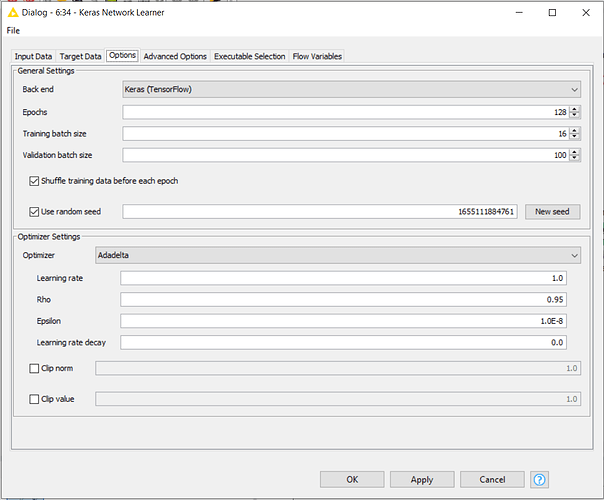

Following this new workflow design will likely change the overall accuracy on your training and validation sets, but at least the results will no longer be artificially inflated. This means that you can now focus on improving the actual overall accuracy of your model. For example, you could add more hidden layers via the Keras Dense Layer node(s), optimize the number of units (= neurons) per layer using the Parameter Optimization Loop nodes (mind that this can be computationally expensive!), or tweak (or optimize) the settings of the Keras Network Learner node (e.g., choice of optimizer, batch size, epochs, etc.).

You might also find it useful to read this general set of hints on why an ML model does not learn and what to do about it: My ML Model Won't Learn. What Should I Do? | KNIME.

Hope it helps! Good luck!

Best,

Roberto
