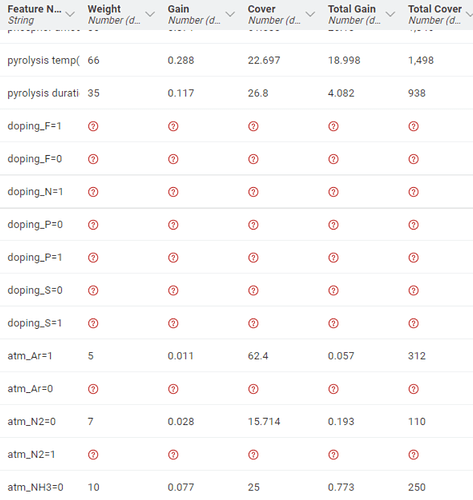

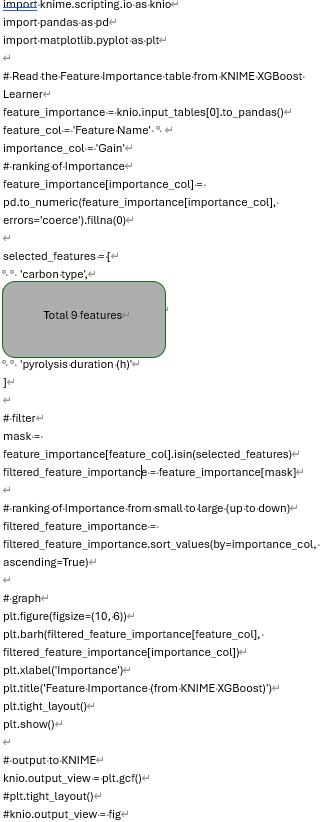

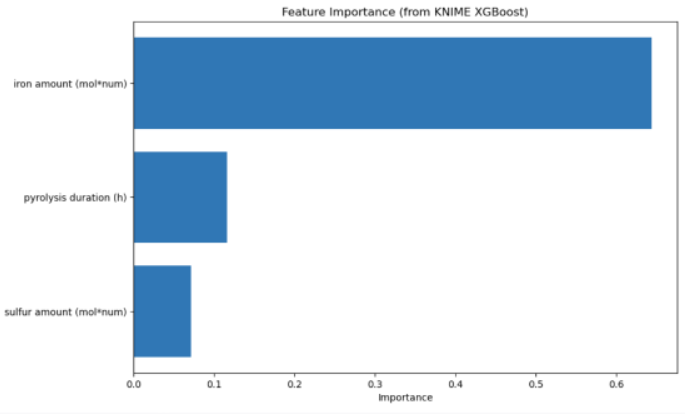

My main problem is this: I set up an XGBoost model (shown below) and used one-hot encoding for my data (3 of the 12 parameters were one-hot encoded). After the model was trained, the table view looks like the third graph. The important point is that a parameter such as “doping elements” is split into multiple layers (doping F, doping N…), and there are no values inside them. I then wrote a Python view to plot the feature importance, but it shows only three of the nine features (fourth graph). So far I can't tell what is wrong with my code. I want the graph to show all 9 features, not just 3…
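
One possible cause, offered as a sketch rather than a confirmed diagnosis: XGBoost's `Booster.get_score()` (which `xgboost.plot_importance` relies on) only reports features that were used in at least one split, so unused columns never reach the plot. Assuming that is what happens here, the missing features can be filled in with zero before plotting. The helper name `fill_missing_importances`, the scores, and every column name other than the `doping_*` ones are hypothetical.

```python
def fill_missing_importances(scores, feature_names):
    """Return an importance for every feature, using 0.0 for features absent from `scores`."""
    return {name: float(scores.get(name, 0.0)) for name in feature_names}


# Hypothetical values standing in for what booster.get_score() might return.
scores = {"temperature": 12.0, "doping_N": 5.0, "pressure": 3.0}
# Hypothetical list of all input columns after one-hot encoding.
feature_names = ["temperature", "pressure", "doping_F", "doping_N", "doping_S"]

all_scores = fill_missing_importances(scores, feature_names)

# Print every feature, most important first; unused features appear with 0.0.
for name, value in sorted(all_scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {value}")
```

The completed dictionary can then be passed to whatever bar chart the Python view already draws, so that zero-importance features show up as empty bars instead of disappearing.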

My second question: what should I write in the code for the feature graph to undo the one-hot encoding, so that “doping element” appears as a single feature instead of multiple features (“doping_F”, “doping_N”, “doping_S”…)?
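
To make this second question concrete, here is a minimal sketch of the kind of grouping I have in mind: the importances of all columns that share a prefix are summed back into one feature. It assumes the one-hot columns are named `<feature>_<value>` (as in “doping_F”). Summing is only one possible convention, and the function name `group_onehot_importances` and the numbers are hypothetical.

```python
from collections import defaultdict


def group_onehot_importances(scores, prefixes):
    """Sum importances of columns named '<prefix>_<value>' under the prefix name."""
    grouped = defaultdict(float)
    for column, value in scores.items():
        # Map a one-hot column back to its parent feature; keep other columns as-is.
        parent = next((p for p in prefixes if column.startswith(p + "_")), column)
        grouped[parent] += value
    return dict(grouped)


# Hypothetical importance values for illustration only.
scores = {"temperature": 0.5, "doping_F": 0.125, "doping_N": 0.25, "doping_S": 0.125}
grouped = group_onehot_importances(scores, prefixes=["doping"])
print(grouped)
```

With this approach the model itself still sees the one-hot columns; only the importance values are merged before plotting.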

My third question: I have three targets, so I expect three feature graphs, one for each target. Is it correct to select the target in the model learner in order to get the corresponding feature graph?

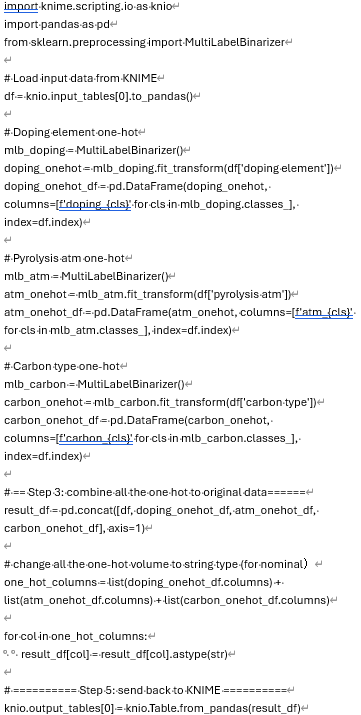

first graph: model

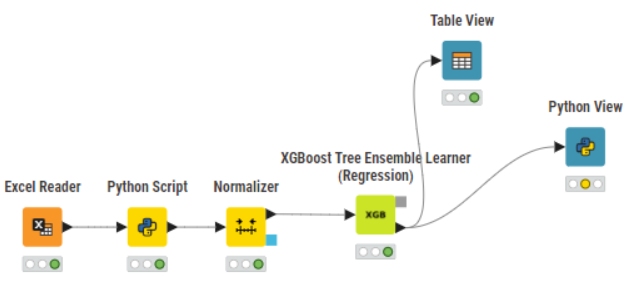

second graph: one-hot encoding applied to 3 parameters.

third graph: table view from the model

fourth graph:

fifth graph: code in python view (for feature importance)