Hi @tone_n_tune,

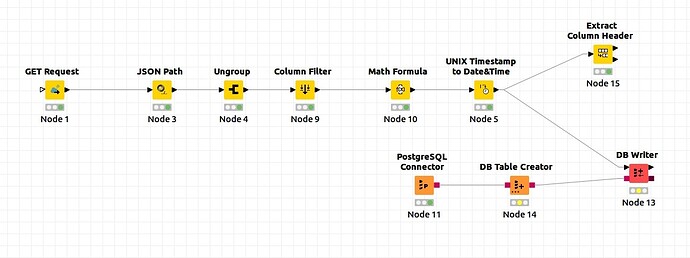

A few comments about your workflow:

You don’t have to link all your nodes through the flow variable port. Depending on why you did this, keep in mind that:

- Execution already flows to the next node that’s connected to a node

- Flow variables are also passed along those same connections

If you linked them because of my suggestion to link your connection node, please take a look at this example:

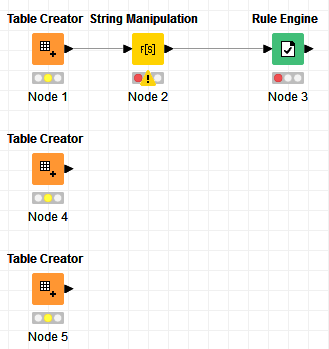

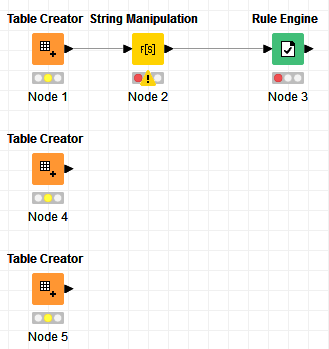

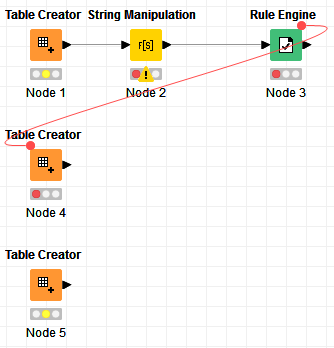

If this workflow is executed, Node 1, Node 4 and Node 5 will execute first, in parallel. Node 2 then follows once Node 1 is done, and Node 3 once Node 2 is done.

Node 4, like Node 1, has no node connected to its left (its input), so it executes as soon as the workflow is run. The same applies to Node 5. That is why Node 1, Node 4 and Node 5 all start right away when the workflow is executed.

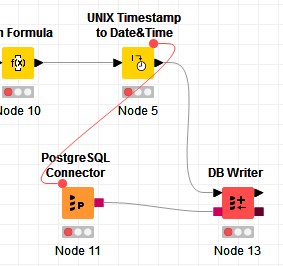

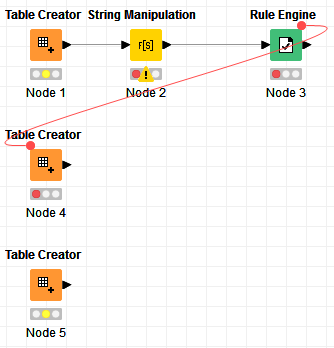

If I want Node 4 to execute only after Node 3 is done, I have to link Node 3 to Node 4. Since Node 4 is a Table Creator and has no data input port (black triangle), I can only link it via the flow variable port (every node has an input and an output flow variable port).

Now, when the workflow is executed, only Node 1 and Node 5 will start executing at first. Node 4 will only execute after Node 3 is done.
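To make the rule concrete (a node starts once every node connected to its inputs has finished), here is a simplified Python model of the two examples above. It is only an illustration, not how KNIME's executor is implemented: `execution_waves` is a hypothetical helper, and grouping nodes into "waves" is a simplification (in KNIME, Node 2 starts as soon as Node 1 finishes, without waiting for the other nodes of its wave).

```python
from collections import defaultdict


def execution_waves(nodes, links):
    """Group nodes into waves of nodes that can run in parallel.

    `links` is a list of (upstream, downstream) pairs. A node lands in a wave
    only when all of its upstream nodes were placed in earlier waves.
    """
    upstream = defaultdict(set)
    for src, dst in links:
        upstream[dst].add(src)

    done, waves = set(), []
    remaining = list(nodes)
    while remaining:
        # Nodes whose upstream nodes have all finished are ready to run.
        ready = [n for n in remaining if upstream[n] <= done]
        if not ready:
            raise ValueError("cycle detected: no node is ready to run")
        waves.append(ready)
        done.update(ready)
        remaining = [n for n in remaining if n not in done]
    return waves


nodes = [1, 2, 3, 4, 5]
# First example: Node 1 -> Node 2 -> Node 3; Nodes 4 and 5 have no inputs.
print(execution_waves(nodes, [(1, 2), (2, 3)]))          # [[1, 4, 5], [2], [3]]
# After linking Node 3 to Node 4 via the flow variable port.
print(execution_waves(nodes, [(1, 2), (2, 3), (3, 4)]))  # [[1, 5], [2], [3], [4]]
```

Adding the single link (3, 4) is enough to push Node 4 to the end, which is exactly what the flow variable connection does in the workflow.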

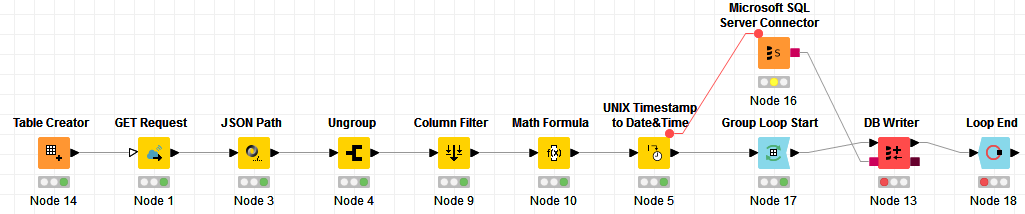

Applying the same logic to your workflow: you want to establish your DB connection only when you are ready to perform DB operations. In other words, you want it after all your KNIME data operations are complete, i.e. after your Node 5. If you establish the connection as soon as the workflow starts and your KNIME data operations take a long time (downloading data via a GET Request, then manipulating it with nodes such as Ungroup, etc.), the connection may expire before you get to use it.

Like the Table Creator, the DB Connector has no data input port, so we connect it via the flow variable port.

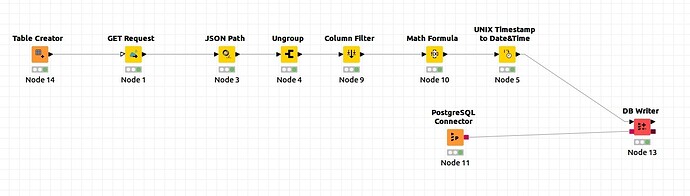

I modified your workflow, and it looks like this now:

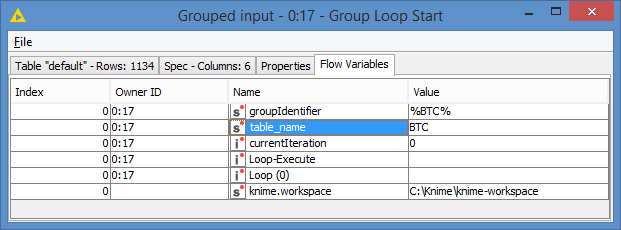

I added a Group Loop that groups by your table_name column.

There is one additional modification you need to make, which I cannot do myself because I don’t have a DB connection. You will only be able to do it once you have executed Node 16 (or whichever DB connection node you use). It dynamically passes the table name to your DB Writer so that each group is written to its own table. You only need to do this once.

Instructions:

- Execute Node 17

- Execute Node 16

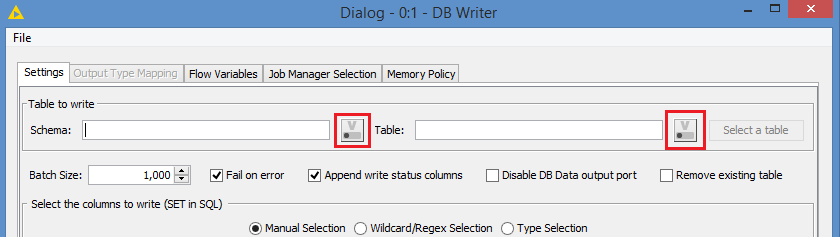

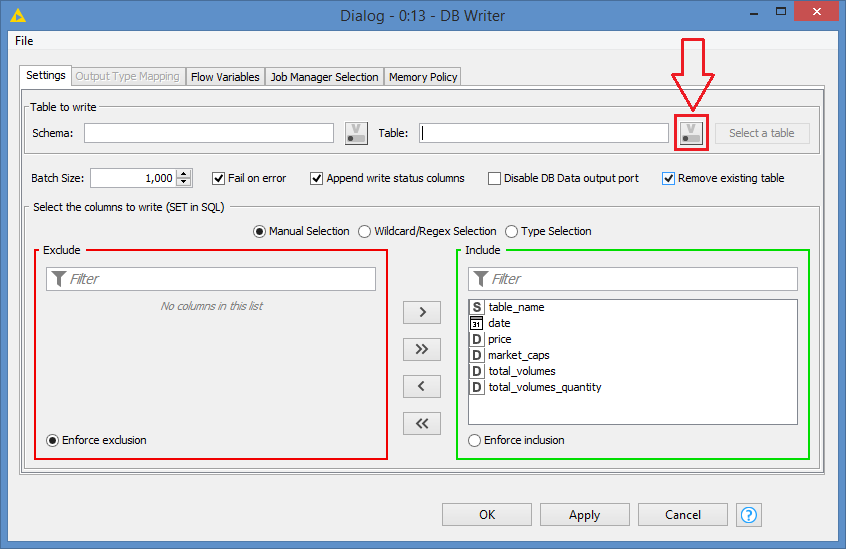

- Open the DB Writer (Node 13)

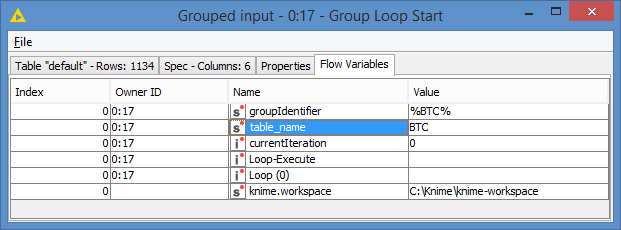

Note: the Group Loop exposes the value of the current group, which you can confirm by looking at its flow variables:

In the DB Writer node, click on this button:

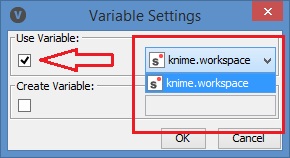

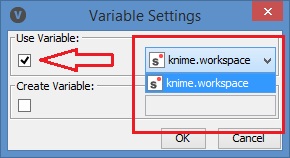

This window will pop up:

Check the box as shown in the image, then choose “table_name” from the dropdown. (This is the part I cannot do on my side: I don’t see the variables because I don’t have a valid connection. You should see them if you executed steps 1 and 2.)

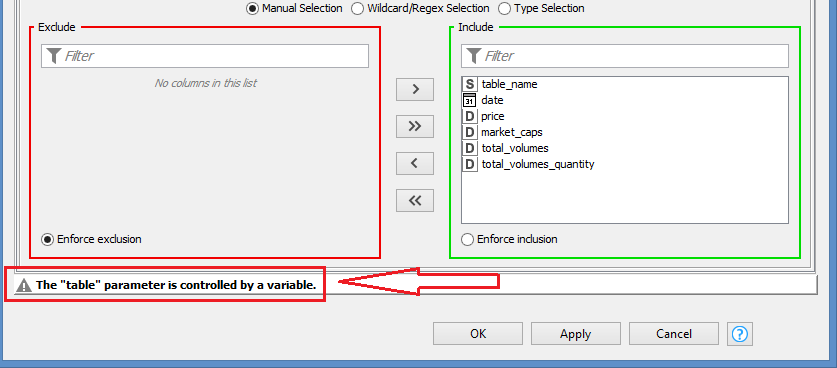

- Click OK. You are back in the DB Writer and should see this message at the bottom:

- Click OK, and you’re all set

- Execute the rest of the loop by running the Loop End (Node 18)

Expected result: the first group of data is written to table BTC and the second to table ETH, as defined in the table_name column of your table.
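For readers who think better in code, the same pattern (prepare the data first, open the DB connection only afterwards, then write each group to a table named after its table_name value) might look roughly like this Python sketch. It is an analogy, not what the DB Writer does internally: it uses an in-memory SQLite database, made-up rows, and hypothetical column names (`ts`, `price`) and helpers (`quote_ident`, `write_groups`).

```python
import sqlite3
from itertools import groupby
from operator import itemgetter

# Hypothetical rows, standing in for the output of the KNIME data operations
# (GET Request, Ungroup, ...). Only table_name comes from the original workflow.
rows = [
    {"table_name": "BTC", "ts": "2021-01-01", "price": 29000.0},
    {"table_name": "ETH", "ts": "2021-01-01", "price": 730.0},
    {"table_name": "BTC", "ts": "2021-01-02", "price": 29400.0},
]


def quote_ident(name):
    # Table names cannot be bound as SQL parameters, so quote them as identifiers.
    return '"' + name.replace('"', '""') + '"'


def write_groups(conn, rows):
    key = itemgetter("table_name")
    # Like the Group Loop: one iteration per distinct table_name value.
    for table, group in groupby(sorted(rows, key=key), key=key):
        ident = quote_ident(table)
        conn.execute(f"CREATE TABLE IF NOT EXISTS {ident} (ts TEXT, price REAL)")
        conn.executemany(
            f"INSERT INTO {ident} (ts, price) VALUES (?, ?)",
            [(r["ts"], r["price"]) for r in group],
        )
    conn.commit()


# Open the connection only now, after the data is ready.
conn = sqlite3.connect(":memory:")
write_groups(conn, rows)
print(conn.execute('SELECT COUNT(*) FROM "BTC"').fetchone()[0])  # 2
print(conn.execute('SELECT COUNT(*) FROM "ETH"').fetchone()[0])  # 1
```

Connecting at the last moment plays the same role as chaining the DB Connector after Node 5: nothing holds a connection open while the slow data preparation runs.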

Here’s the workflow: Export_to_DB_Bruno.knwf (36.6 KB)