Hi @nba , I confess from your post that I’m not entirely sure what you mean by migrating a workflow to a database. It feels like you are trying to treat KNIME like it is a database, but presumably you have a database available already that you are connecting KNIME to.

Yes you can create database tables “on the fly” and alter them (add columns as you go) etc, but that isn’t generally considered good practice.

I didn’t understand question 1. You wouldn’t generally create columns in a database as you are processing. The database is there as a source or destination for your data. Yes you can update, but it would be very inefficient if you had a workflow that created a database table, and then tried to create and populate whole new columns with the results of calculations as it went along. I’d have to better understand your use case to be able to give any kind of satisfactory answer to what you are thinking of here.

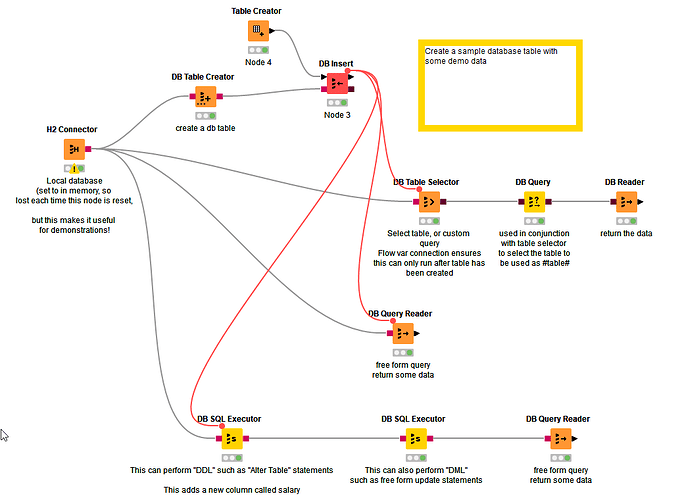

Re Q2, the DB Query node is used in conjunction with a DB Table Selector node. The DB Table Selector node allows you to choose a pre-existing table from the database (or can take a “custom query”) and the resultant table from that node is referenced using the the #table# placeholder. DB Query is one of the “in database” nodes, which has the brown connectors. “In-database” is a slightly misleading term as these nodes are not actually running “inside” the database, but it is a useful analogy because they work together with other “in database” nodes, and don’t return data to KNIME until they are asked to by a node such as “db reader”, which then has a black-triangle connector to return the results.

So in simple terms, to use a “DB Query” node, you put a “DB Table Selector” in front of it and a DB Reader" after it.

The non-“in database” equivalent is the DB Query Reader which just runs a query against the database and returns a result, which sounds more like what you are wanting to do at this point.

As for Q3, the ALTER TABLE statement you have to add a new column to a database table is what is known as “DDL” (data definition language), in contrast to “DML” which is “Data Manipulation Language”.

DML in general database terms covers the statements as Select, Update, Insert and Delete. But actually, it is better to think of “Select” as DQL “Data Query Language” as it doesn’t modify anything, and then treat Update, Insert and Delete as DML, because they do modify your data,

DDL pretty much covers everything else “Create Table”, “Alter Table”, “Drop Table” etc.

If you think of it in those terms, then DQL (“select”) is what you can put it in a DB Query or DB Query Reader node.

If you are running anything else DDL/DML, you will want to put such statements into a DB SQL Executor node, rather than into a query node.

I have attached a workflow to demonstrate some of the different uses of various DB nodes mentioned above. I hope you find it useful to gain a basic understanding of how those nodes operate.

I’ve adjusted it to include flow variable connectors from the DB Insert statement. This ensures that subsequent nodes can only be executed after the demo database table “EXAMPLE” has been created and populated with some sample data.

I think though that from your questions you have a few misconceptions about how you would work with a database. Feel free to ask further questions but I feel you need to take a step back and better explain your use case because it doesn’t feel to me like the solution you are trying to achieve is a good answer to whatever the question is

DB demo.knwf (29.9 KB)