Hi Bjorn,

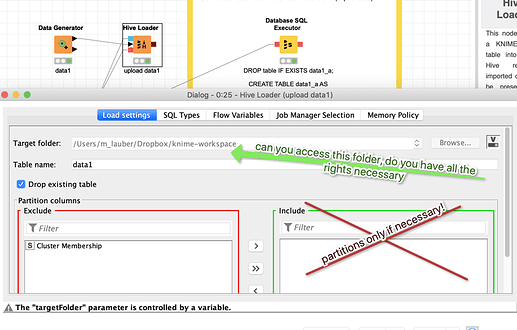

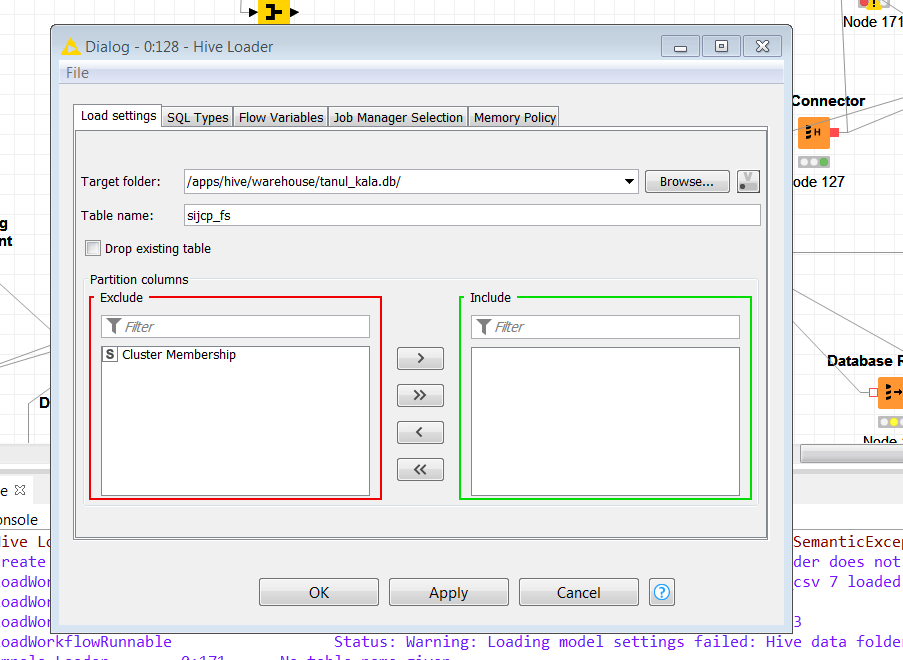

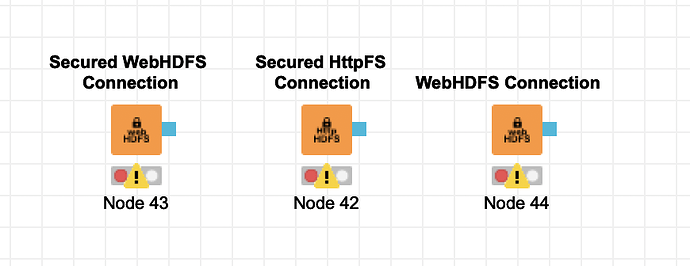

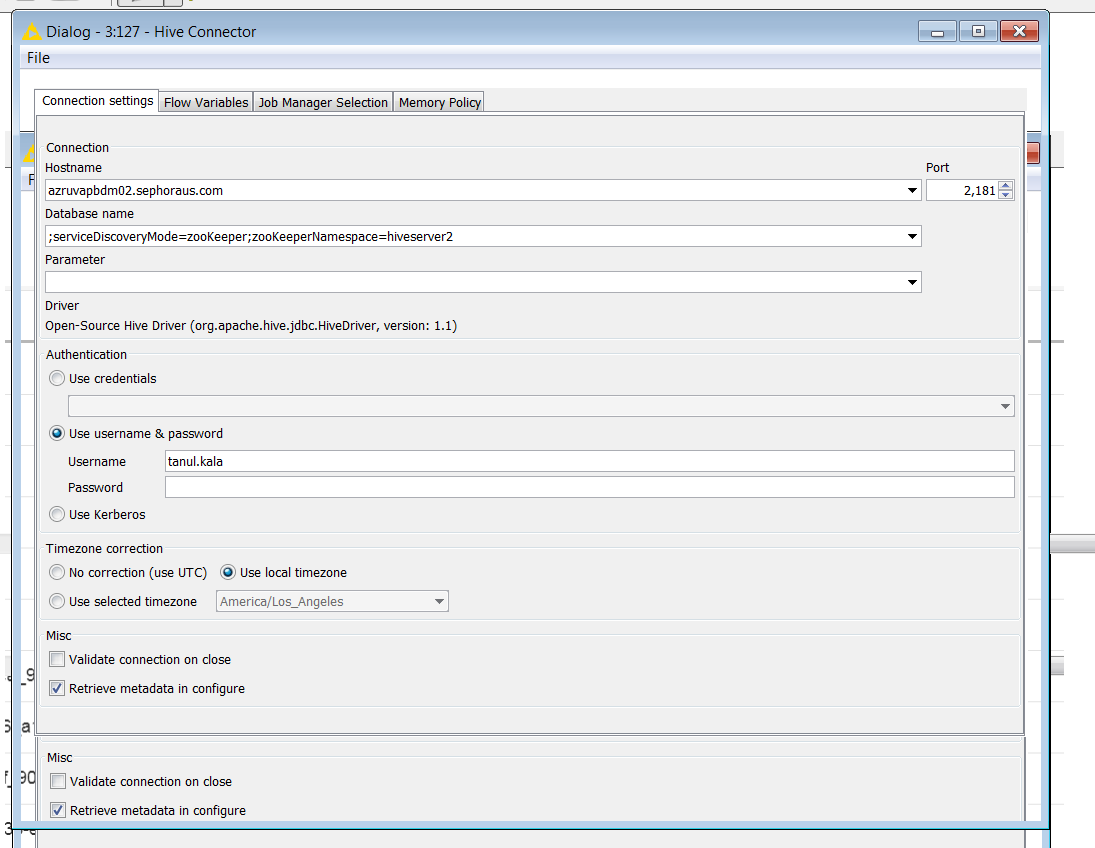

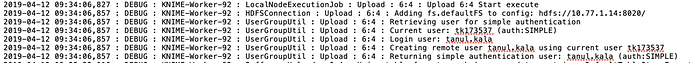

I am experiencing a similar problem. I'm trying to load data into Hive using the Hive Loader node, but it only creates a file in the HDFS/HttpFS location: no table is created, and the connection times out. Below is the KNIME log:

```
2019-04-09 13:54:01,652 : DEBUG : main : ExecuteAction : : : Creating execution job for 1 node(s)...
2019-04-09 13:54:01,652 : DEBUG : main : NodeContainer : : : Hive Loader 0:128 has new state: CONFIGURED_MARKEDFOREXEC
2019-04-09 13:54:01,652 : DEBUG : main : NodeContainer : : : Hive Loader 0:128 has new state: CONFIGURED_QUEUED
2019-04-09 13:54:01,662 : DEBUG : main : NodeContainer : : : KNIME_project 0 has new state: EXECUTING
2019-04-09 13:54:01,669 : DEBUG : KNIME-WFM-Parent-Notifier : NodeContainer : : : ROOT has new state: EXECUTING
2019-04-09 13:54:01,670 : DEBUG : KNIME-Worker-8 : WorkflowManager : Hive Loader : 0:128 : Hive Loader 0:128 doBeforePreExecution
2019-04-09 13:54:01,670 : DEBUG : KNIME-Worker-8 : NodeContainer : Hive Loader : 0:128 : Hive Loader 0:128 has new state: PREEXECUTE
2019-04-09 13:54:01,670 : DEBUG : KNIME-Worker-8 : WorkflowManager : Hive Loader : 0:128 : Hive Loader 0:128 doBeforeExecution
2019-04-09 13:54:01,670 : DEBUG : KNIME-Worker-8 : NodeContainer : Hive Loader : 0:128 : Hive Loader 0:128 has new state: EXECUTING
2019-04-09 13:54:01,670 : DEBUG : KNIME-Worker-8 : WorkflowDataRepository : Hive Loader : 0:128 : Adding handler 386db7b8-a113-4131-8b21-686de640d906 (Hive Loader 0:128: ) - 2 in total
2019-04-09 13:54:01,670 : DEBUG : KNIME-Worker-8 : LocalNodeExecutionJob : Hive Loader : 0:128 : Hive Loader 0:128 Start execute
2019-04-09 13:54:01,898 : DEBUG : KNIME-Worker-8 : AbstractLoaderNodeModel : Hive Loader : 0:128 : Start writing KNIME table to temporary file C:\Users\tk173537\AppData\Local\Temp\knime_KNIME_project27443\hive-importc3cd3bf4_0a9b_483b_b19c_8575299a1e815218807826069089374.csv
2019-04-09 13:54:01,944 : DEBUG : KNIME-Worker-8 : AbstractLoaderNodeModel : Hive Loader : 0:128 : Table structure name=default,columns=[sas_id]
2019-04-09 13:54:01,944 : DEBUG : KNIME-Worker-8 : AbstractLoaderNodeModel : Hive Loader : 0:128 : No of rows to write 100.0
2019-04-09 13:54:02,027 : DEBUG : KNIME-Worker-8 : AbstractTableStoreReader : Hive Loader : 0:128 : Opening input stream on file "C:\Users\tk173537\AppData\Local\Temp\knime_KNIME_project27443\knime_container_20190409_2498813877045765933.tmp", 0 open streams
2019-04-09 13:54:02,056 : DEBUG : KNIME-Worker-8 : AbstractTableStoreReader : Hive Loader : 0:128 : Closing input stream on "C:\Users\tk173537\AppData\Local\Temp\knime_KNIME_project27443\knime_container_20190409_2498813877045765933.tmp", 0 remaining
2019-04-09 13:54:02,056 : DEBUG : KNIME-Worker-8 : AbstractLoaderNodeModel : Hive Loader : 0:128 : Temporary file successful created at C:\Users\tk173537\AppData\Local\Temp\knime_KNIME_project27443\hive-importc3cd3bf4_0a9b_483b_b19c_8575299a1e815218807826069089374.csv
2019-04-09 13:54:02,056 : DEBUG : KNIME-Worker-8 : HiveLoader : Hive Loader : 0:128 : Uploading local file C:\Users\tk173537\AppData\Local\Temp\knime_KNIME_project27443\hive-importc3cd3bf4_0a9b_483b_b19c_8575299a1e815218807826069089374.csv
```

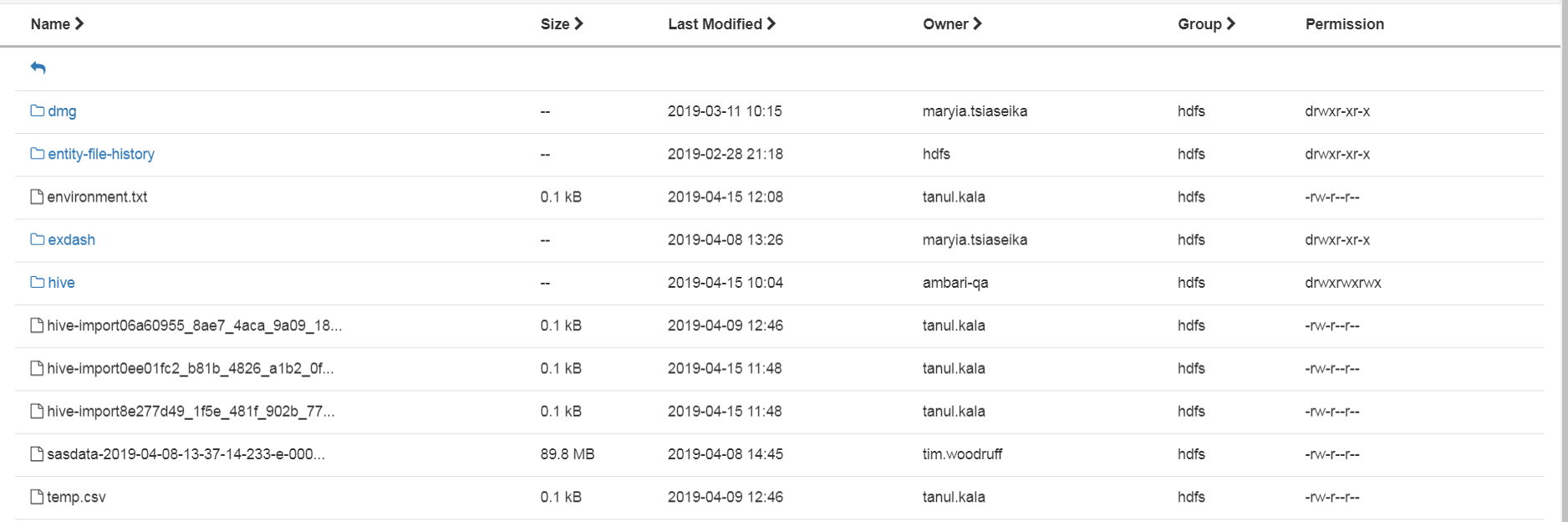

```
2019-04-09 13:54:02,057 : DEBUG : KNIME-Worker-8 : HiveLoader : Hive Loader : 0:128 : Create remote folder with URI httpfs://tanul.kala@azruvapbdm02.sephoraus.com:50070/apps/hive/warehouse/tanul_kala.db/
2019-04-09 13:54:02,128 : DEBUG : KNIME-Worker-8 : HDFSConnection : Hive Loader : 0:128 : Adding fs.defaultFS to config: webhdfs://azruvapbdm02.sephoraus.com:50070/
2019-04-09 13:54:02,129 : DEBUG : KNIME-Worker-8 : UserGroupUtil : Hive Loader : 0:128 : Retrieving user for simple authentication
2019-04-09 13:54:02,129 : DEBUG : KNIME-Worker-8 : UserGroupUtil : Hive Loader : 0:128 : Current user: tk173537 (auth:SIMPLE)
2019-04-09 13:54:02,129 : DEBUG : KNIME-Worker-8 : UserGroupUtil : Hive Loader : 0:128 : Login user: tanul.kala
2019-04-09 13:54:02,129 : DEBUG : KNIME-Worker-8 : UserGroupUtil : Hive Loader : 0:128 : Creating remote user tanul.kala using current user tk173537
2019-04-09 13:54:02,129 : DEBUG : KNIME-Worker-8 : UserGroupUtil : Hive Loader : 0:128 : Returning simple authentication user: tanul.kala (auth:SIMPLE)
2019-04-09 13:54:02,320 : DEBUG : KNIME-Worker-8 : HiveLoader : Hive Loader : 0:128 : Remote folder created
2019-04-09 13:54:02,320 : DEBUG : KNIME-Worker-8 : HiveLoader : Hive Loader : 0:128 : Create remote file with URI httpfs://tanul.kala@azruvapbdm02.sephoraus.com:50070/apps/hive/warehouse/tanul_kala.db/hive-importc3cd3bf4_0a9b_483b_b19c_8575299a1e815218807826069089374.csv
2019-04-09 13:54:02,320 : DEBUG : KNIME-Worker-8 : HiveLoader : Hive Loader : 0:128 : Remote file created. Start writing file content...
2019-04-09 13:54:14,970 : DEBUG : main : NodeContainerEditPart : : : Hive Loader 0:128 (EXECUTING)
2019-04-09 13:54:14,970 : DEBUG : main : NodeContainerEditPart : : : HttpFS Connection 0:167 (EXECUTED)
2019-04-09 13:54:17,041 : DEBUG : main : NodeContainerEditPart : : : HttpFS Connection 0:167 (EXECUTED)
2019-04-09 13:54:17,042 : DEBUG : main : NodeContainerEditPart : : : WebHDFS Connection 0:148 (EXECUTED)
2019-04-09 13:54:23,468 : DEBUG : KNIME-Worker-8 : Node : Hive Loader : 0:128 : reset
2019-04-09 13:54:23,468 : ERROR : KNIME-Worker-8 : Node : Hive Loader : 0:128 : Execute failed: azruvapbdd26:50075: Connection timed out: connect
2019-04-09 13:54:23,470 : DEBUG : KNIME-Worker-8 : Node : Hive Loader : 0:128 : Execute failed: azruvapbdd26:50075: Connection timed out: connect
java.net.ConnectException: azruvapbdd26:50075: Connection timed out: connect
	at java.net.DualStackPlainSocketImpl.waitForConnect(Native Method)
	at java.net.DualStackPlainSocketImpl.socketConnect(DualStackPlainSocketImpl.java:85)
	at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)
	at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)
	at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)
	at java.net.PlainSocketImpl.connect(PlainSocketImpl.java:172)
	at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)
	at java.net.Socket.connect(Socket.java:589)
	at sun.net.NetworkClient.doConnect(NetworkClient.java:175)
	at sun.net.www.http.HttpClient.openServer(HttpClient.java:463)
	at sun.net.www.http.HttpClient.openServer(HttpClient.java:558)
	at sun.net.www.http.HttpClient.<init>(HttpClient.java:242)
	at sun.net.www.http.HttpClient.New(HttpClient.java:339)
	at sun.net.www.http.HttpClient.New(HttpClient.java:357)
	at sun.net.www.protocol.http.HttpURLConnection.getNewHttpClient(HttpURLConnection.java:1220)
	at sun.net.www.protocol.http.HttpURLConnection.plainConnect0(HttpURLConnection.java:1156)
	at sun.net.www.protocol.http.HttpURLConnection.plainConnect(HttpURLConnection.java:1050)
	at sun.net.www.protocol.http.HttpURLConnection.connect(HttpURLConnection.java:984)
	at org.apache.hadoop.hdfs.web.WebHdfsFileSystem$AbstractRunner.connect(WebHdfsFileSystem.java:597)
	at org.apache.hadoop.hdfs.web.WebHdfsFileSystem$AbstractRunner.connect(WebHdfsFileSystem.java:554)
	at org.apache.hadoop.hdfs.web.WebHdfsFileSystem$AbstractRunner.runWithRetry(WebHdfsFileSystem.java:622)
	at org.apache.hadoop.hdfs.web.WebHdfsFileSystem$AbstractRunner.access$100(WebHdfsFileSystem.java:472)
	at org.apache.hadoop.hdfs.web.WebHdfsFileSystem$AbstractRunner$1.run(WebHdfsFileSystem.java:502)
	at java.security.AccessController.doPrivileged(Native Method)
	at javax.security.auth.Subject.doAs(Subject.java:422)
	at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1758)
	at org.apache.hadoop.hdfs.web.WebHdfsFileSystem$AbstractRunner.run(WebHdfsFileSystem.java:498)
	at org.apache.hadoop.hdfs.web.WebHdfsFileSystem.create(WebHdfsFileSystem.java:1186)
	at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:910)
	at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:891)
	at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:788)
	at org.knime.bigdata.hdfs.filehandler.HDFSConnection.create(HDFSConnection.java:315)
	at org.knime.bigdata.hdfs.filehandler.HDFSRemoteFile.openOutputStream(HDFSRemoteFile.java:115)
	at org.knime.base.filehandling.remote.files.RemoteFile.write(RemoteFile.java:342)
	at org.knime.bigdata.hive.utility.HiveLoader.uploadFile(HiveLoader.java:93)
	at org.knime.bigdata.hive.utility.AbstractLoaderNodeModel.execute(AbstractLoaderNodeModel.java:267)
	at org.knime.core.node.NodeModel.executeModel(NodeModel.java:567)
	at org.knime.core.node.Node.invokeFullyNodeModelExecute(Node.java:1186)
	at org.knime.core.node.Node.execute(Node.java:973)
	at org.knime.core.node.workflow.NativeNodeContainer.performExecuteNode(NativeNodeContainer.java:559)
	at org.knime.core.node.exec.LocalNodeExecutionJob.mainExecute(LocalNodeExecutionJob.java:95)
	at org.knime.core.node.workflow.NodeExecutionJob.internalRun(NodeExecutionJob.java:179)
	at org.knime.core.node.workflow.NodeExecutionJob.run(NodeExecutionJob.java:110)
	at org.knime.core.util.ThreadUtils$RunnableWithContextImpl.runWithContext(ThreadUtils.java:328)
	at org.knime.core.util.ThreadUtils$RunnableWithContext.run(ThreadUtils.java:204)
	at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
	at java.util.concurrent.FutureTask.run(FutureTask.java:266)
	at org.knime.core.util.ThreadPool$MyFuture.run(ThreadPool.java:123)
	at org.knime.core.util.ThreadPool$Worker.run(ThreadPool.java:246)
2019-04-09 13:54:23,471 : DEBUG : KNIME-Worker-8 : WorkflowManager : Hive Loader : 0:128 : Hive Loader 0:128 doBeforePostExecution
2019-04-09 13:54:23,471 : DEBUG : KNIME-Worker-8 : NodeContainer : Hive Loader : 0:128 : Hive Loader 0:128 has new state: POSTEXECUTE
2019-04-09 13:54:23,471 : DEBUG : KNIME-Worker-8 : WorkflowManager : Hive Loader : 0:128 : Hive Loader 0:128 doAfterExecute - failure
2019-04-09 13:54:23,472 : DEBUG : KNIME-Worker-8 : Node : Hive Loader : 0:128 : reset
2019-04-09 13:54:23,472 : DEBUG : KNIME-Worker-8 : Node : Hive Loader : 0:128 : clean output ports.
2019-04-09 13:54:23,472 : DEBUG : KNIME-Worker-8 : WorkflowDataRepository : Hive Loader : 0:128 : Removing handler 386db7b8-a113-4131-8b21-686de640d906 (Hive Loader 0:128: ) - 1 remaining
2019-04-09 13:54:23,472 : DEBUG : KNIME-Worker-8 : NodeContainer : Hive Loader : 0:128 : Hive Loader 0:128 has new state: IDLE
2019-04-09 13:54:23,493 : DEBUG : KNIME-Worker-8 : Node : Hive Loader : 0:128 : Configure succeeded. (Hive Loader)
2019-04-09 13:54:23,494 : DEBUG : KNIME-Worker-8 : NodeContainer : Hive Loader : 0:128 : Hive Loader 0:128 has new state: CONFIGURED
2019-04-09 13:54:23,494 : DEBUG : KNIME-Worker-8 : NodeContainer : Hive Loader : 0:128 : KNIME_project 0 has new state: IDLE
2019-04-09 13:54:23,494 : DEBUG : KNIME-WFM-Parent-Notifier : NodeContainer : : : ROOT has new state: IDLE
```
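
For context, the stack trace shows the failure inside `WebHdfsFileSystem.create`, while connecting to `azruvapbdd26:50075` rather than to the host in the connection settings (`azruvapbdm02.sephoraus.com:50070`). With WebHDFS, a file is written in two steps: the client sends `PUT /webhdfs/v1/<path>?op=CREATE` to the NameNode, which replies with a `307` redirect whose `Location` header names a DataNode, and the file content is then sent to that DataNode. Below is a minimal standalone diagnostic sketch, not part of KNIME: the class `WebHdfsWriteCheck` and its methods are hypothetical names chosen for illustration. It assumes plain HTTP and simple authentication via the `user.name` query parameter (as in the log above), performs only the first step with an empty body, prints the redirect target, and checks whether this machine can open a TCP connection to it.

```java
import java.io.IOException;
import java.io.UnsupportedEncodingException;
import java.net.HttpURLConnection;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.net.URI;
import java.net.URL;
import java.net.URLEncoder;

// Hypothetical diagnostic: where does the NameNode redirect a WebHDFS write, and is it reachable?
public class WebHdfsWriteCheck {

    // Step 1 of a WebHDFS CREATE goes to the NameNode; assumes plain HTTP and simple auth (user.name).
    static String createUrl(String nameNodeHost, int port, String hdfsPath, String user) {
        try {
            return "http://" + nameNodeHost + ":" + port + "/webhdfs/v1" + hdfsPath
                    + "?op=CREATE&overwrite=false&user.name=" + URLEncoder.encode(user, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException(e); // UTF-8 is always available
        }
    }

    // Parses host and port from the Location header of the NameNode's redirect (no DNS lookup here).
    static InetSocketAddress redirectTarget(String location) {
        URI uri = URI.create(location);
        int port = uri.getPort() != -1 ? uri.getPort() : 80; // default HTTP port if none is given
        return InetSocketAddress.createUnresolved(uri.getHost(), port);
    }

    // Returns true if a plain TCP connection to host:port succeeds within the timeout.
    static boolean canConnect(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis); // resolves host here
            return true;
        } catch (IOException e) { // unknown host, refused connection, or timeout
            return false;
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 4) {
            System.err.println("usage: WebHdfsWriteCheck <namenode-host> <port> <hdfs-path> <user>");
            return;
        }
        URL url = new URL(createUrl(args[0], Integer.parseInt(args[1]), args[2], args[3]));
        HttpURLConnection conn = (HttpURLConnection) url.openConnection();
        conn.setRequestMethod("PUT");
        conn.setInstanceFollowRedirects(false); // inspect the redirect instead of following it
        conn.setConnectTimeout(10_000);
        conn.setReadTimeout(10_000);
        conn.setDoOutput(true);
        conn.getOutputStream().close(); // empty request body: no file content is sent in step 1
        int status = conn.getResponseCode();
        String location = conn.getHeaderField("Location");
        conn.disconnect();

        System.out.println("NameNode answered HTTP " + status);
        if (location == null) {
            System.out.println("No redirect returned; check host, port, path and user.");
            return;
        }
        InetSocketAddress target = redirectTarget(location);
        System.out.println("File content would be sent to " + target.getHostString() + ":" + target.getPort());
        System.out.println("TCP reachable from this machine: "
                + canConnect(target.getHostString(), target.getPort(), 10_000));
    }
}
```

For example, `java WebHdfsWriteCheck azruvapbdm02.sephoraus.com 50070 /tmp/knime-write-test.csv tanul.kala` (the HDFS path here is only a placeholder). If the printed DataNode address is not reachable from the machine running KNIME, that would be consistent with the timeout in the log.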

-Tanul