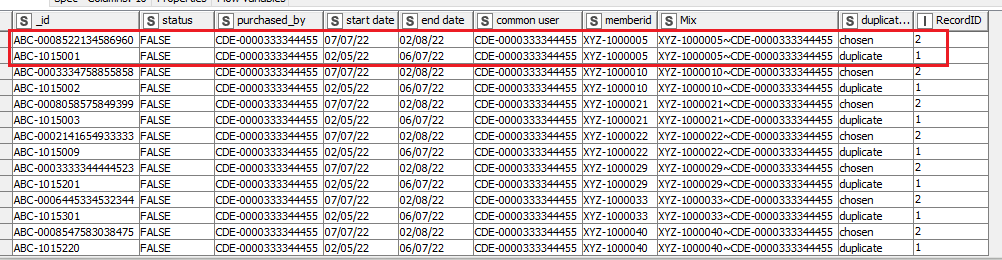

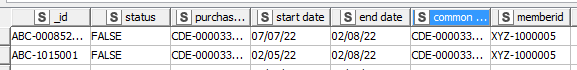

From looking at your original post, it looks like you are trying to do something like “if a row is missing RecordID, increment the RecordID from the previous member of the same group by one, otherwise use 1.”

If so, one of the problems may be that using the row offset in column expression node only returns values from the input table, it won’t return a new column or updated value that happened in the node itself.

Given an input table like this:

| Ordering |

Group |

RecordID |

| 1 |

A |

1 |

| 2 |

A |

2 |

| 3 |

A |

? |

| 4 |

A |

? |

| 5 |

B |

3 |

| 6 |

B |

1 |

| 7 |

B |

? |

| 8 |

C |

5 |

| 9 |

C |

6 |

| 10 |

C |

? |

| 11 |

C |

? |

| 12 |

D |

7 |

| 13 |

D |

? |

| 14 |

D |

? |

| 15 |

E |

? |

One possiblity would be to update the RecordID with a column expression like:

if (column(“RecordID”)==missingValue())

{

if (column(“Group”)!=column(“Group”,-1))

{1}

else

{

if(column(“RecordID”,-1)!=missingValue())

{column(“RecordID”,-1)+1}

}

}

else

{column(“RecordID”)}

Assuming your input is sorted by group, this will return the existing RecordID if there is one. If there isn’t it will return a 1 if it’s the first item in a group, otherwise it will increment the previous row’s RecordID.

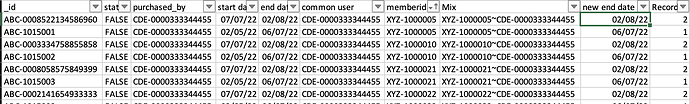

The only problem is that if there are multiple missing values, one after the other, it will only fill the first one. But you can use a recursive loop to run the same column expression node repeatedly until every value is filled in. It’s still using a loop, but I’m pretty sure it will be faster than a group loop.

This is what I ended up with:

| Ordering |

Group |

RecordID |

| 1 |

A |

1 |

| 2 |

A |

2 |

| 3 |

A |

3 |

| 4 |

A |

4 |

| 5 |

B |

3 |

| 6 |

B |

1 |

| 7 |

B |

2 |

| 8 |

C |

5 |

| 9 |

C |

6 |

| 10 |

C |

7 |

| 11 |

C |

8 |

| 12 |

D |

7 |

| 13 |

D |

8 |

| 14 |

D |

9 |

| 15 |

E |

1 |

All the missing RecordID values were filled in after 2 iterations.

You may be able to use a similar approach to make other formulae work.

Depending on what you are trying to do, a Rank node might also be useful. It would let you enumerate items within a group, and you could then perform calculations using those numbers.