@fath1 first, I think you will have to sort out the issue with your test and training data. Something seems strange there.

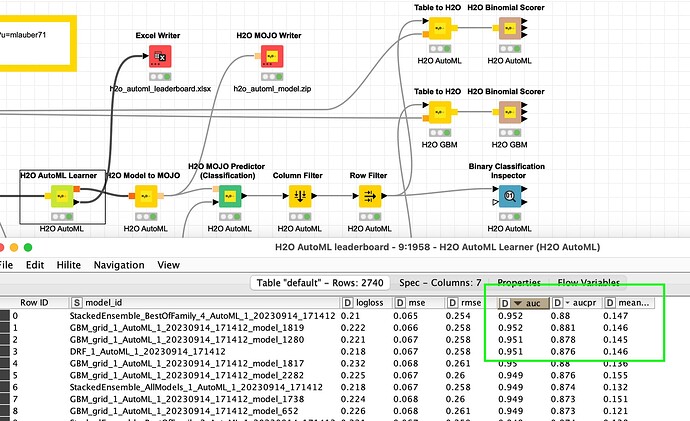

When you use just the training data, split it, and train a model, you get quite decent values with GBM models. Since this data is unbalanced, you might opt for AUCPR as the deciding metric if you want to focus on the top group of Active=1.

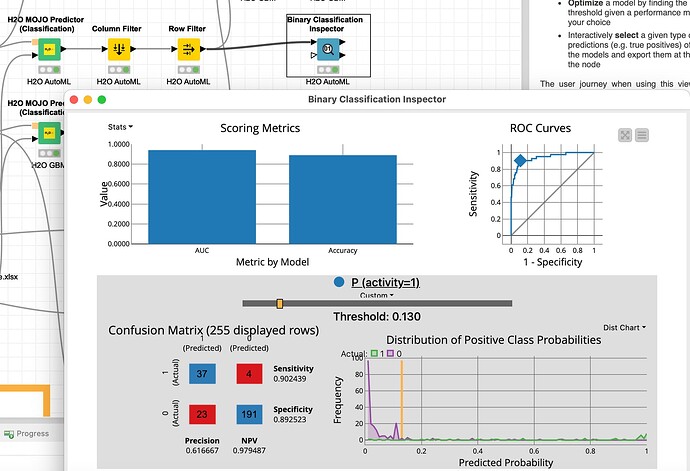
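To illustrate why AUCPR is a useful yardstick on unbalanced data, here is a minimal sketch in plain Python. It uses average precision as a common stand-in for the area under the precision-recall curve and compares it with the share of positives, which is roughly what a random ranking would achieve. The labels and scores are made-up toy values, not taken from your data, and ties between scores are not treated specially.

```python
def average_precision(y_true, scores):
    """Mean of the precision values measured at the rank of each true positive."""
    # Rank rows from highest to lowest score
    ranked = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)
    hits = 0
    precision_sum = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precision_sum += hits / rank  # precision among the top `rank` rows
    return precision_sum / hits if hits else 0.0


# Hypothetical toy data: 2 positives (Active=1) among 10 rows
y_true = [1, 0, 1, 0, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05]

ap = average_precision(y_true, scores)
baseline = sum(y_true) / len(y_true)  # share of positives in the data

print(f"average precision: {ap:.3f}, baseline: {baseline:.3f}")
```

The further the value sits above the baseline, the better the model is at pushing the Active=1 cases to the top of the ranking.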

You will have to think about what precision you are willing to accept and where to put the threshold. This very much depends on what happens to the wrongly classified cases. In this example: what would it mean if the 23 cases that the model considers to be 1 were in fact 0 and you had acted upon that decision?

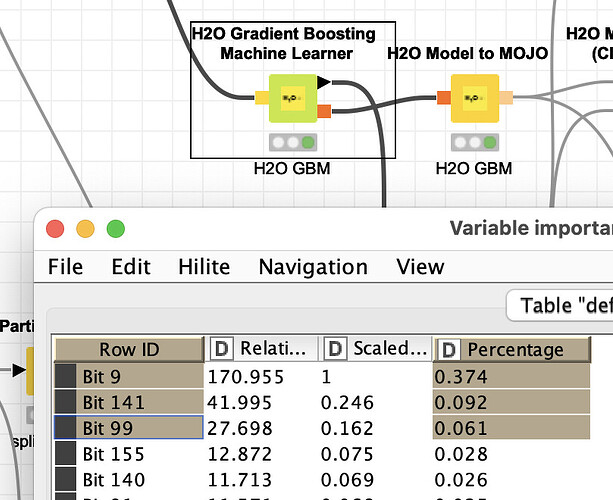
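The threshold question can be explored with a small sweep. The sketch below, in plain Python with made-up toy labels and scores, counts true and false positives at each candidate threshold and returns the lowest threshold that still meets a minimum precision (which keeps as many true positives as possible). The function names and the 0.75 precision floor are my own assumptions for illustration.

```python
def confusion_at(y_true, scores, threshold):
    """Return (tp, fp, fn, tn) when rows with score >= threshold are predicted as 1."""
    tp = fp = fn = tn = 0
    for label, score in zip(y_true, scores):
        predicted = 1 if score >= threshold else 0
        if predicted == 1 and label == 1:
            tp += 1
        elif predicted == 1 and label == 0:
            fp += 1
        elif predicted == 0 and label == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def lowest_threshold_for_precision(y_true, scores, min_precision):
    """Lowest observed score usable as threshold while precision >= min_precision."""
    for threshold in sorted(set(scores)):
        tp, fp, _, _ = confusion_at(y_true, scores, threshold)
        if tp + fp > 0 and tp / (tp + fp) >= min_precision:
            return threshold, tp, fp
    return None  # no threshold reaches the requested precision


# Hypothetical toy data, not taken from the workflow
y_true = [1, 1, 0, 1, 0, 0, 1, 0, 0, 0]
scores = [0.95, 0.9, 0.85, 0.8, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1]

result = lowest_threshold_for_precision(y_true, scores, min_precision=0.75)
print(result)
```

The false-positive count returned alongside the threshold is the number to discuss with whoever bears the cost of acting on a wrong prediction.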

Then you might want to take a look at the data itself. I have no idea about the (business/scientific) domain of the case. In a quick GBM model, three “Bits” account for a significant share of the ‘explaining’ power. You might want to check with domain experts whether this makes sense. “Bit 9” is very prominent.

Since you have some 150+ variables, you might think about some data engineering and data preparation, maybe bringing the number down to half of them. I have written an article about how to do that automatically with the help of vtreat, but there are other techniques for dimensionality reduction (thread 1, thread 2).
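As a very simple first pass before any of those techniques, one could drop columns that carry (almost) no information. The sketch below is plain Python and is not what vtreat does; it only removes near-constant columns and exact duplicates among 0/1 columns. The function name, the 1% cutoff, and the toy columns are assumptions for illustration.

```python
def drop_uninformative_columns(columns, min_minority_share=0.01):
    """columns: dict mapping column name -> list of 0/1 values. Returns names to keep."""
    kept = []
    seen_signatures = set()
    for name, values in columns.items():
        share_of_ones = sum(values) / len(values)
        minority_share = min(share_of_ones, 1 - share_of_ones)
        if minority_share < min_minority_share:
            continue  # (near-)constant column
        signature = tuple(values)
        if signature in seen_signatures:
            continue  # exact duplicate of a column already kept
        seen_signatures.add(signature)
        kept.append(name)
    return kept


# Hypothetical toy columns, not taken from the real data
toy = {
    "Bit1": [0, 1, 0, 1],
    "Bit2": [0, 0, 0, 0],  # constant
    "Bit3": [0, 1, 0, 1],  # duplicate of Bit1
    "Bit4": [1, 1, 0, 0],
}

kept = drop_uninformative_columns(toy)
print(kept)
```

This will not halve 150+ variables on its own, but it is cheap and makes the later, heavier reduction steps easier to interpret.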

More ideas being discussed here:

The workflow used with your data is here: