Hello KNIMErs,

Here is my solution to #justknimeit-17:

KNIME Hub > gonhaddock > Spaces > Just_KNIME_It > Just KNIME It _ Challenge 017

Although I’m still trying to catch up with the challenges, I once again enjoyed taking this one on.

Aiming to simplify the analytical approach, I just filtered the dataset for the year-end closing prices (that is, keeping only the December rows); some more work could be done to reduce the gaps…

Afterwards, to normalize the observations and avoid pricing bias, I computed the yearly variation (versus the previous year) as a percentage.
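For anyone who prefers to read the idea as code, the two steps above can be sketched in plain Python. This is not the KNIME workflow itself, just a minimal illustration: the row layout `(region, year, month, price)`, the function name `yearly_pct_change` and the sample values are all assumptions made up for the example.

```python
from collections import defaultdict

# Hypothetical rows: (region, year, month, price). Values are invented.
rows = [
    ("Region A", 2004, 11, 98.0),
    ("Region A", 2004, 12, 100.0),
    ("Region A", 2005, 12, 110.0),
    ("Region A", 2006, 12, 99.0),
    ("Region B", 2004, 12, 200.0),
    ("Region B", 2005, 6, 205.0),
    ("Region B", 2005, 12, 210.0),
]


def yearly_pct_change(rows):
    """Keep December rows only, then compute the % change versus the previous year."""
    december = defaultdict(dict)  # region -> {year: December price}
    for region, year, month, price in rows:
        if month == 12:
            december[region][year] = price

    changes = {}  # (region, year) -> percent change from year - 1
    for region, by_year in december.items():
        for year, price in by_year.items():
            prev = by_year.get(year - 1)
            if prev:  # skips the first year, gaps in the series, and zero prices
                changes[(region, year)] = (price - prev) / prev * 100.0
    return changes


pct = yearly_pct_change(rows)
for key in sorted(pct):
    print(key, round(pct[key], 2))
```

Note that a missing December simply yields no variation for the following year here, so the gaps mentioned above stay visible rather than being interpolated.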

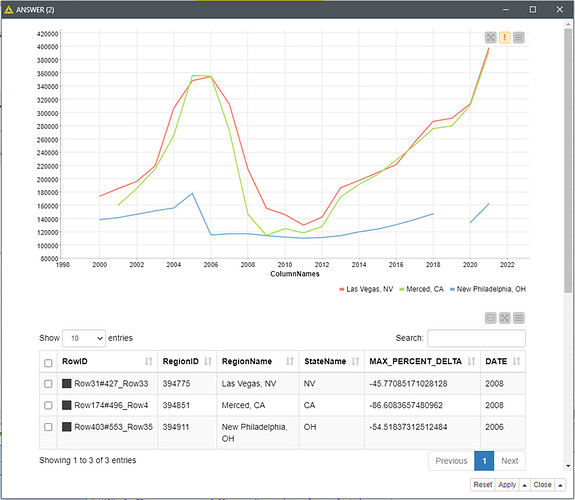

Q2.- The initial max variations gave me a boring answer, with three California regions behaving very similarly. So I compared the max-variation champion of each state instead, which shows more of the flavour of how the different states behave.
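The "champion per state" selection can be sketched roughly like this in Python. Again, this is only an illustration under assumptions: I take "max variation" as the largest positive yearly percentage, I assume the region name ends with the state code (as in "New Philadelphia OH"), and the region names, the numbers and the function name `state_champions` are hypothetical.

```python
# Hypothetical (region, year) -> yearly % variation; the numbers are invented.
variations = {
    ("Town One CA", 2005): 30.0,
    ("Town One CA", 2006): -5.0,
    ("Town Two CA", 2005): 28.0,
    ("Town Three OH", 2005): 12.0,
    ("Town Three OH", 2006): -20.0,
    ("Town Four OH", 2005): 9.0,
}


def state_champions(variations):
    """Return {state: (region, max variation)} for the top region of each state."""
    # Step 1: largest yearly variation per region.
    region_max = {}
    for (region, _year), pct in variations.items():
        if region not in region_max or pct > region_max[region]:
            region_max[region] = pct

    # Step 2: keep only the region with the largest value in each state.
    champions = {}
    for region, pct in region_max.items():
        state = region.rsplit(" ", 1)[-1]  # assumption: name ends with the state code
        if state not in champions or pct > champions[state][1]:
            champions[state] = (region, pct)
    return champions


champions = state_champions(variations)
for state in sorted(champions):
    print(state, champions[state])
```

Picking one region per state avoids the chart being dominated by several near-identical regions from the same state.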

New Philadelphia OH (in blue) was strongly affected by the 2003–2006 house price boom and the subsequent collapse, already in 2006, but it didn’t notice the Lehman Brothers bankruptcy in 2008, probably because of its lower housing prices (see the map in Q3).

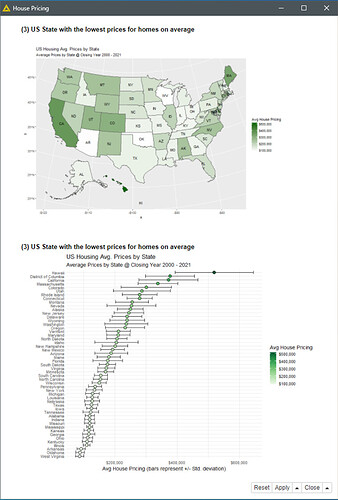

Q3.- To answer the easy question, I took the opportunity to design a very intuitive qualitative dashboard with R. The answer, by the way, based on my data, is West Virginia (WV).

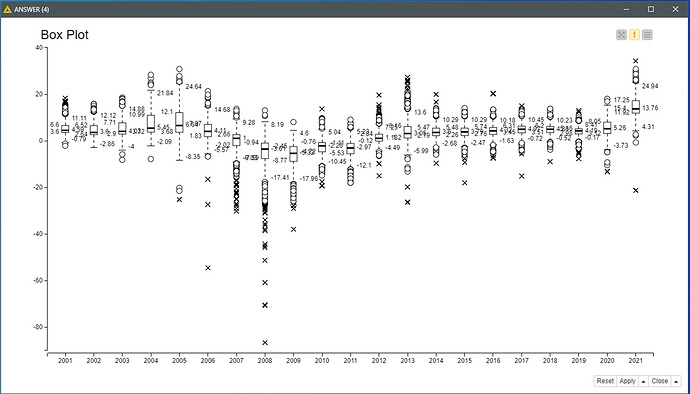

Q4.- Despite it can be explained based in Q2 visualization as suggested in the challenge description; I’ve tried a different busy one with the percent (state max) price evolutions, aiming to highlight the drama.

BR