Hi Armin,

here you go. Let the KNIME drag race begin!

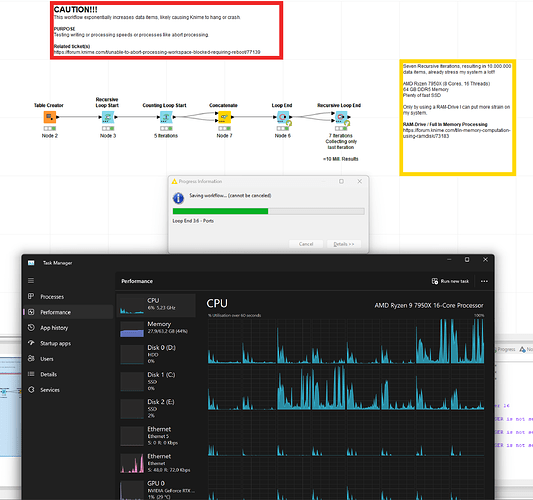

I tested my system's limits, and 10 million data points seems to be where it starts to struggle executing the workflow. Worth mentioning that this performance issue has been raised in other posts before.

Interestingly, though, several posts mention the same seemingly arbitrary “sound barrier” of around 10 million rows. That feels like too much of a coincidence. I do recall, and this dates back several major KNIME versions by now, that handling several tens of millions of rows with hundreds of columns wasn’t a problem even on my old MacBook Pro from 2016 with a mere 16 GB of memory.

To scale the test, increase the count in the recursive loop end for larger steps and the count in the counting loop for finer granularity.

By the way, here are my system and KNIME config. I also tested different Xmx settings, and KNIME copes well with both 2 GB and 50 GB of RAM allocated, showing only marginal performance differences when executing the workflow.

-startup

plugins/org.eclipse.equinox.launcher_1.6.400.v20210924-0641.jar

--launcher.library

plugins/org.eclipse.equinox.launcher.win32.win32.x86_64_1.2.700.v20221108-1024

-vm

plugins/org.knime.binary.jre.win32.x86_64_17.0.5.20221116/jre/bin/server/jvm.dll

--launcher.defaultAction

openFile

-vmargs

-Djava.security.properties=plugins/org.knime.binary.jre.win32.x86_64_17.0.5.20221116/security.properties

-Dorg.apache.cxf.bus.factory=org.knime.cxf.core.fragment.KNIMECXFBusFactory

-Djdk.httpclient.allowRestrictedHeaders=content-length

-Darrow.enable_unsafe_memory_access=true

-Darrow.memory.debug.allocator=false

-Darrow.enable_null_check_for_get=false

--add-opens=java.security.jgss/sun.security.jgss.krb5=ALL-UNNAMED

--add-exports=java.security.jgss/sun.security.jgss=ALL-UNNAMED

--add-exports=java.security.jgss/sun.security.jgss.spi=ALL-UNNAMED

--add-exports=java.security.jgss/sun.security.krb5.internal=ALL-UNNAMED

--add-exports=java.security.jgss/sun.security.krb5=ALL-UNNAMED

--add-opens=java.xml/com.sun.org.apache.xerces.internal.parsers=ALL-UNNAMED

--add-opens=java.xml/com.sun.org.apache.xerces.internal.util=ALL-UNNAMED

-Djdk.httpclient.allowRestrictedHeaders=content-length

-Dorg.apache.cxf.bus.factory=org.knime.cxf.core.fragment.KNIMECXFBusFactory

-Dorg.apache.cxf.transport.http.forceURLConnection=true

-server

-Dsun.java2d.d3d=false

-Dosgi.classloader.lock=classname

-XX:+UnlockDiagnosticVMOptions

-Dsun.net.client.defaultReadTimeout=0

-XX:CompileCommand=exclude,javax/swing/text/GlyphView,getBreakSpot

-Dknime.xml.disable_external_entities=true

-Dcomm.disable_dynamic_service=true

--add-opens=java.base/java.lang=ALL-UNNAMED

--add-opens=java.base/java.lang.invoke=ALL-UNNAMED

--add-opens=java.base/java.net=ALL-UNNAMED

--add-opens=java.base/java.nio=ALL-UNNAMED

--add-opens=java.base/java.nio.channels=ALL-UNNAMED

--add-opens=java.base/java.util=ALL-UNNAMED

--add-opens=java.base/sun.nio.ch=ALL-UNNAMED

--add-opens=java.base/sun.nio=ALL-UNNAMED

--add-opens=java.desktop/javax.swing.plaf.basic=ALL-UNNAMED

--add-opens=java.base/sun.net.www.protocol.http=ALL-UNNAMED

--add-opens=java.base/sun.net.www.protocol.https=ALL-UNNAMED

-Xmx50g

-Dorg.eclipse.swt.browser.IEVersion=11001

-Dsun.awt.noerasebackground=true

-Dequinox.statechange.timeout=30000

-Dorg.knime.container.cellsinmemory=10000000

-Dknime.compress.io=false
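For anyone who wants to compare their own config against mine, here is a minimal sketch that pulls the heap size and the `cellsinmemory` value out of a knime.ini and flags options that appear more than once (my listing above contains a couple of those). It assumes a plain one-option-per-line file; the function name `summarize_knime_ini` and the shortened sample are made up for illustration and are not part of KNIME.

```python
from collections import Counter


def summarize_knime_ini(text):
    """Return heap size, cellsinmemory and duplicated JVM options (hypothetical helper)."""
    # Keep non-empty lines only; one option per line is assumed.
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    # Only look at what follows -vmargs; fall back to all lines if it is missing.
    vmargs = lines[lines.index("-vmargs") + 1:] if "-vmargs" in lines else lines

    xmx = None
    cells_in_memory = None
    for arg in vmargs:
        if arg.startswith("-Xmx"):
            xmx = arg[len("-Xmx"):]  # keep the last value seen
        elif arg.startswith("-Dorg.knime.container.cellsinmemory="):
            cells_in_memory = int(arg.split("=", 1)[1])

    # Options that occur more than once, sorted for stable output.
    duplicates = sorted(arg for arg, count in Counter(vmargs).items() if count > 1)
    return {"xmx": xmx, "cells_in_memory": cells_in_memory, "duplicates": duplicates}


# Shortened excerpt of the config posted above.
sample = """
-startup
plugins/org.eclipse.equinox.launcher_1.6.400.v20210924-0641.jar
-vmargs
-Dorg.apache.cxf.bus.factory=org.knime.cxf.core.fragment.KNIMECXFBusFactory
-Djdk.httpclient.allowRestrictedHeaders=content-length
-Djdk.httpclient.allowRestrictedHeaders=content-length
-Dorg.apache.cxf.bus.factory=org.knime.cxf.core.fragment.KNIMECXFBusFactory
-Xmx50g
-Dorg.knime.container.cellsinmemory=10000000
-Dknime.compress.io=false
"""

summary = summarize_knime_ini(sample)
print(summary)
```

To run it against a real installation, read the file with `open(path).read()` and pass the text in; the path depends on where KNIME is installed.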

- AMD Ryzen 7950X (16 cores, 32 threads)
- 64 GB DDR5 6000 MHz (tCAS-tRCD-tRP-tRAS: 32-38-38-96)
  - Note: Pretty fast memory, which Ryzen needs to not “choke” itself
- Two NVMe SSDs (PCIe x4 16.0 GT/s @ x4 16.0 GT/s), WD Black SN850X, 2 TB each
  - Note: They do not saturate the PCIe Gen 5 lanes directly hooked up to the CPU!
- NVIDIA GeForce RTX 3080 Ti with 12 GB GDDR6X (PCIe v4.0 x16 (16.0 GT/s) @ x16 (5.0 GT/s))
  - Note: Not saturating the PCIe Gen 5 lanes
- Windows 11 Pro
  - Note: TRIM enabled, debloated so all stubborn Microsoft and other bloatware got removed, and more tuning done.

The saving process seems to be divided into several steps: upon triggering it, CPU usage increases but disk usage does not, which leads me to assume compression is happening. Though I’d have thought all data is already available and compressed in KNIME tables or the like. Disk usage actually never spikes during the save.
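To sanity-check that kind of assumption outside of KNIME, a rough sketch like the one below times a CPU-bound compression step separately from the disk write. It uses Python's standard `zlib` and is not KNIME's actual save path; the function name `time_compress_and_write` and the synthetic payload are made up for illustration.

```python
import os
import tempfile
import time
import zlib


def time_compress_and_write(payload, level=6):
    """Time compression and disk write separately (hypothetical helper)."""
    # CPU-bound part: compress the payload in memory.
    start = time.perf_counter()
    compressed = zlib.compress(payload, level)
    compress_seconds = time.perf_counter() - start

    # I/O-bound part: write the compressed bytes and force them to disk.
    start = time.perf_counter()
    with tempfile.NamedTemporaryFile(delete=False) as handle:
        handle.write(compressed)
        handle.flush()
        os.fsync(handle.fileno())
        path = handle.name
    write_seconds = time.perf_counter() - start
    os.remove(path)  # clean up the temporary file

    return {
        "raw_bytes": len(payload),
        "compressed_bytes": len(compressed),
        "compress_seconds": compress_seconds,
        "write_seconds": write_seconds,
    }


# Synthetic, highly repetitive payload of about 12 MB; real table data will behave differently.
payload = b"row,123,abc\n" * 1_000_000
result = time_compress_and_write(payload)
print(result)
```

If the compression time clearly dominates the write time for a realistic payload, that would at least be consistent with seeing CPU load but hardly any disk activity.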

PPS: I have my workspace located on the second SSD, separate from the OS. Still, I see data being cached in the user's cache folder. That further strengthens the case for splitting disk load but equally raises the question of possible data transfer bottlenecks. Worth noting that disk usage didn’t exceed 1 %, and I have tried putting strain on disk utilization before.

Best

Mike