I usually run large, complex workflows that orchestrate many nodes reading from and writing to databases and modifying data. In a typical workflow, tens of nodes execute either in parallel or in loops, so by the time the workflow finishes, hundreds of nodes have been executed. In most cases, the log file KNIME Analytics Platform produces has been of little use for investigating problems, because it doesn’t cover the whole workflow.

I learnt that KNIME writes log entries to a knime.log file until its size reaches 10MB. After that, it archives the content to a file knime.log.old.gz and continues writing entries to a recreated knime.log file. Since it always overwrites the archive file, all earlier entries are lost. I have the log level set to DEBUG, which is crucial for finding the information I need. As a result, I never have a complete log of a whole workflow invocation.

What I need is a way to configure log file rotation the way you know it from logging frameworks. For instance, in Log4J you can set the size of a single log file (10MB is OK) and the number of old log files to keep, which is exactly what I miss in KNIME.

As a workaround, I got used to forwarding the log file KNIME produces to a catch-all file using a terminal command:

tail -F knime.log | tee knime.permanent.log

This ensures I have all log entries available after my workflow finishes.
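When the tail command was not running during a workflow, the most that can still be recovered is the single archive plus the live file. A minimal sketch of stitching the two together, assuming both files sit in the same directory and use the names mentioned above (the function name `merge_knime_logs` and the output name `knime.merged.log` are made up for illustration):

```python
import gzip
import shutil
from pathlib import Path


def merge_knime_logs(log_dir, merged_name="knime.merged.log"):
    """Concatenate knime.log.old.gz (if present) and knime.log, oldest first."""
    log_dir = Path(log_dir)
    archive = log_dir / "knime.log.old.gz"  # the one archive KNIME keeps
    current = log_dir / "knime.log"         # the live log file
    merged = log_dir / merged_name          # hypothetical output name
    with open(merged, "wb") as out:
        if archive.exists():
            # Decompress the archived entries first, since they are older.
            with gzip.open(archive, "rb") as src:
                shutil.copyfileobj(src, out)
        if current.exists():
            with open(current, "rb") as src:
                shutil.copyfileobj(src, out)
    return merged
```

This only recovers entries that survived the last rotation; anything overwritten in an earlier archive is still gone.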

Hi @jan_lender,

you can use a custom log4j config as described in this post:

best,

Gabriel

You can adjust the maximum log file size with the system property knime.logfile.maxsize in the knime.ini. It takes a value suffixed with “m” (for megabytes), e.g. -Dknime.logfile.maxsize=100m.

Hi Gabriel,

Thank you for your reply. Is this solution applicable to the KNIME IDE, or is it relevant for batch execution only? I tried adding a reference to my log4j.xml as a -Dlog4j.configuration parameter in my knime.ini file, but it didn’t seem to change anything.

This is the content of my /Applications/KNIME 3.6.0.app/Contents/Eclipse/Knime.ini:

-startup
../Eclipse/plugins/org.eclipse.equinox.launcher_1.4.0.v20161219-1356.jar
--launcher.library
../Eclipse/plugins/org.eclipse.equinox.launcher.cocoa.macosx.x86_64_1.1.551.v20171108-1834
-vm
../Eclipse/plugins/org.knime.binary.jre.macosx.x86_64_1.8.0.152-02/jre/Home/lib/jli/libjli.dylib
-vmargs
-server
-Dsun.java2d.d3d=false
-Dosgi.classloader.lock=classname
-XX:+UnlockDiagnosticVMOptions
-XX:+UnsyncloadClass
-Dsun.net.client.defaultReadTimeout=0
-XX:CompileCommand=exclude,javax/swing/text/GlyphView,getBreakSpot
-Xmx4g
-XstartOnFirstThread
-Xdock:icon=../Resources/Knime.icns
-Dorg.eclipse.swt.internal.carbon.smallFonts
-Dknime.database.fetchsize=1000
-Dlog4j.configuration=/etc/knime/log4j.xml

This is the content of /etc/knime/log4j.xml:

<?xml version="1.0" encoding="UTF-8"?>
<log4j:configuration xmlns:log4j="http://jakarta.apache.org/log4j/"
    debug="true">
  <appender name="knime" class="org.apache.log4j.RollingFileAppender">
    <param name="Encoding" value="UTF-8" />
    <param name="file" value="/var/log/knime/ide/knime.log" />
    <param name="MaxFileSize" value="50MB" />
    <param name="MaxBackupIndex" value="10" />
    <layout class="org.apache.log4j.PatternLayout">
      <param name="ConversionPattern" value="%d{yyyy-MM-dd'T'HH:mm:ss.S} %-5p %t %c - %m%n" />
    </layout>
  </appender>
  <root>
    <priority value="trace" />
    <appender-ref ref="knime" />
  </root>
</log4j:configuration>

After I restart KNIME Analytics Platform, nothing about logging changes. It produces its log where it always does, and my target directory remains empty.

Hi @jan_lender,

I am sorry for the late reply. I hope I can still help you out.

The best way to create a custom config is to start out with the original configuration file, which can be found in your workspace as .metadata/log4j3.xml. Make a copy and edit it as desired, then point your KNIME Analytics Platform to it, either via the VM parameter or by replacing the .metadata/log4j3.xml file. You can always revert to the defaults by deleting this file and restarting KNIME Analytics Platform; it will be recreated during the startup process.

As an example, I just added an appender to the config file as follows:

<appender name="rollingFile" class="org.apache.log4j.RollingFileAppender">
  <param name="file" value="/home/gabriel/log/application.log" />
  <param name="MaxFileSize" value="10MB" />
  <param name="MaxBackupIndex" value="5" />
  <layout class="org.apache.log4j.PatternLayout">
    <param name="ConversionPattern" value="%d{yyyy-MM-dd HH:mm:ss} %-5p %m%n" />
  </layout>
</appender>

Don’t forget to add it to the root logger config as well, which is located at the end of the config file:

<root>
  <level value="error" />
  <appender-ref ref="stdout" />
  <appender-ref ref="stderr" />
  <appender-ref ref="logfile" />
  <appender-ref ref="knimeConsole" />
  <appender-ref ref="rollingFile" />
</root>

best,

Gabriel

Hi there,

Thanks for this explanation. Writing errors to a log file other than knime.log worked for me. But do you know if it is possible to have one log file per workflow?

For now, all errors of all workflows are written to the same file, which does not help in locating an error.

Thanks in advance.

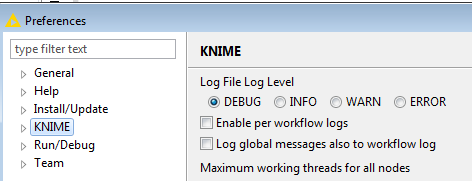

Hi, I already checked this. It just selects the level of log messages written to the file (DEBUG, INFO, etc.),

but it does not create one log file per workflow.

Do you have more information about “Enable per workflow logs”? For me, nothing changes.

Thanks

Hi @Amine,

I just tested it, and the option “Enable per workflow logs” does create a log file for each workflow. Where did you look for the workflow log file? It is in the workflow folder, next to the node folders.

Br,

Ivan
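A rough sketch for locating these per-workflow logs in a workspace. It assumes that a workflow folder can be recognised by a `workflow.knime` file and that the per-workflow log is called `knime.log`; both names are assumptions here, so the log name is left as a parameter, and `find_workflow_logs` is just an illustrative helper:

```python
from pathlib import Path


def find_workflow_logs(workspace, log_name="knime.log"):
    """Return log files that sit directly inside a workflow folder."""
    workspace = Path(workspace)
    hits = []
    # Assumption: every workflow folder contains a workflow.knime file.
    for marker in workspace.rglob("workflow.knime"):
        candidate = marker.parent / log_name
        if candidate.is_file():
            hits.append(candidate)
    return sorted(hits)
```

Calling `find_workflow_logs("/path/to/knime-workspace")` returns only logs that sit beside a workflow, so a global log stored elsewhere in the workspace is not picked up.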

Hi,

I didn’t check in those folders, thank you very much !

What is the best way to create one file per run, so that the data in knime.log doesn’t get overwritten?

Hi @ijoel92,

I would try external logic (a script) that parses the log file. You can create a KNIME workflow that starts such a script.

Br,

Ivan
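One simple form such an external script could take is to copy knime.log to a timestamped file after each run, instead of parsing it. This is only a sketch: `snapshot_log`, the archive directory and the file naming scheme are hypothetical, and the `now` parameter exists only to make the timestamp predictable.

```python
import shutil
from datetime import datetime
from pathlib import Path


def snapshot_log(log_file, archive_dir, now=None):
    """Copy log_file into archive_dir under a timestamped name."""
    log_file = Path(log_file)
    archive_dir = Path(archive_dir)
    archive_dir.mkdir(parents=True, exist_ok=True)
    # Hypothetical naming scheme: <stem>-YYYYmmdd-HHMMSS<suffix>
    stamp = (now or datetime.now()).strftime("%Y%m%d-%H%M%S")
    target = archive_dir / f"{log_file.stem}-{stamp}{log_file.suffix}"
    shutil.copy2(log_file, target)
    return target
```

A snapshot only contains what is still in knime.log when it is taken. If a single run writes more than the maximum log size, raising knime.logfile.maxsize as described earlier in this thread would be needed as well.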

Hi All!

I’m facing a similar issue. I’ve read several posts about logging and log levels, but I haven’t managed to get it working. Let me explain.

My goal

Execute script.bat to launch a KNIME workflow and retrieve the log at INFO level in a certain location.

My problem

I’m able to write the log to a specific .txt file in the desired folder using stdout. However, I’ve tried editing the log4j3.xml file to allow INFO level in the log file, but the output is always at WARN level. I’m not sure what I’m missing.

FYI: the log level in the KNIME GUI is set to INFO (but I’ve read that it doesn’t matter for console execution).

I think the solution is not far off, but I can’t see how to fix it. If someone has any hints or tips regarding what I’m missing, they are very welcome.

Details

KNIME version: 4.2.3

log4j3.xml:

<!DOCTYPE log4j:configuration SYSTEM "log4j.dtd">
<log4j:configuration xmlns:log4j="http://jakarta.apache.org/log4j/">
  <appender name="consoleDebug" class="org.apache.log4j.ConsoleAppender">
    <layout class="org.apache.log4j.PatternLayout">
      <param name="ConversionPattern" value="%-5p\t %t %c{1}\t %.10000m\n" />
    </layout>
    <filter class="org.apache.log4j.varia.LevelRangeFilter">
      <param name="levelMin" value="INFO" />
      <param name="levelMax" value="ERROR" />
    </filter>
  </appender>
  <appender name="stdout" class="org.apache.log4j.ConsoleAppender">
    <layout class="org.apache.log4j.PatternLayout">
      <param name="ConversionPattern" value="%-5p\t %t %c{1}\t %.10000m\n" />
    </layout>
    <filter class="org.apache.log4j.varia.LevelRangeFilter">
      <param name="levelMin" value="INFO" />
      <param name="levelMax" value="ERROR" />
    </filter>
  </appender>
  <appender name="stderr" class="org.apache.log4j.ConsoleAppender">
    <param name="Target" value="System.err" />
    <layout class="org.apache.log4j.PatternLayout">
      <param name="ConversionPattern" value="%-5p\t %t %c{1}\t %.10000m\n" />
    </layout>
    <filter class="org.apache.log4j.varia.LevelRangeFilter">
      <param name="levelMin" value="ERROR" />
    </filter>
  </appender>
  <appender name="batchexec" class="org.apache.log4j.ConsoleAppender">
    <layout class="org.apache.log4j.PatternLayout">
      <param name="ConversionPattern" value="%-5p\t %t %c{1}\t %.10000m\n" />
    </layout>
    <filter class="org.apache.log4j.varia.LevelRangeFilter">
      <param name="levelMin" value="INFO" />
      <param name="levelMax" value="ERROR" />
    </filter>
  </appender>
  <appender name="logfile" class="org.knime.core.util.LogfileAppender">
    <layout class="org.knime.core.node.NodeLoggerPatternLayout">
      <!-- Supported parameter are
        %W = Workflow directory if available
             the depth can be specified e.g. %W{2} prints the name of the workflow and the folder it is located in
             if the depth is omitted the complete workflow directory is printed
        %N = Node name if available
        %Q = Qualifier as a combination of N (Node name) and c (category). Displays the category if the node name is not available.
             the depth can be specified and is used for the category e.g. %Q{1} prints only last index of the category
             if the depth is omitted the complete category is printed
        %I = Node id if available
             the depth can be specified e.g. %I{1} prints only last index of the node id
             if the depth is omitted the complete node id is printed
        %J = Job id if available
      -->
      <param name="ConversionPattern" value="%d{ISO8601} : %-5p : %t : %J : %c{1} : %N : %I : %m%n" />
    </layout>
  </appender>
  <appender name="knimeConsole" class="org.apache.log4j.varia.NullAppender">
    <!-- This appender is only used to retrieve the conversion pattern layout for the KNIME console output
         that is why we use the NullApender that does no log to any file. -->
    <layout class="org.knime.core.node.NodeLoggerPatternLayout">
      <!-- Supported parameter are
        %W = Workflow directory if available
             the depth can be specified e.g. %W{2} prints the name of the workflow and the folder it is located in
             if the depth is omitted the complete workflow directory is printed
        %N = Node name if available
        %Q = Qualifier as a combination of N (Node name) and c (category). Displays the category if the node name is not available.
             the depth can be specified and is used for the category e.g. %Q{1} prints only last index of the category
             if the depth is omitted the complete category is printed
        %I = Node id if available
             the depth can be specified e.g. %I{1} prints only last index of the node id
             if the depth is omitted the complete node id is printed
        %J = Job id if available
      -->
      <param name="ConversionPattern" value="%-5p %-20Q{1} %-10I %.10000m\n" />
      <!-- Strict pattern that maintains the distance by truncating long qualifiers and node ids
      <param name="ConversionPattern" value="%-5p %-35.35Q{1} %-20.20I %.10000m\n" />
      -->
      <!-- Compact log pattern.
      <param name="ConversionPattern" value="%-5p %Q{1} %I %.10000m\n" />
      -->
    </layout>
  </appender>
  <!--
    if you want to enable debug message for a specific package or class, add something like:
    <logger name="org.knime.dev.node.xyz">
      <appender-ref ref="debug"/>
    </logger>
  -->
  <logger name="org.knime">
    <level value="all" />
  </logger>
  <logger name="com.knime">
    <level value="all" />
  </logger>
  <logger name="org.knime.core.node.workflow.BatchExecutor">
    <appender-ref ref="batchexec" />
  </logger>
  <logger name="com.knime.product.headless.ReportBatchExecutor">
    <appender-ref ref="batchexec" />
  </logger>
  <root>
    <level value="info" />
    <appender-ref ref="stdout" />
    <appender-ref ref="stderr" />
    <appender-ref ref="logfile" />
    <appender-ref ref="knimeConsole" />
    <appender-ref ref="consoleDebug" />
  </root>
</log4j:configuration>
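With a config of this size it is easy to lose track of which level is set where. The following sketch lists the root level, the appenders attached to the root logger and the LevelRangeFilter bounds of each appender. It only reports what the file says and makes no claim about settings KNIME may apply on top of it; `summarize_log4j` is an illustrative helper, not part of KNIME or log4j.

```python
import xml.etree.ElementTree as ET


def summarize_log4j(xml_text):
    """Return root level, root appender refs and per-appender filter bounds."""
    config = ET.fromstring(xml_text)
    appenders = {}
    for appender in config.findall("appender"):
        # Collect the <param> entries of the appender's <filter>, if any.
        bounds = {p.get("name"): p.get("value")
                  for p in appender.findall("filter/param")}
        appenders[appender.get("name")] = (bounds.get("levelMin"),
                                           bounds.get("levelMax"))
    root_level, root_refs = None, []
    root_logger = config.find("root")
    if root_logger is not None:
        # log4j 1.x accepts either <level> or the older <priority> element.
        level = root_logger.find("level")
        if level is None:
            level = root_logger.find("priority")
        if level is not None:
            root_level = level.get("value")
        root_refs = [r.get("ref") for r in root_logger.findall("appender-ref")]
    return {"root_level": root_level, "root_refs": root_refs,
            "appenders": appenders}
```

Reading the workspace’s .metadata/log4j3.xml into a string and passing it to `summarize_log4j` shows, for example, that the `logfile` appender in the config above has no level filter of its own, while `stderr` only has a lower bound of ERROR.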