Scott Fincher and Cynthia Padilla from KNIME held a webinar on the new functionality now available in the release of KNIME Analytics Platform 4.2 and KNIME Server 4.11.0. Highlights include:

New Features for Enterprise Data Science Challenges:

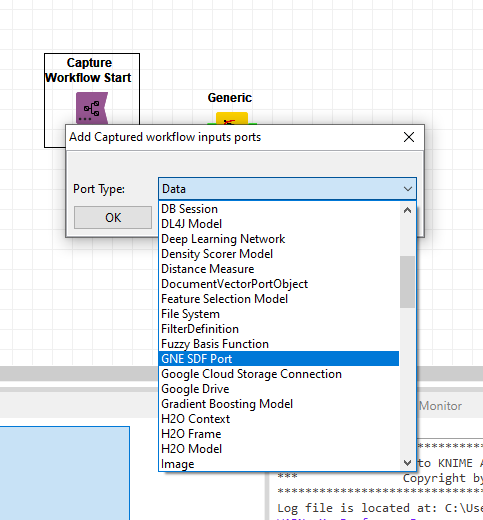

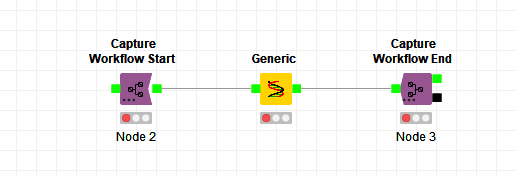

- Integrated Deployment

- Elastic and Hybrid Deployments

- Metadata Mapping with Workflow Summary

- Guided Analytics

New Sharing Features, Integrations, and Improved Performance for KNIME Users:

- Public and Private Spaces on KNIME Hub

- New Connector Nodes, e.g. Microsoft SharePoint, Amazon DynamoDB, Salesforce, and SAP, plus TensorFlow 2 integration

- Performance and UX Improvements

Here are the Q&As discussed at the webinar.

Q&As

I believe SAP Theobald is a paid integration service. Can you tell us more about it?

Yes, that's right. Theobald is separate commercial software. There’s a blog article about how SAP Theobald can be used in KNIME here.

Is there a way to install the new 4.2 release without uninstalling an existing installed version?

In this release we couldn’t offer an update path because of larger changes to the underlying framework (Java and Eclipse). This shouldn’t happen often, but sometimes it does. There’s been some discussion about this in the forum, which might be interesting to read here and here.

Where can the new OAuth be enabled?

You’ll find more information in our documentation here.

Is there by chance a node for PATCH REST API requests yet?

Not yet, but we do have an internal ticket for it, and it is something we are thinking about.

Is metadata mapping just for KNIME Server?

No, you can create workflow summaries with metadata mapping using KNIME Analytics Platform as well.

Do you have KNIME components that can not only read from but also write to Neo4j?

Yes, one of our partners has developed an extension to connect to, read from, and write to Neo4j. You can find the nodes on the KNIME Hub: https://hub.knime.com/search?q=neo4j

KNIME in the cloud: How do you protect our data in the cloud and the network communication in between?

KNIME does not store any of your data, nor does it have access to it. Data is only stored in a different location if you personally decide to do so, for instance by using a File or Database Writer node to store data in an alternative location. Users connect to data with their own credentials, and the administrator can add further security, for example SSH.

Is auto scaling available on Azure?

Not yet, but we are working on making this available soon.