Hi,

which is the best/easiest way to extract relevant data from JSON to a table?

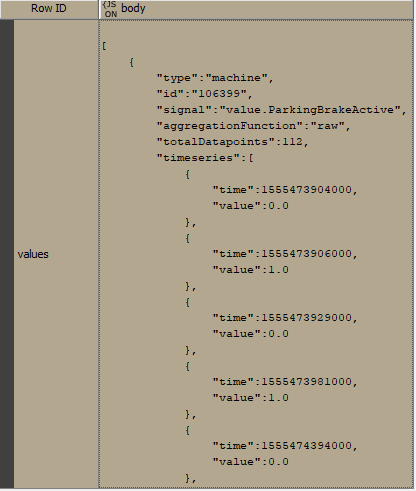

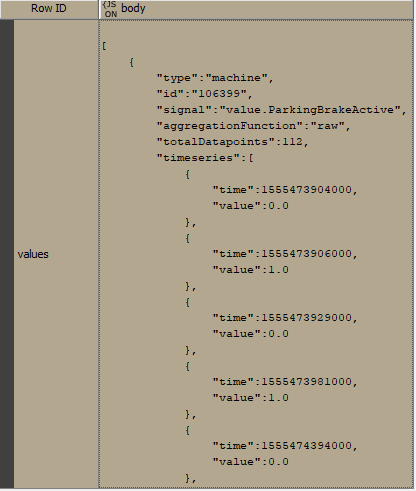

JSON looks like:

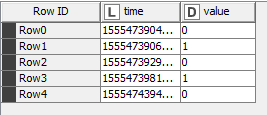

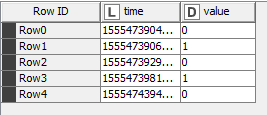

This is what my table should look like:

Thomas

Hi,

which is the best/easiest way to extract relevant data from JSON to a table?

JSON looks like:

This is what my table should look like:

Thomas

Hi Armin,

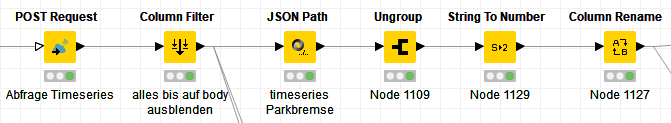

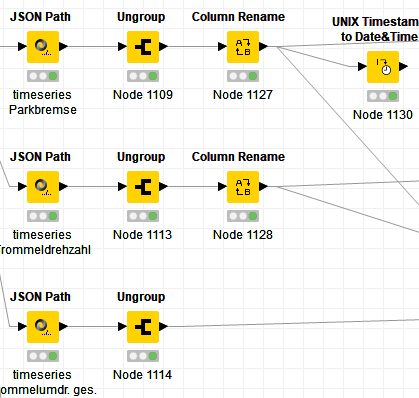

I used JSON Path - thanks for your suggestion, but it looks very complicated now:

I also have the problem that String to Number does only support one data type.

Is there a better way to do that?

Best,

Thomas

Is it possible for you to provide the JSON file or your workflow here?

A sample would help. Then I can do what you want and send it back to you.

In the meantime you may want to take a look at these nodes as well:

JSON to Table

JSON To XML: After converting your JSON to XML, you can use XPath node to extract your data. You may find working with XPath node easier as you can set the configuration to put values in multiple rows and retain the type you want so all the three steps in the picture (path, ungroup and conversion) can be done in a single step.

Best,

Armin

P.S. In the configuration window of the JSON Path node, you can double click on “Output column” and set the type so you don’t have to use “String To Number”. But you still need to ungroup in this case.

MachineData.json (24.0 KB)

Hi Armin,

Attached you will find my json data. As you see there are 3 different timeseries within the file. I used the JSONPath Online Evaluator (jsonpath.com) to find out the parameters for JSON path and was able to extract the timeseries of each signal (btw: what is the easiest way to iterate over the 3 different timeseries?).

Your tipp with the double click helped me to access my values!

So at the moment my workflow looks like this:

I tried “JSON to Table” but I was not able to extract my 3 timeseries.

Next I will try “JSON to XML”.

Best,

Thomas

Hey Tobi,

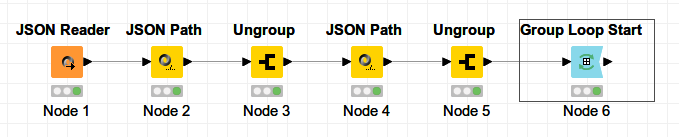

you can go this way with two JSON Path and iterate over the three series with group loop start

Hope it helps

Andrewjson_path.knwf (12.2 KB)

Actually it would be possible to extract all three elements in a single XPath node if the supported version was at least XPath 2.0. Using this XPath:

for $i in //signal return for $j in //signal[text()=$i]/following-sibling::timeseries/time return $i

would give us the signals in a way that can be input alongside the other two elements:

//time

//value

having all of them in multiple rows.

Maybe it’s possible to get the same output in XPath 1.0 but I do not know how.

It would be nice to see the XPath node supporting newer versions. @ScottF @ipazin

Best,

Armin

Thanks!

Hi Andrew,

I am also trying to convert my JSON file to table through KNIME, I have gone through the your workflow ‘json_path.knwf’ which helped me to understand the flow for json data parsing to some extent, but in my json I have nested JSON and inside nested , there is array of json. I am attaching the sample json. if you can help for this conversion. in output I am expecting 4 rows in total as below:

2 rows for “PsnameId”: “123-7” ( 1 for “RID”: “11” and 1 for “RID”: “12”)

2 rows for “PsnameId”: “897-1” ( 1 for “RID”: “11” and 1 for “RID”: “12”)

and remaining tags common to 4 rows.

mysample.json (1.1 KB)

I hope this is a possible solution. I extracted only the arrays Instances and Obserts and some other columns as example (mod, Person.Gender). The result is m*n, Invests * Obserts.

Best,

Andrew

jsonExample.knwf (13.3 KB)

Thanks a lot Andrew:)

Correct , even I have same question regarding this approach since this is just a sample json we had used,how can we more generalize the process to parse such JSON files, if number of entries in instances and Obserts are not same in all JSON files.

my answer was not correct. I’m sorry. The result is m*n, Invests * Obserts. I edited my first reply.

Best,

Andrew

Np Andrew and thanks for your quick clarification:)

So we can use this approach to parse JSON for as many entries in m and n , as the result would always be m*n

Thanks once again!

Can you please check below 2 doubts:-

Hi Andrew,

Regarding the previous answer, that the solution you provided will always give (Invests * Obserts),now there is slight change in requirement, instead of direct investObserts, in my output my expectation is- Invests having RID 11 should have two rows one for for RstID 11 and one for RstID 12 and Invests having RID 12 should have 1 row for RstID 13 and 1 row for RstID 14 , so total 4 rows(and not 24=8) in output and remaining fields will be common to these 4 rows. So we need to put some conditional statement for the expected output. JSON file is also modified with one more parameter for this condition.can you please check and guide me if it is possible. Sampe JSON file is attached.maybe you ceck the attached JSOn and tell me what condition I have to put to achieve this output.mysample12.json (1.7 KB)

If I want to read more than 1 JSON files(same pattern but with different data) at the same time and parse into the table at the same time,Is that possible through this approach?

here is a more generic approach. It based on your nested JsonFile with followed Arrays:

So you will find more than 1 JsonPath Ungroup Combinations.

I’ve separated the Instance and Obsert-Arrays in two parallel flows and joined the data after extraction.

Based on your request to process more than one json file parallel, I embedded the workflow in two loops:

first a Parallel Chunk Loop to parallel the followed workflow and then a Table Row to Variable Loop Start for each JsonFile within a chunk, if there are more files per chunk.

An example result with 3 JsonFiles:

BTW: There is an error in your mysample12.json File: the ObsertsArray must be [11,12] or [“11”,“12”].

Best regards

Andrew

jsonExample.knwf (29.9 KB)

Data.knar (2.1 KB)

Hi @Andrew_Steel,

Thanks a lot for the solution.

For reading more than 2 JSON files, I want to read files from S3 location directly which can be around 10-20 files at a time.Is it possible to read these JSON files from S3 location through List Files node?

I think so. There a some Amazon S3 Nodes. Have a look at this example

01_Amazon_S3_Remote_File_Example

Best

Andrew

BTW: I can’t download the workflow with KNIME 4.0.0. Error: Unable to download workflow: Download failed: The entity is not backed by an input stream, entity class is : java.io.InputStream.

With KNIME 3.7.2 the download works fine.

Hi @ipazin,

I can’t download any of the workflows from the example server with knime 4.0.0. Neither on my workstation nor on my laptop. On both I use Ubuntu 18.04.2 LTS.

So far no error has occurred when I used KNIME 4.0.0 in the past. The import and export of workflows from the forum works fine.

At work I installed a fresh KNIME 4.0.0 with OS CentOS 7 and the download works fine too.

OS problems?

Best

Andrew